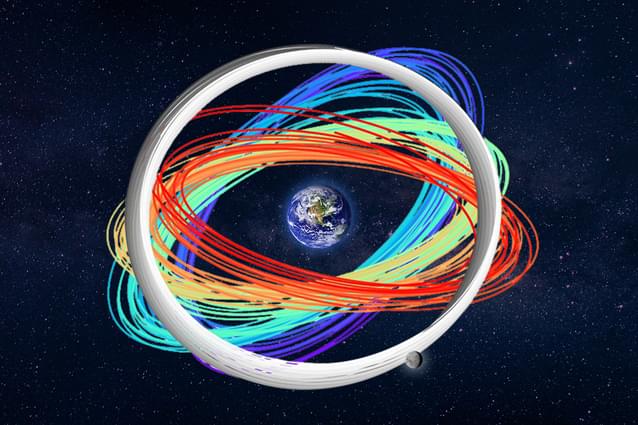

Satellites and spacecraft in the vast region between the earth and moon and just beyond, called cislunar space, are crucial for space exploration, scientific advancement and national security. But figuring out exactly where to place them in a stable orbit can be a huge, computationally expensive challenge.

Researchers at Lawrence Livermore National Laboratory (LLNL) have simulated one million orbits in cislunar space and published them in an open-access database, along with publicly available code. The effort, enabled by supercomputing resources at the Laboratory, provides valuable data that can be used to plan missions, predict how small perturbations might change orbits and monitor space traffic.

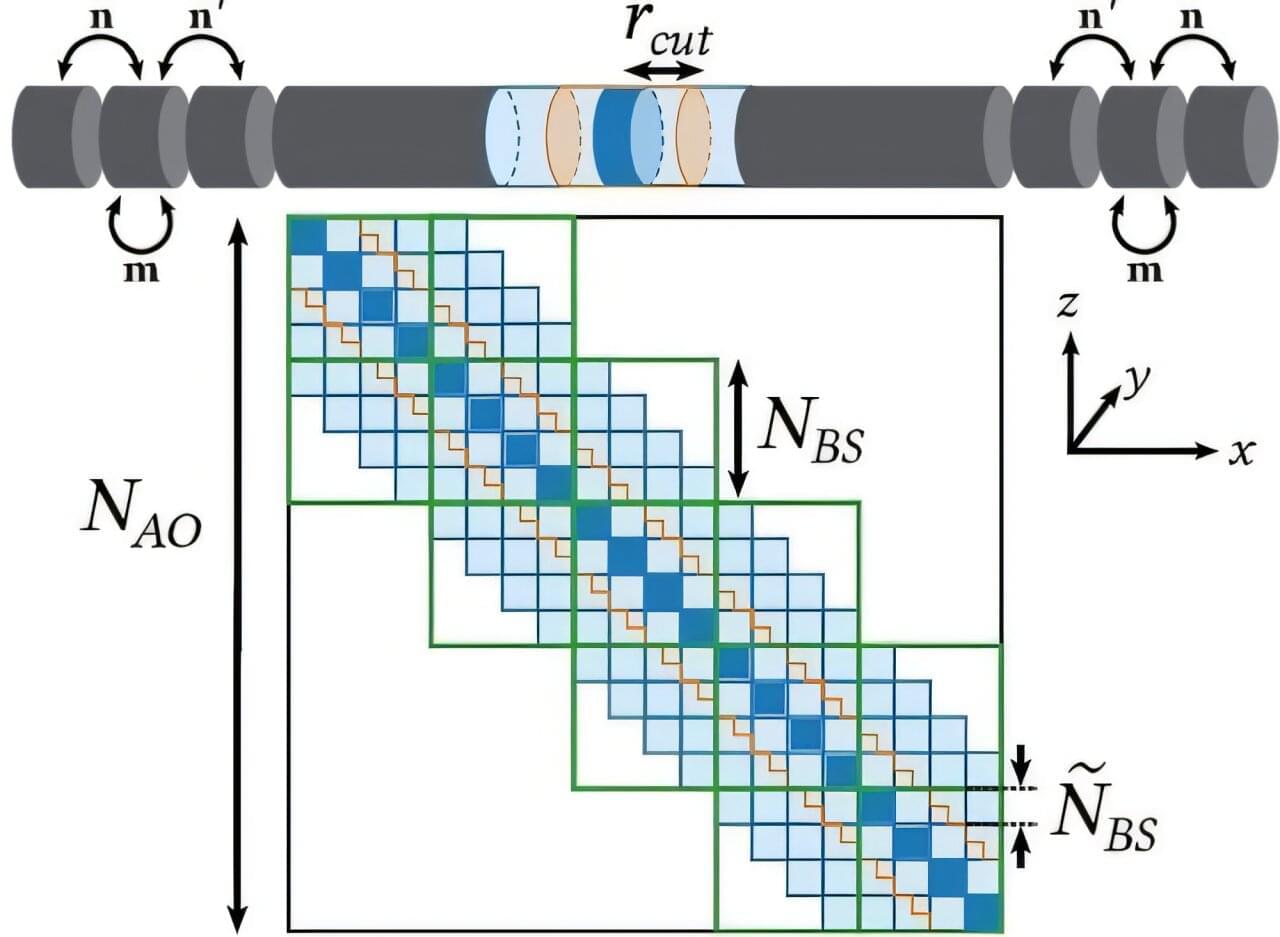

To begin, the Space Situational Awareness Python package takes in a range of initial conditions for an orbit, like how elliptical and tilted the orbit is and how far it gets from the earth.
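As a rough illustration of what a "range of initial conditions" could look like, the sketch below builds a small grid over eccentricity (how elliptical), inclination (how tilted) and apogee radius (how far the orbit gets from the earth). It does not use the Space Situational Awareness package or reflect its API; the function name `initial_conditions`, the dictionary keys and the sample values are all hypothetical. The derived quantities assume a simple two-body Keplerian orbit around the earth, which ignores the moon's gravity and so is only a crude starting estimate in cislunar space.

```python
import math
from itertools import product

# Earth's standard gravitational parameter in km^3/s^2.
MU_EARTH = 398600.4418


def initial_conditions(eccentricities, inclinations_deg, apogee_radii_km):
    """Yield one dict per combination of the three sampled quantities.

    Hypothetical helper for illustration only. Derived values assume an
    idealized two-body orbit around the earth (no lunar or solar gravity).
    """
    for e, inc, r_apo in product(eccentricities, inclinations_deg, apogee_radii_km):
        if not 0.0 <= e < 1.0:
            raise ValueError("eccentricity must be in [0, 1) for a closed orbit")
        # Apogee radius r_apo = a * (1 + e), so the semi-major axis is:
        a = r_apo / (1.0 + e)
        # Perigee radius is the closest approach to the earth's center.
        r_peri = a * (1.0 - e)
        # Kepler's third law gives the two-body orbital period.
        period_s = 2.0 * math.pi * math.sqrt(a**3 / MU_EARTH)
        yield {
            "eccentricity": e,
            "inclination_deg": inc,
            "apogee_km": r_apo,
            "perigee_km": r_peri,
            "semi_major_axis_km": a,
            "period_days": period_s / 86400.0,
        }


# Sample values are arbitrary; 384,400 km is roughly the earth-moon distance.
grid = list(
    initial_conditions(
        eccentricities=[0.0, 0.3, 0.6],
        inclinations_deg=[0.0, 30.0, 60.0],
        apogee_radii_km=[200_000.0, 384_400.0],
    )
)
print(f"{len(grid)} candidate orbits")
```

Each entry in `grid` would then be handed to an orbit propagator. A full-factorial grid like this grows quickly with the number of sampled values, which hints at why simulating on the order of a million orbits calls for supercomputing resources.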