## Introduction

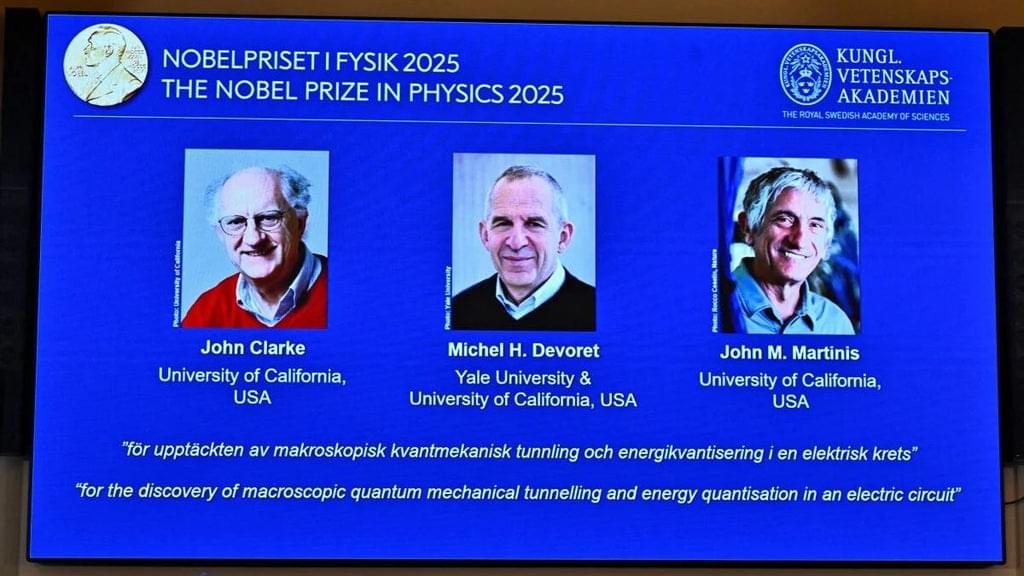

Grounded in the scientific method, this analysis critically examines the work’s methodology, empirical validity, broader implications, and opportunities for advancement, with the aim of fostering deeper understanding and iterative progress in quantum technologies.

## Executive Summary

This work, based on experiments conducted in 1984–1985, addresses a fundamental question in quantum physics: how large a system can be while still displaying quantum effects.

By engineering a circuit built around a Josephson junction, in which billions of Cooper pairs behave collectively as a single quantum entity, the laureates provided empirical evidence that quantum phenomena such as tunneling through an energy barrier and quantized energy levels can appear in a device large enough to hold in the hand.
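
The two effects named above can be made concrete with the textbook model of a current-biased Josephson junction, in which the junction's phase difference δ behaves like a particle in a tilted "washboard" potential U(δ) = -E_J (cos δ + s δ), where s is the bias current divided by the critical current. The minimal Python sketch below assumes that model together with the standard zero-temperature, zero-damping estimate for tunneling out of a cubic well. The function names `washboard` and `mqt_escape_rate` and the device parameters (10 μA critical current, 5 pF capacitance, 99% bias) are hypothetical, chosen for illustration rather than taken from the 1984–1985 experiments.

```python
import math

# Physical constants (exact SI values)
HBAR = 1.054571817e-34             # reduced Planck constant, J*s
E_CHARGE = 1.602176634e-19         # elementary charge, C
PHI0 = math.pi * HBAR / E_CHARGE   # flux quantum h/(2e), Wb


def washboard(i0: float, c: float, s: float) -> tuple[float, float]:
    """Barrier height (J) and plasma frequency (rad/s) of a current-biased junction.

    Assumed model: U(delta) = -E_J * (cos(delta) + s * delta), with s = I / I0 in (0, 1).
    """
    if not 0.0 < s < 1.0:
        raise ValueError("bias ratio s must lie in (0, 1)")
    e_j = i0 * PHI0 / (2 * math.pi)  # Josephson coupling energy
    # Well minimum sits at asin(s), barrier top at pi - asin(s)
    delta_u = e_j * (2 * math.sqrt(1 - s * s) - s * (math.pi - 2 * math.asin(s)))
    # Small oscillations in the well: curvature E_J*sqrt(1 - s^2), "mass" C*(Phi0/2pi)^2
    omega_p = math.sqrt(2 * math.pi * i0 / (PHI0 * c)) * (1 - s * s) ** 0.25
    return delta_u, omega_p


def mqt_escape_rate(delta_u: float, omega_p: float) -> float:
    """Zero-temperature, zero-damping tunneling rate (1/s) out of a cubic well."""
    x = delta_u / (HBAR * omega_p)  # barrier height in units of the level spacing
    prefactor = math.sqrt(864 * math.pi * x)
    return prefactor * omega_p / (2 * math.pi) * math.exp(-7.2 * x)


# Hypothetical device biased at 99% of its critical current
du, wp = washboard(10e-6, 5e-12, 0.99)
print(f"barrier height ≈ {du / (HBAR * wp):.2f} level spacings")
print(f"tunneling rate ≈ {mqt_escape_rate(du, wp):.2e} per second")
```

As the bias approaches the critical current, the barrier shrinks until the well holds only a few quantized levels, and the exponential factor makes the tunneling rate extremely sensitive to the bias; this sensitivity is what makes the escape rate a usable experimental probe.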

This breakthrough bridges microscopic quantum mechanics and macroscopic engineering, laying the groundwork for quantum technologies such as quantum computing, quantum cryptography, and quantum sensing.

The work’s main strengths are rigorous experimental validation and far-reaching implications for quantum information science. Gaps remain, however: circuits of this kind must be cooled far below room temperature, and environmental decoherence has not been fully suppressed.
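
Why room-temperature operation is out of reach can be checked with a back-of-the-envelope comparison of thermal energy and the level spacing. The sketch below assumes a hypothetical plasma frequency of 5 GHz and uses the low-damping crossover estimate T_cr ≈ ħω_p/(2πk_B), below which tunneling rather than thermal activation governs escape; the function names are illustrative only.

```python
import math

H = 6.62607015e-34    # Planck constant, J*s
K_B = 1.380649e-23    # Boltzmann constant, J/K


def crossover_temperature(f_p: float) -> float:
    """Approximate thermal-to-quantum crossover temperature (K): h*f_p / (2*pi*k_B)."""
    return H * f_p / (2 * math.pi * K_B)


def thermal_occupation(f: float, t: float) -> float:
    """Mean Bose-Einstein occupation of a mode at frequency f (Hz) and temperature t (K)."""
    return 1.0 / math.expm1(H * f / (K_B * t))


f_p = 5e9  # hypothetical plasma frequency, Hz
print(f"crossover temperature ≈ {crossover_temperature(f_p) * 1e3:.0f} mK")
for t in (0.02, 300.0):
    print(f"T = {t} K: mean thermal quanta ≈ {thermal_occupation(f_p, t):.3g}")
```

At a few tens of millikelvin the mode is essentially in its ground state, whereas at room temperature it holds on the order of a thousand thermal quanta, so the discrete levels and tunneling signature are washed out.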

In a broader context, this award traces the evolution of quantum mechanics from theoretical curiosity to practical technology, building on earlier Nobel-recognized discoveries such as the Josephson effect (1973) and the BCS theory of superconductivity (1972).