When the Amaterasu particle entered Earth’s atmosphere, the TAP array in Utah measured its energy at more than 240 exa-electronvolts (EeV). Such particles are exceedingly rare and are thought to originate in some of the most extreme cosmic environments. At the time of its detection, scientists were not sure whether it was a proton, a light atomic nucleus, or a heavy (iron) atomic nucleus. Research into its origin pointed toward the Local Void, a vast region of space adjacent to the Local Group that contains few known galaxies or other objects.

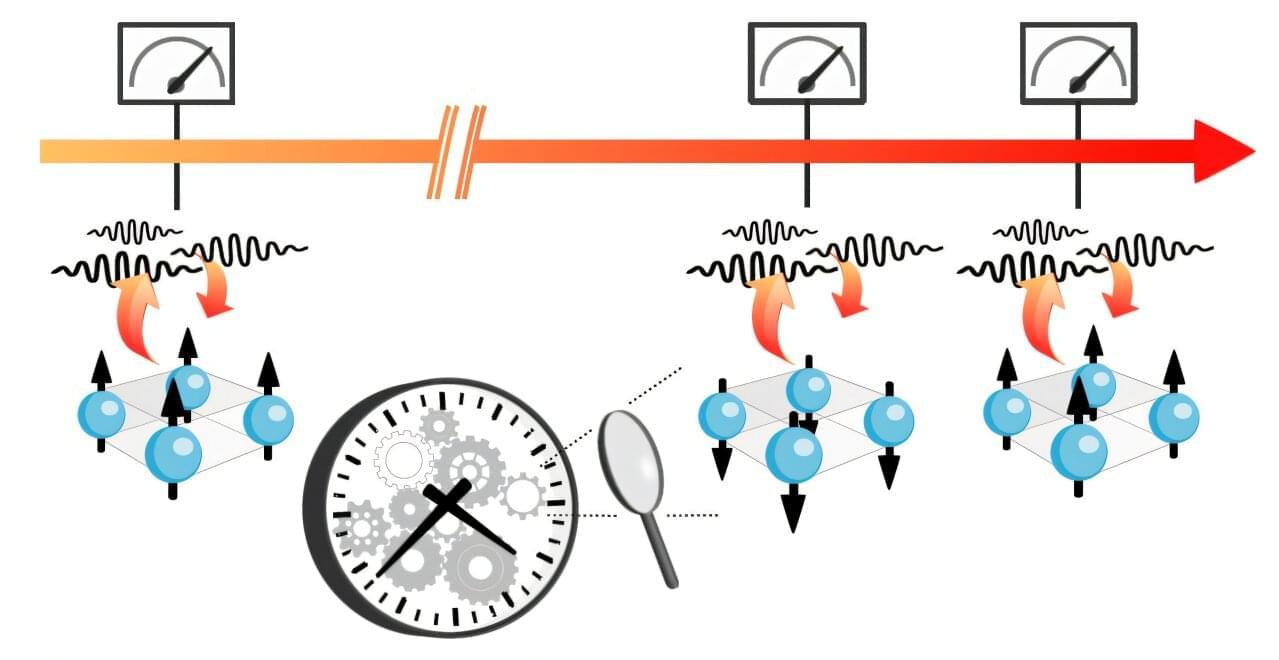

This posed a mystery for astronomers, as the region is largely devoid of sources capable of producing such energetic particles. Reconstructing the energy of cosmic-ray particles is already difficult, which makes the statistical search for their sources particularly challenging. Capel and Bourriche addressed this by combining advanced simulations with a modern statistical method, Approximate Bayesian Computation, to generate three-dimensional maps of how cosmic rays propagate and interact with magnetic fields in the Milky Way.
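
For readers unfamiliar with Approximate Bayesian Computation, the general idea can be shown with a toy example. The sketch below is not the analysis by Capel and Bourriche. It assumes a deliberately simple stand-in problem (estimating the mean of a Gaussian from 50 noisy measurements), and every name in it (`abc_rejection`, `simulate`, `prior_sample`, `distance`, the tolerance value) is hypothetical and chosen only for illustration. The pattern is what matters: draw a candidate parameter from a prior, run a simulation with it, and keep the candidate only if the simulated data land close enough to the observed data.

```python
import random
import statistics


def abc_rejection(observed, simulate, prior_sample, distance,
                  tolerance, n_draws, rng):
    """Basic ABC rejection sampling: keep prior draws whose simulated
    data fall within `tolerance` of the observed data."""
    accepted = []
    for _ in range(n_draws):
        theta = prior_sample(rng)            # candidate parameter from the prior
        simulated = simulate(theta, rng)     # forward-simulate data under theta
        if distance(simulated, observed) <= tolerance:
            accepted.append(theta)           # candidate is consistent with data
    return accepted


# Toy setup (an assumption for illustration, unrelated to cosmic-ray physics).
rng = random.Random(0)
TRUE_MU = 3.0
N_OBS = 50
observed = [rng.gauss(TRUE_MU, 1.0) for _ in range(N_OBS)]


def prior_sample(rng):
    # Flat prior over a wide range of possible means.
    return rng.uniform(-10.0, 10.0)


def simulate(mu, rng):
    # Forward model: unit-variance Gaussian measurements around mu.
    return [rng.gauss(mu, 1.0) for _ in range(N_OBS)]


def distance(a, b):
    # Compare data sets through a summary statistic (the sample mean).
    return abs(statistics.fmean(a) - statistics.fmean(b))


posterior = abc_rejection(observed, simulate, prior_sample, distance,
                          tolerance=0.2, n_draws=5000, rng=rng)
posterior_mean = statistics.fmean(posterior)
print(f"accepted {len(posterior)} of 5000 draws; "
      f"approximate posterior mean = {posterior_mean:.2f}")
```

The accepted values approximate the posterior distribution of the parameter without ever writing down a likelihood, which is why the approach suits problems where the forward simulation is far easier to run than to invert. A real application would replace the toy forward model with a physical simulation and would typically need a more efficient sampler than plain rejection.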