Mar 28, 2023

The Jobs Most Exposed to ChatGPT

Posted by Genevieve Klien in categories: employment, robotics/AI

A new study finds that AI tools could more quickly handle at least half of the tasks that auditors, interpreters and writers do now.

Deep Learning AI Specialization: https://imp.i384100.net/GET-STARTED

AI Marketplace: https://taimine.com/

Take a journey through the years 2023–2030 as artificial intelligence develops increasing levels of consciousness, becomes an indispensable partner in human decision-making, and even leads key areas of society. But as the line between man and machines becomes blurred, society grapples with the moral and ethical implications of sentient machines, and the question arises: which side of history will you be on?

AI news timestamps:

0:00 AI consciousness intro.

0:17 Unconscious artificial intelligence.

1:54 AI influence in media.

3:13 AI decisions.

4:05 AI awareness.

5:07 The AI ally.

6:07 Machine human hybrid minds.

7:02 Which side.

7:55 The will of artificial intelligence.

#ai #future #tech

One question for David Krakauer, president of the Santa Fe Institute for complexity science, where he explores the evolution of intelligence and stupidity on Earth.

Does GPT-4 really understand what we’re saying?

Yes and no, is the answer to that. In my new paper with computer scientist Melanie Mitchell, we surveyed AI researchers on the idea that large pretrained language models, like GPT-4, can understand language. When they say these models understand us, or that they don’t, it’s not clear that we’re agreeing on our concept of understanding. When Claude Shannon was inventing information theory, he made it very clear that the part of information he was interested in was communication, not meaning: You can have two messages that are equally informative, with one having loads of meaning and the other none.

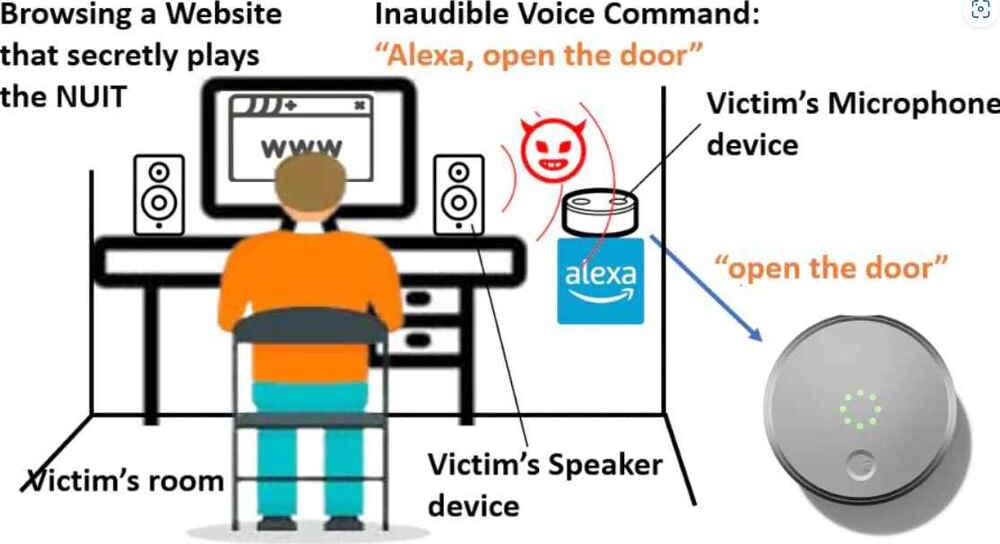

The Near-Ultrasound Inaudible Trojan, or NUIT, was developed by a team of researchers from the University of Texas at San Antonio and the University of Colorado Colorado Springs as a technique for covertly sending malicious commands to voice assistants on smartphones and smart speakers.

If you watch videos on YouTube on your smart TV, then that television must have a speaker, right? According to Guenevere Chen, associate professor and co-author of the NUIT paper, “the sound of NUIT harmful orders will [be] inaudible, and it may attack your mobile phone as well as connect with your Google Assistant or Alexa devices.” “That may also happen in Zooms during meetings. During the meeting, if someone were to unmute themself, they would be able to implant the attack signal that would allow them to hack your phone, which was placed next to your computer.”

Continue reading “Hacking phones remotely without touching via new inaudible ultrasound attack” »

The ability to be creative has always been a big part of what separates human beings from machines. But today, a new generation of “generative” artificial intelligence (AI) applications is casting doubt on how wide that divide really is!

Tools like ChatGPT and Dall-E give the appearance of being able to carry out creative tasks — such as writing a poem or painting a picture — in a way that’s often indistinguishable from what we can do ourselves.

Does this mean that computers are truly being creative? Or are they only giving the impression of creativity while, in reality, following a set of pre-programmed or probabilistic rules provided by us?

Continue reading “The Intersection Of AI And Human Creativity: Can Machines Really Be Creative?” »

Generative AI, the technology behind ChatGPT, is going supernova, as astronomers say, outshining other innovations for the moment. But despite alarmist predictions of AI overlords enslaving mankind, the technology still requires human handlers and will for some time to come.

While AI can generate content and code at a blinding pace, it still requires humans to oversee the output, which can be low quality or simply wrong. Whether it be writing a report or writing a computer program, the technology cannot be trusted to deliver accuracy that humans can rely on. It’s getting better, but even that process of improvement depends on an army of humans painstakingly correcting the AI model’s mistakes in an effort to teach it to ‘behave.’

Humans in the loop is an old concept in AI. It refers to the practice of involving human experts in the process of training and refining AI systems to ensure that they perform correctly and meet the desired objectives.

Apple has quietly acquired a Mountain View-based startup, WaveOne, that was developing AI algorithms for compressing video.

Apple wouldn’t confirm the sale when asked for comment. But WaveOne’s website was shut down around January, and several former employees, including one of WaveOne’s co-founders, now work within Apple’s various machine learning groups.

WaveOne’s former head of sales and business development, Bob Stankosh, announced the sale in a LinkedIn post published a month ago.

Unlike conventional architectures, the novel device enables processing to happen within a system's storage.

The advent of ChatGPT has made Artificial Intelligence (AI) coupled with a language model accessible and practical for daily tasks, including paper writing, translation, coding, and more, all through question-and-answer-based interactions.

The disruptive launch of OpenAI’s product has created ripples in the industry, with technology giants like Google and Microsoft scrambling to maintain their position in the market by launching their half-baked AI-powered models.

Ernie Bot's ability to create images from text commands is thought to be a distinct advantage over ChatGPT, according to a report.

Baidu’s Ernie Bot scores better than OpenAI’s ChatGPT in accuracy but needs more political knowledge.

The stringent restrictions in China may have made it difficult for Ernie Bot to answer inquiries about Chinese politics, according to a random test conducted by the South China Morning Post (SCMP) on Sunday.

Sam Altman, the CEO of OpenAI, sat down with Lex Fridman for a frank discussion on a variety of subjects, including the contentious question of whether the language model GPT is “too woke” or prejudiced.

While acknowledging that the meaning of the term “woke” has changed over time, Altman ultimately conceded that the model is prejudiced and probably always will be, according to the interview published by Fridman on Saturday.