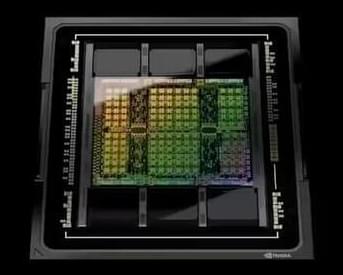

GPUs sell for tens of thousands of dollars, and GPU-maker Nvidia is worth over $2 trillion. But what is a GPU, exactly, and why is it special?

Tesla’s Optimus is taking baby steps while the OpenAI-backed Figure 1 is doing burnouts on the track.

All non-Google chat GPTs affected by side channel that leaks responses sent to users.

Amazon.com’s self-driving car unit, Zoox, is seeking to stay abreast of rival Waymo by expanding its vehicles’ testing in California and Nevada to include a wider area, higher speeds and nighttime driving.

ANYmal is a truly remarkable robot, capable of standing and lifting things like a humanoid, or slinking around on all fours like a quadruped, with or without wheels. But what’s really surprised us now is the eerie grace it’s starting to move with.

The development of a new theory is typically associated with the greats of physics. You might think of Isaac Newton or Albert Einstein, for example. Many Nobel Prizes have already been awarded for new theories. Researchers at Forschungszentrum Jülich have now programmed an artificial intelligence that has also mastered this feat. Their AI is able to recognize patterns in complex data sets and to formulate them in a physical theory.

In the following interview, Prof. Moritz Helias from Forschungszentrum Jülich’s Institute for Advanced Simulation (IAS-6) explains what the “Physics of AI” is all about and to what extent it differs from conventional approaches.

Figure has demonstrated the first fruit of its collaboration with OpenAI to enhance the capabilities of humanoid robots. In a video released today, the Figure 1 bot is seen conversing in real-time.

The development progress at Figure is nothing short of extraordinary. Entrepreneur Brett Adcock only emerged from stealth last year, after gathering together a bunch of key players from Boston Dynamics, Tesla, Google DeepMind and Archer Aviation to “create the world’s first commercially viable general purpose humanoid robot.”

By October, the Figure 1 was already up on its feet and performing basic autonomous tasks. By the turn of the year, the robot had watch-and-learn capabilities, and by mid-January it was ready to enter the workforce at BMW.