
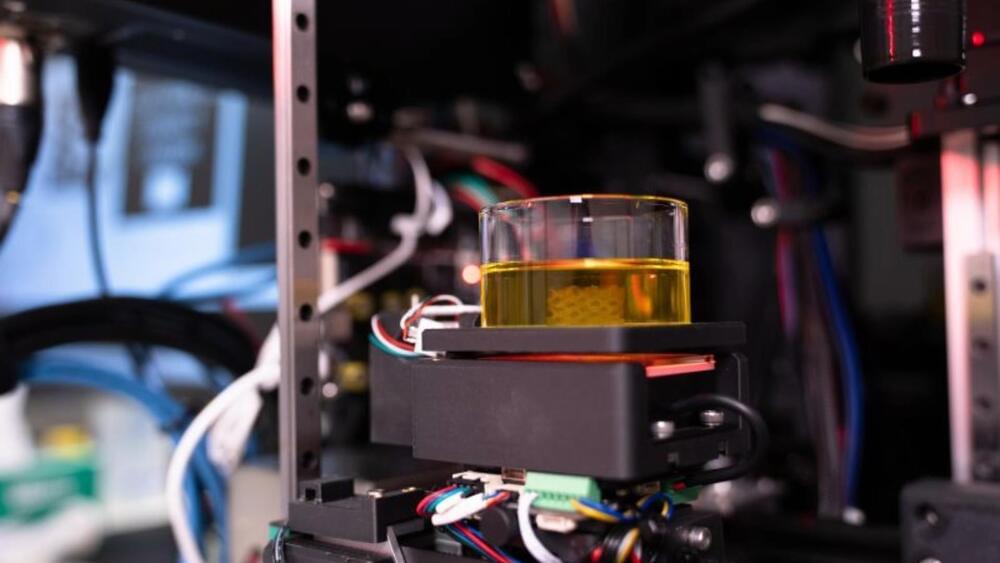

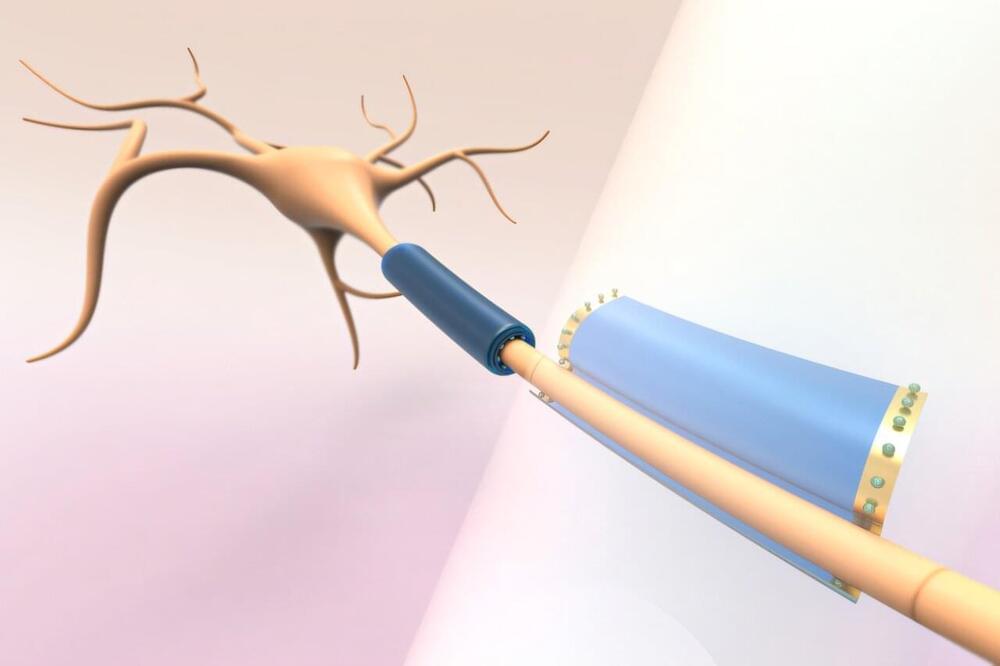

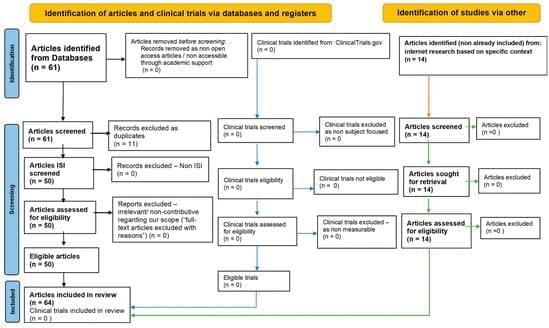

What can microgravity teach us about stem cell growth? A recent study published in npj Microgravity takes up this question. A pair of researchers from the Mayo Clinic reviewed past research on the growth properties of stem cells in microgravity, specifically regeneration, differentiation, and cell proliferation, and on whether the stem cells can maintain these properties after returning to Earth. The study could help researchers better understand how stem cell growth in microgravity can be translated into medical applications, including tissue growth for disease modeling.

“The goal of almost all space flight in which stem cells are studied is to enhance growth of large amounts of safe and high-quality clinical-grade stem cells with minimal cell differentiation,” said Dr. Abba Zubair, MD, who is a faculty member at the Mayo Clinic and the sole co-author of the study. “Our hope is to study these space-grown cells to improve treatment for age-related conditions such as stroke, dementia, neurodegenerative diseases and cancer.”
