Nov 21, 2022

Researchers at MIT Solve a Differential Equation Behind the Interaction of Two Neurons Through Synapses to Unlock a New Type of Speedy and Efficient Artificial Intelligence (AI) Algorithm

Posted by Dan Breeden in categories: information science, mathematics, robotics/AI

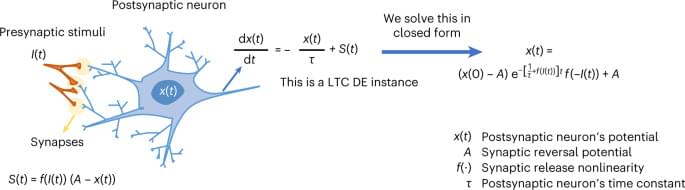

Continuous-time neural networks are a subset of machine learning systems capable of representation learning for spatiotemporal decision-making tasks. These models are frequently described by continuous differential equations (DEs). When run on computers, however, they rely on numerical DE solvers, which limits their expressive power. This restriction has severely hampered the scaling and understanding of many natural physical processes, such as the dynamics of neural systems.
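To make the role of the numerical solver concrete, here is a minimal sketch in Python. It is not the MIT model: it assumes a single generic leaky-integrator neuron, dx/dt = -x/tau + inp, with a constant input, and the function names and parameter values are hypothetical. It contrasts step-by-step forward Euler integration with the closed-form solution that this simple equation happens to have.

```python
import math


def euler_leaky_neuron(x0, inp, tau, t_end, n_steps):
    """Integrate dx/dt = -x/tau + inp with forward Euler (illustrative only)."""
    dt = t_end / n_steps
    x = x0
    for _ in range(n_steps):
        # One solver step: the cost grows linearly with n_steps.
        x += dt * (-x / tau + inp)
    return x


def closed_form_leaky_neuron(x0, inp, tau, t):
    """Exact solution of the same ODE for constant input (assumed toy model)."""
    x_inf = inp * tau  # steady state the neuron relaxes toward
    return x_inf + (x0 - x_inf) * math.exp(-t / tau)


if __name__ == "__main__":
    exact = closed_form_leaky_neuron(0.0, 1.0, 0.5, 1.0)
    for n in (10, 100, 10_000):
        approx = euler_leaky_neuron(0.0, 1.0, 0.5, 1.0, n)
        print(f"steps={n:>6}  euler={approx:.6f}  error={abs(approx - exact):.2e}")
    print(f"closed form: {exact:.6f} (one evaluation, no stepping)")
```

In this toy case the solver needs many small steps to approach the value that the closed-form expression gives in a single evaluation, which illustrates the kind of cost a solver-based model pays.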

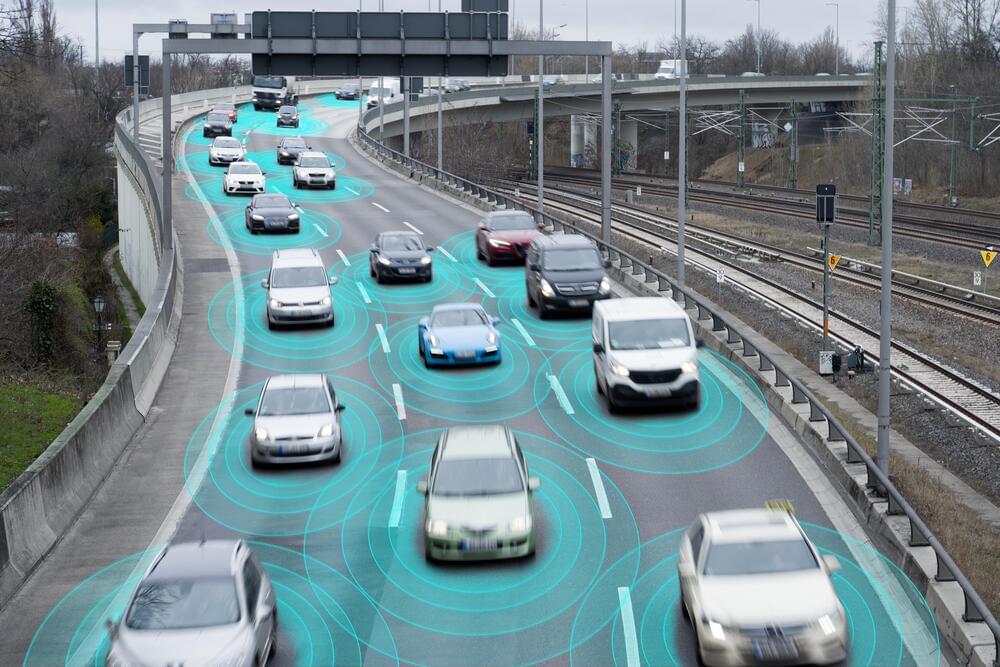

Inspired by the brains of microscopic creatures, MIT researchers have developed “liquid” neural networks, a class of fluid, robust ML models that can learn and adapt to changing situations. These models can be used in safety-critical tasks such as driving and flying.

However, as the number of neurons and synapses in the model grows, the underlying mathematics becomes more difficult to solve, and the computational cost of the model rises.