Nov 12, 2022

Clever Machines Learn How to Be Curious

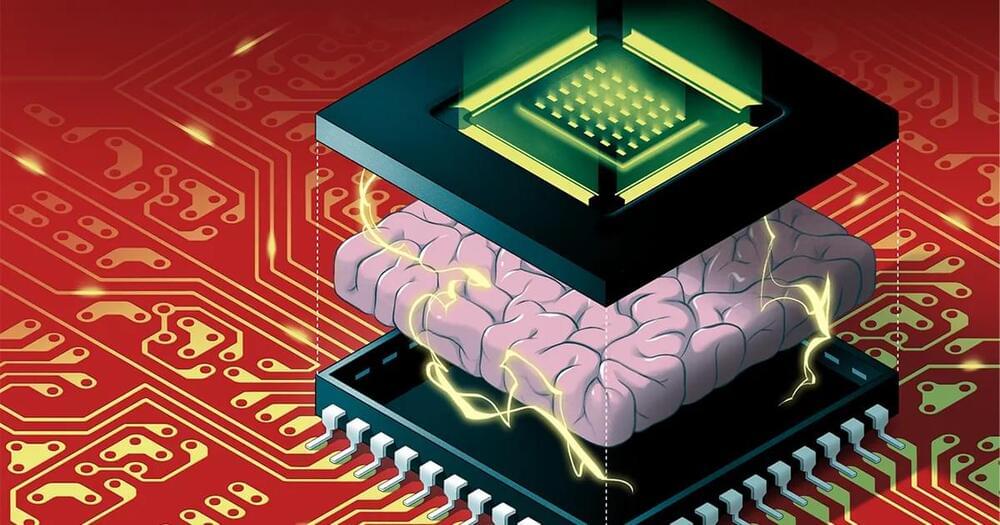

Posted by Dan Breeden in categories: computing, information science, neuroscience

“You can think of curiosity as a kind of reward which the agent generates internally on its own, so that it can go explore more about its world,” Agrawal said. This internally generated reward signal is known in cognitive psychology as “intrinsic motivation.” The feeling you may have vicariously experienced while reading the game-play description above — an urge to reveal more of whatever’s waiting just out of sight, or just beyond your reach, just to see what happens — that’s intrinsic motivation.

Humans also respond to extrinsic motivations, which originate in the environment. Examples of these include everything from the salary you receive at work to a demand delivered at gunpoint. Computer scientists apply a similar approach called reinforcement learning to train their algorithms: The software gets “points” when it performs a desired task, while penalties follow unwanted behavior.
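To make the distinction concrete, here is a minimal sketch of how an internally generated curiosity bonus might be added to the extrinsic "points" of reinforcement learning. It is an illustration only, not the method used by the researchers quoted above. The class name `CuriousRewarder`, the `beta` weight, and the count-based novelty bonus (a reward that shrinks each time a state is revisited) are all assumptions chosen for simplicity.

```python
import math
from collections import Counter


class CuriousRewarder:
    """Hypothetical helper: extrinsic reward plus a count-based novelty bonus."""

    def __init__(self, beta=1.0):
        self.beta = beta          # assumed weight on the curiosity bonus
        self.visits = Counter()   # how often each state has been seen

    def intrinsic(self, state):
        # Record the visit, then pay a bonus that decays as the state
        # becomes familiar: beta / sqrt(number of visits so far).
        self.visits[state] += 1
        return self.beta / math.sqrt(self.visits[state])

    def total(self, state, extrinsic):
        # Extrinsic reward comes from the environment (points or penalties);
        # the intrinsic part is generated by the agent itself.
        return extrinsic + self.intrinsic(state)


rewarder = CuriousRewarder(beta=1.0)
first = rewarder.total("room_a", extrinsic=0.0)       # brand-new state
second = rewarder.total("room_a", extrinsic=0.0)      # same state, less novel
penalized = rewarder.total("room_b", extrinsic=-1.0)  # new state, but penalized
```

In this sketch, a state that earns no points from the environment still pays a reward the first time it is seen, and that reward fades on repeat visits, which nudges the agent toward unexplored territory.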