• Relightable Gaussian Codec Avatars ➡️ https://go.fb.me/gdtkjm
• URHand: Universal Relightable Hands ➡️ https://go.fb.me/1lmv7o
• RoHM: Robust Human Motion Reconstruction via Diffusion ➡️ https://www.youtube.com/embed/hX7yO2c1hEE

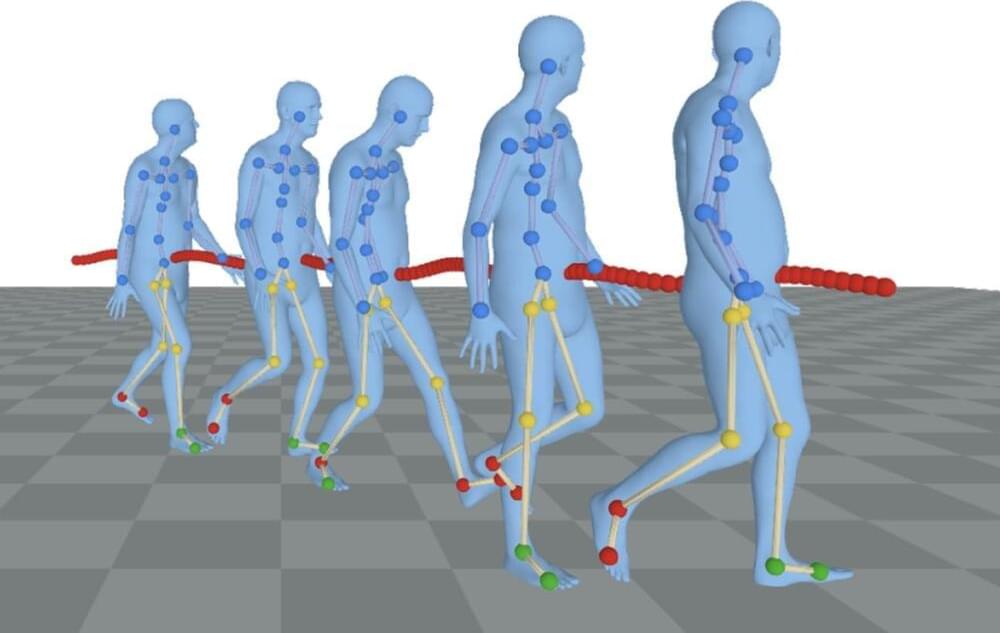

RoHM is a novel diffusion-based motion model that, conditioned on noisy and occluded input data, reconstructs complete, plausible motions in consistent global coordinates. The problem is complex because it requires addressing different tasks (denoising and infilling) in different solution spaces (local and global motion). We therefore decompose it into two sub-tasks and learn two models, one for the global trajectory and one for the local motion. To capture the correlations between the two, we introduce a novel conditioning module and combine it with an iterative inference scheme. We apply RoHM to a variety of tasks, from motion reconstruction and denoising to spatial and temporal infilling. Extensive experiments on three popular datasets show that our method outperforms state-of-the-art approaches both qualitatively and quantitatively, while being faster at test time.
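The decomposition into a trajectory model and a local-motion model, refined by iterative inference, can be illustrated with a toy sketch. This is not RoHM's implementation. The two diffusion models are replaced by a hypothetical stand-in, `infill_and_smooth`, which fills occluded frames by linear interpolation and then applies a moving average. The coupling between the models is also an assumption chosen for illustration: global joint positions are modeled as root trajectory plus root-relative local offsets. All function names are hypothetical. Only the control flow is meant to mirror the description above, namely two models that alternately condition on each other's latest output.

```python
import numpy as np


def infill_and_smooth(x, window=3):
    """Hypothetical stand-in for a learned denoiser (NOT a diffusion model).

    x: (T, C) array with NaN marking occluded frames. Missing frames are filled
    per channel by linear interpolation (edges hold the nearest observed value),
    then a centred moving average of odd length `window` is applied.
    """
    assert window % 2 == 1, "window must be odd"
    T, C = x.shape
    t = np.arange(T)
    filled = np.zeros_like(x)
    for c in range(C):
        valid = ~np.isnan(x[:, c])
        if valid.any():  # a channel that is never observed stays at zero
            filled[:, c] = np.interp(t, t[valid], x[valid, c])
    pad = window // 2
    padded = np.pad(filled, ((pad, pad), (0, 0)), mode="edge")
    kernel = np.ones(window) / window
    return np.stack(
        [np.convolve(padded[:, c], kernel, mode="valid") for c in range(C)], axis=1
    )


def trajectory_model(obs, local):
    """Hypothetical global-trajectory model conditioned on the local motion.

    obs:   (T, J, 3) observed global joint positions, NaN where a joint is occluded
           (assumes all three coordinates of an occluded joint are NaN).
    local: (T, J, 3) current estimate of root-relative joint offsets.
    Each visible joint "votes" for a root position (obs - local); the votes are
    averaged per frame, and the stand-in denoiser fills and smooths the result.
    """
    T = obs.shape[0]
    votes = obs - local
    visible = ~np.isnan(votes[..., 0])                              # (T, J)
    counts = visible.sum(axis=1)                                    # (T,)
    summed = np.where(visible[..., None], votes, 0.0).sum(axis=1)   # (T, 3)
    root = np.full((T, 3), np.nan)                                  # NaN = no visible joint
    seen = counts > 0
    root[seen] = summed[seen] / counts[seen][:, None]
    return infill_and_smooth(root)


def local_motion_model(obs, traj):
    """Hypothetical local-motion model conditioned on the global trajectory."""
    T, J, _ = obs.shape
    offsets = obs - traj[:, None, :]  # root-relative offsets, NaN where occluded
    return infill_and_smooth(offsets.reshape(T, J * 3)).reshape(T, J, 3)


def iterative_inference(obs, num_iters=3):
    """Alternate the two models so each conditions on the other's latest output."""
    assert num_iters >= 1
    T, J, _ = obs.shape
    local = np.zeros((T, J, 3))  # initial guess: every joint sits at the root
    for _ in range(num_iters):
        traj = trajectory_model(obs, local)
        local = local_motion_model(obs, traj)
    return traj, local


if __name__ == "__main__":
    T = 40
    t = np.arange(T, dtype=float)
    root = np.stack([0.1 * t, np.zeros(T), np.zeros(T)], axis=1)  # walk along x
    offsets = np.array([[0.0, 1.0, 0.0], [0.2, 0.5, 0.0], [-0.2, 0.5, 0.1]])
    gt = root[:, None, :] + offsets[None, :, :]                     # (T, 3, 3)
    obs = gt.copy()
    obs[18:22] = np.nan                                             # temporal occlusion
    traj, local = iterative_inference(obs)
    recon = traj[:, None, :] + local
    print("max error on occluded frames:", np.abs(recon[18:22] - gt[18:22]).max())
```

In this toy setup, the trajectory estimate depends on the current local offsets, and the local offsets are then recomputed relative to the latest trajectory. This is why the loop alternates between the two models. The naive stand-in has clear limits. Its edge-padded moving average biases the first and last few frames, and a joint that is never observed collapses onto the root. Those are the kinds of gaps a learned motion model is needed for.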
