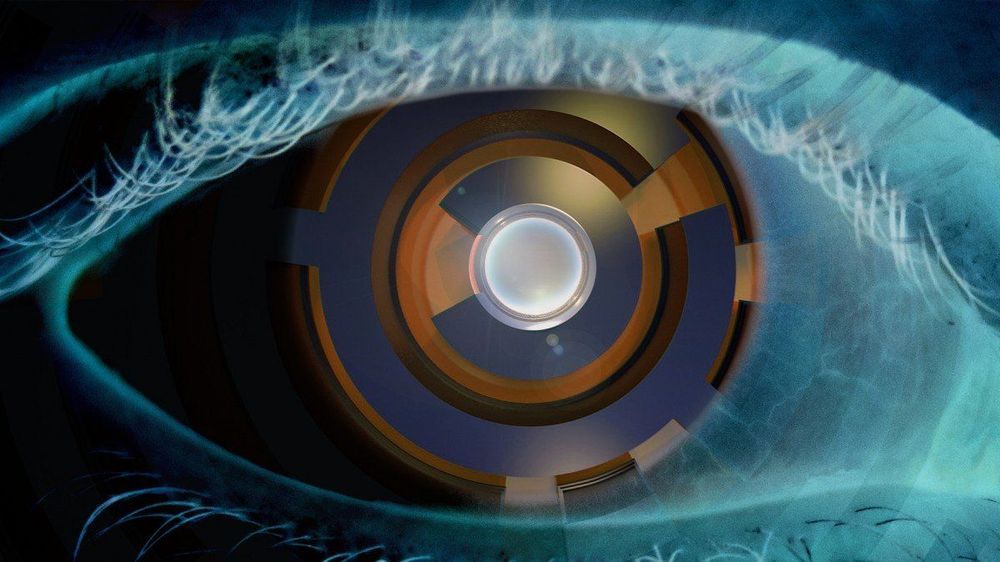

The news: A new type of artificial eye, made by combining light-sensing electronics with a neural network on a single tiny chip, can make sense of what it’s seeing in just a few nanoseconds, far faster than existing image sensors.

Why it matters: Computer vision is integral to many applications of AI, from driverless cars to industrial robots to smart sensors that act as our eyes in remote locations, and machines have become very good at responding to what they see. But most image recognition needs a lot of computing power to work. Part of the problem is a bottleneck at the heart of traditional sensors, which capture a huge amount of visual data whether or not it is useful for classifying an image. Crunching all that data slows things down.

A sensor that captures and processes an image at the same time, without converting or passing around data, makes image recognition much faster while using much less power. The design, published in Nature today by researchers at the Institute of Photonics in Vienna, Austria, mimics the way animals’ eyes pre-process visual information before passing it on to the brain.
