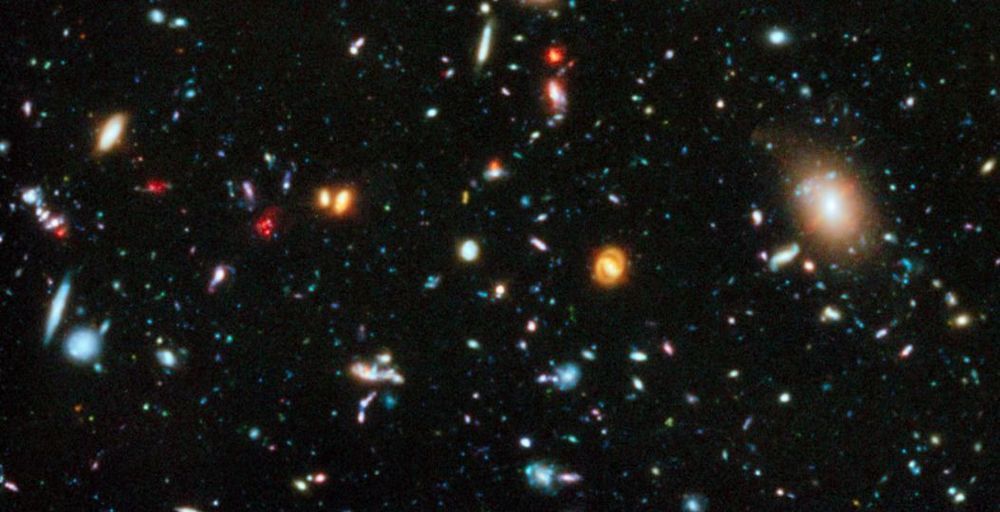

Our observable Universe is an enormous place, with some two trillion galaxies strewn across the abyss of space for tens of billions of light-years in all directions. Ever since the 1920s, when we first unambiguously demonstrated that those galaxies were well beyond the extent of the Milky Way by accurately measuring the distances to them, one fact leaped out at us: the farther away a galaxy is, on average, the more severely shifted towards the red, long-wavelength part of the spectrum its light will be.

This relationship between redshift and distance looks like a straight line when we first plot it out: the farther away you look, the greater the distant object’s redshift, with the two in direct proportion. If you measure the slope of that line, you get a value colloquially known as the Hubble constant. But it isn’t actually a constant at all, as it changes over time. Here’s the science behind why.
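First, to make "measuring the slope" concrete, here is a minimal sketch in Python. It assumes each small redshift has already been converted to a recession speed (roughly the speed of light times the redshift, an approximation that only holds nearby), and it fits a straight line through the origin. The function name `hubble_slope` and the data points are hypothetical, made up to lie exactly on a line; they are not real measurements.

```python
def hubble_slope(distances_mpc, velocities_km_s):
    """Least-squares slope of a line through the origin, v = slope * d."""
    numerator = sum(d * v for d, v in zip(distances_mpc, velocities_km_s))
    denominator = sum(d * d for d in distances_mpc)
    return numerator / denominator


# Illustrative, invented data: distances in megaparsecs, speeds in km/s.
distances = [10.0, 50.0, 100.0, 200.0]
velocities = [700.0, 3500.0, 7000.0, 14000.0]

# The slope has units of km/s per megaparsec.
slope = hubble_slope(distances, velocities)
print(f"slope = {slope:.1f} km/s/Mpc")
```

Real data scatter around the line, so an actual fit would also need measurement uncertainties; this sketch only shows what the slope means.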

In our Universe, light doesn’t simply propagate through a fixed and unchanging space, arriving at its destination with the same properties it possessed when it was emitted by the source. Instead, it must contend with an additional factor: the expansion of the Universe. This expansion of space, as you can see above, affects the properties of the light itself. In particular, as the Universe expands, the wavelength of the light passing through that space gets stretched.
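The stretching described above can be put in numbers. In the standard picture, the ratio of observed to emitted wavelength equals the factor by which the Universe has expanded while the light was traveling, and the redshift z is that ratio minus one. The sketch below assumes that relation; the function names and the example wavelengths are hypothetical, chosen only for easy arithmetic.

```python
def redshift(emitted_nm, observed_nm):
    """Redshift z from the emitted and observed wavelengths (same units)."""
    return observed_nm / emitted_nm - 1.0


def expansion_factor(z):
    """How much the Universe has stretched since the light was emitted."""
    return 1.0 + z


# Illustrative values: light emitted at 500 nm arrives at 1000 nm.
z = redshift(500.0, 1000.0)
stretch = expansion_factor(z)
print(f"z = {z}, Universe has expanded by a factor of {stretch}")
```

In this example the wavelength has doubled, so the Universe has doubled in scale since the light set out, and the redshift is 1.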
