For a robot to “learn” sign language, several areas of engineering must be combined, including artificial intelligence, neural networks and computer vision, as well as underactuated robotic hands. “One of the main new developments of this research is that we united two major areas of Robotics: complex systems (such as robotic hands) and social interaction and communication,” explains Juan Víctores, one of the researchers from the Robotics Lab in the Department of Systems Engineering and Automation at UC3M.

The scientists began by specifying, in a simulation, the position of each phalanx needed to form particular signs from Spanish Sign Language. They then attempted to reproduce these positions with the robotic hand, keeping the movements close to those a human hand could make. “The objective is for them to be similar and, above all, natural. Various types of neural networks were tested to model this adaptation, and this allowed us to choose the one that could perform the gestures in a way that is comprehensible to people who communicate with sign language,” the researchers explain.

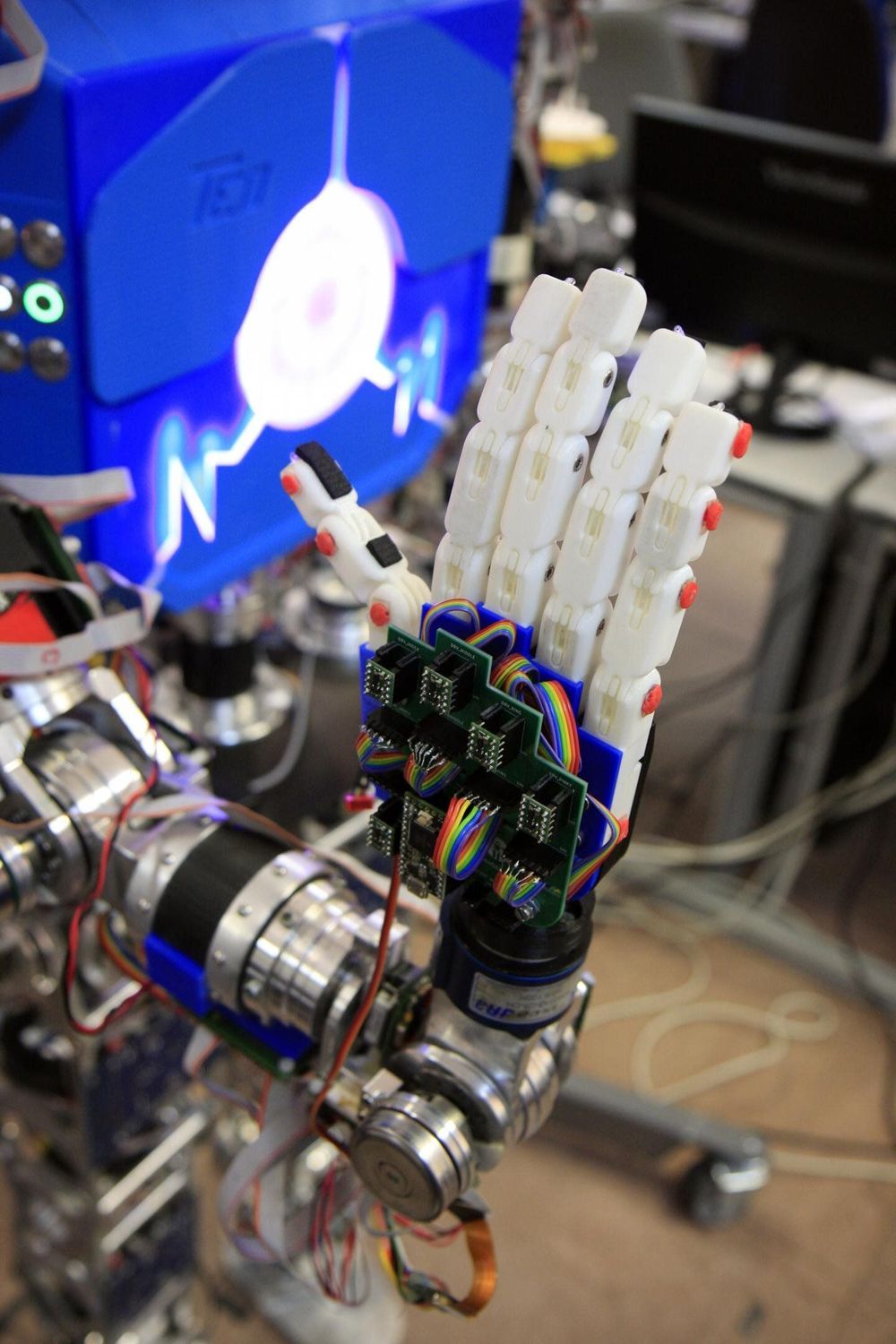

Finally, the scientists verified that the system worked by having potential end-users interact with it. “The deaf people who have been in contact with the robot have reported 80 percent satisfaction, so the response has been very positive,” says another of the researchers from the Robotics Lab, Jennifer J. Gago. The experiments were carried out with TEO (Task Environment Operator), a humanoid robot for home use developed in the Robotics Lab at UC3M.