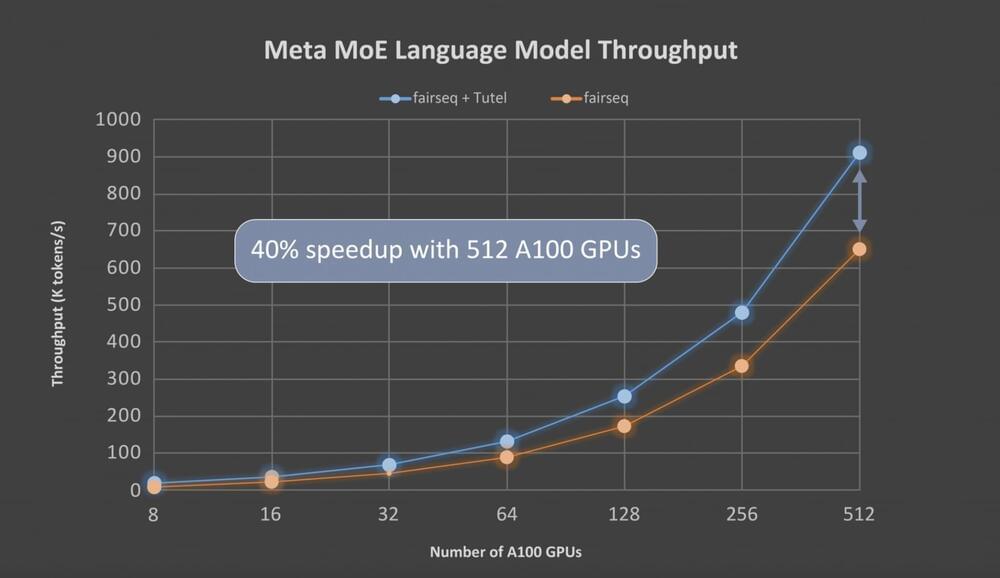

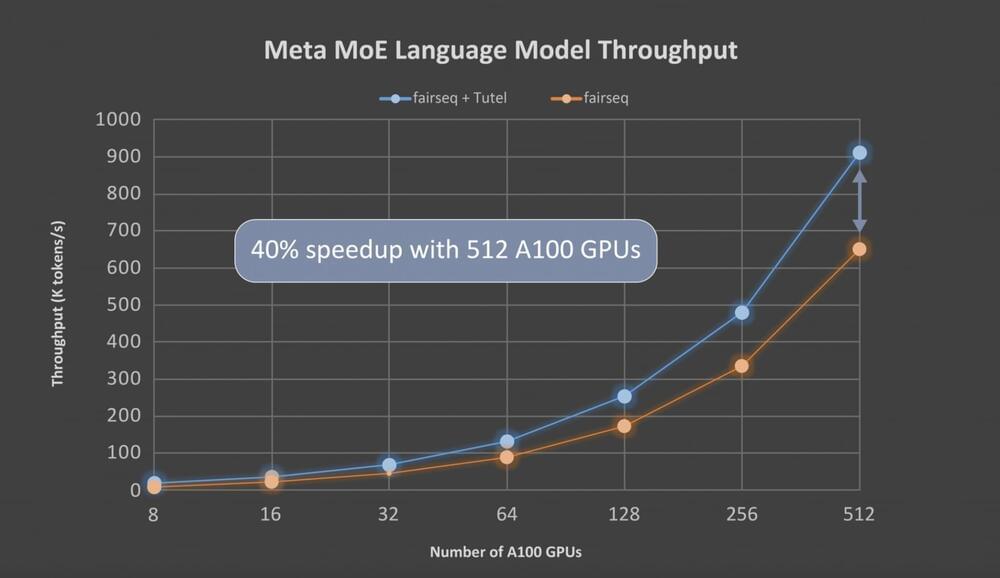

Tutel is a high-performance mixture-of-experts (MoE) library developed by Microsoft researchers to aid the development of large-scale deep neural network (DNN) models. It is highly optimized for the new Azure NDm A100 v4 series, and its diverse and flexible MoE algorithmic support lets developers across AI domains run MoE more easily and efficiently. For a single MoE layer, Tutel achieves an 8.49x speedup on one NDm A100 v4 node with 8 GPUs and a 2.75x speedup on 64 NDm A100 v4 nodes with 512 A100 GPUs, compared with state-of-the-art MoE implementations such as Meta’s Facebook AI Research Sequence-to-Sequence Toolkit (fairseq) in PyTorch.

For end-to-end performance, Tutel delivers a more than 40% speedup for Meta’s 1.1 trillion-parameter MoE language model on 64 NDm A100 v4 nodes, thanks to its optimization of all-to-all communication. On the Azure NDm A100 v4 cluster, Tutel provides broad compatibility and a comprehensive feature set to sustain this performance. Tutel is free, open-source software and has been integrated into fairseq.
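To make the all-to-all step concrete: in expert-parallel MoE training, each GPU typically hosts a subset of the experts, so every GPU must send each token to whichever GPU holds that token's chosen expert. The sketch below simulates that exchange pattern in plain Python within a single process. The function `all_to_all` and the token labels are hypothetical names chosen for illustration; this is not Tutel's API and says nothing about how Tutel optimizes the operation.

```python
def all_to_all(send_buffers):
    """Simulate an all-to-all exchange among len(send_buffers) ranks.

    send_buffers[src][dst] is the chunk that rank `src` sends to rank `dst`.
    The result recv satisfies recv[dst][src] == send_buffers[src][dst],
    i.e. every rank ends up with one chunk from every other rank.
    """
    world_size = len(send_buffers)
    for row in send_buffers:
        if len(row) != world_size:
            raise ValueError("each rank needs exactly one chunk per destination")
    return [
        [send_buffers[src][dst] for src in range(world_size)]
        for dst in range(world_size)
    ]


# Hypothetical setup: 3 ranks, with expert i living on rank i.
# Each rank has already grouped its local tokens by destination expert.
send = [
    [["a0"], ["a1", "a2"], []],   # rank 0: 1 token for expert 0, 2 for expert 1
    [["b0"], [], ["b1"]],         # rank 1
    [[], ["c0"], ["c1", "c2"]],   # rank 2
]

recv = all_to_all(send)

# Rank 1 (expert 1) now holds the tokens routed to it from every rank.
tokens_for_expert_1 = [tok for chunk in recv[1] for tok in chunk]
```

After the experts run, a second all-to-all with the same pattern returns the results to the ranks that own the original tokens, which is why this collective tends to dominate communication cost in MoE layers.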

Tutel complements existing high-level MoE solutions such as fairseq and FastMoE by focusing on optimizing MoE-specific computation and all-to-all communication, and by offering diverse and flexible algorithmic MoE support. Its straightforward interface makes it simple to combine with other MoE systems. Developers can also use the Tutel interface to build standalone MoE layers into their own DNN models from the ground up, gaining immediate access to its highly optimized MoE features.
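For readers new to MoE, the following is a minimal sketch of what a single MoE layer computes: a gate scores each token against every expert, the top-k experts process the token, and their outputs are mixed using the gate weights. It is written in plain Python for clarity and does not use Tutel. The names `moe_layer`, `gate_weights`, and the toy experts are hypothetical, and renormalizing the top-k gate probabilities is one common convention rather than a description of Tutel's behavior.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_indices(scores, k):
    """Indices of the k largest scores, highest first."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def moe_layer(tokens, gate_weights, experts, k=2):
    """Apply a top-k gated mixture of experts to each token.

    tokens:       list of feature vectors (lists of floats)
    gate_weights: one weight vector per expert (a linear gate, assumed here)
    experts:      list of callables mapping a vector to a same-length vector
    """
    outputs = []
    for x in tokens:
        # Gate: one logit per expert, turned into probabilities.
        logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in gate_weights]
        probs = softmax(logits)
        chosen = top_k_indices(probs, k)
        norm = sum(probs[e] for e in chosen)
        # Combine: weighted sum of the chosen experts' outputs.
        y = [0.0] * len(x)
        for e in chosen:
            weight = probs[e] / norm
            y = [acc + weight * v for acc, v in zip(y, experts[e](x))]
        outputs.append(y)
    return outputs


# Toy experts standing in for feed-forward networks.
experts = [
    lambda x: [2.0 * v for v in x],
    lambda x: [v + 1.0 for v in x],
    lambda x: [-v for v in x],
]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
tokens = [[3.0, 0.0], [0.0, 3.0]]

routed = moe_layer(tokens, gate_weights, experts, k=1)
```

With `k=1`, the first token is routed only to the doubling expert and the second only to the add-one expert, so only a fraction of the experts runs per token. That sparsity is what lets MoE models grow their parameter count without a proportional increase in compute per token.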