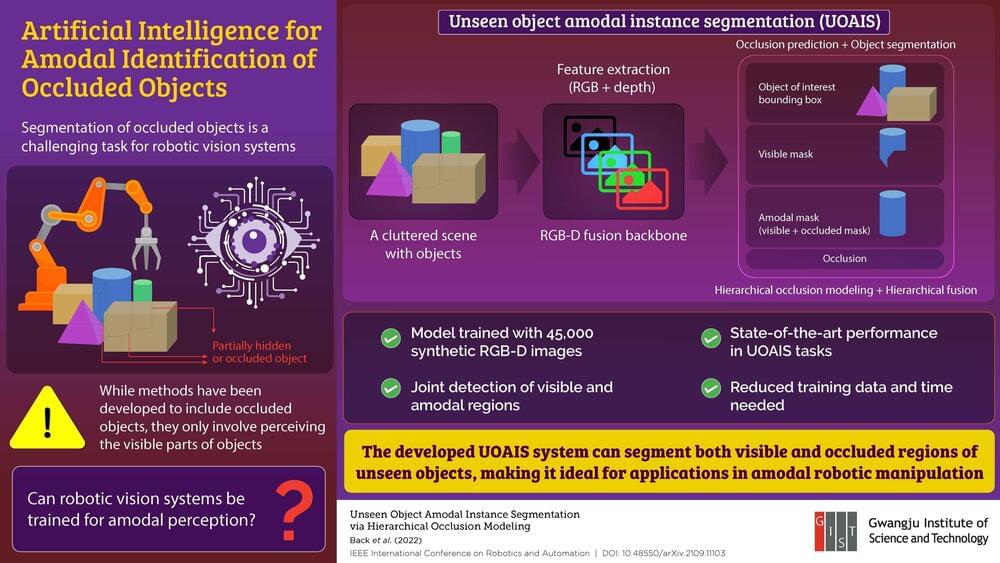

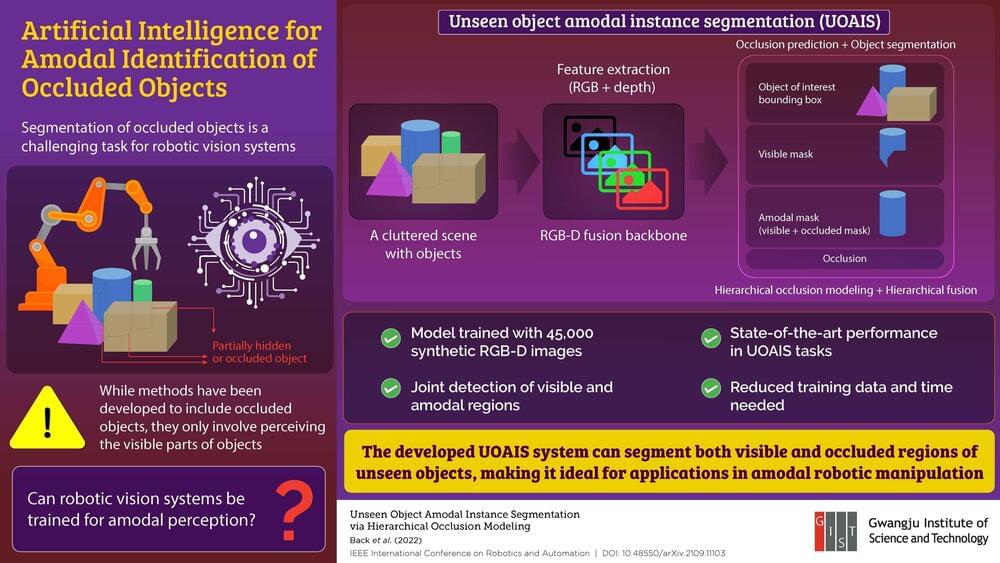

When artificial intelligence systems encounter scenes where objects are not fully visible, they have to make estimates based only on the visible parts of the objects. This partial information leads to detection errors, and large amounts of training data are required to recognize such scenes correctly. Now, researchers at the Gwangju Institute of Science and Technology have developed a framework that allows robot vision to detect such objects successfully, in the same way that we perceive them.

Robotic vision has come a long way, reaching a level of sophistication that supports complex and demanding tasks such as autonomous driving and object manipulation. However, it still struggles to identify individual objects in cluttered scenes where some objects are partially or completely hidden behind others. Typically, when dealing with such scenes, robotic vision systems are trained to identify the occluded object based only on its visible parts. But such training requires large datasets of objects and can be tedious.

Associate Professor Kyoobin Lee and Ph.D. student Seunghyeok Back from the Gwangju Institute of Science and Technology (GIST) in Korea found themselves facing this problem when they were developing an artificial intelligence system to identify and sort objects in cluttered scenes. “We expect a robot to recognize and manipulate objects they have not encountered before or been trained to recognize. In reality, however, we need to manually collect and label data one by one as the generalizability of deep neural networks depends highly on the quality and quantity of the training dataset,” says Mr. Back.