Artificial intelligence has entered our daily lives. First, it was ChatGPT. Now, it’s AI-generated pizza and beer commercials. While we can’t trust AI to be perfect, it turns out that sometimes we can’t trust ourselves with AI either.

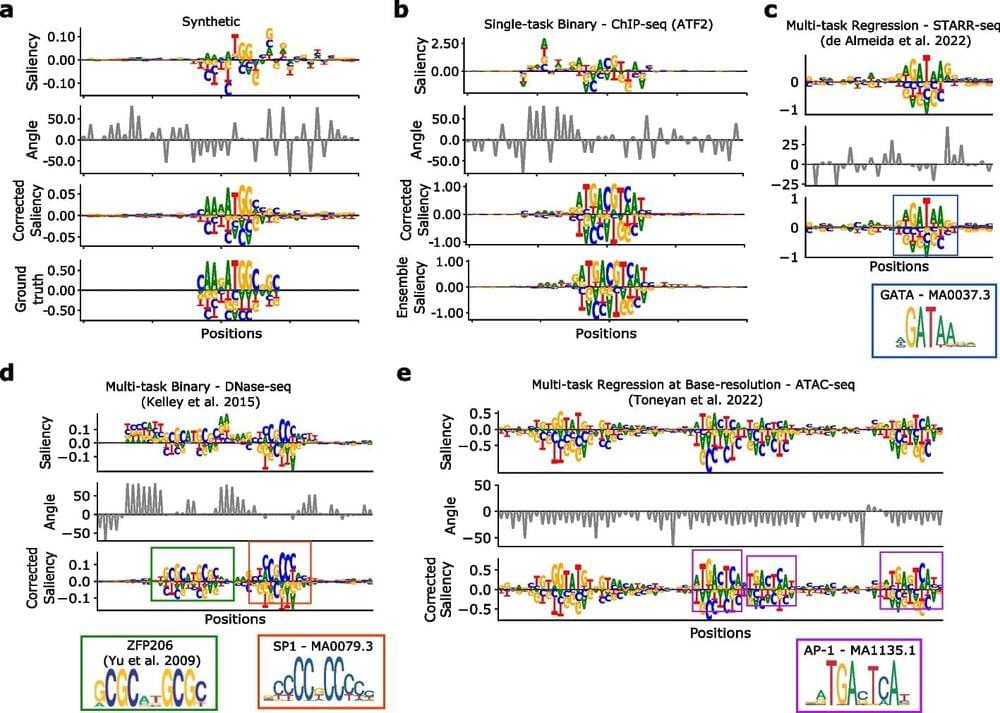

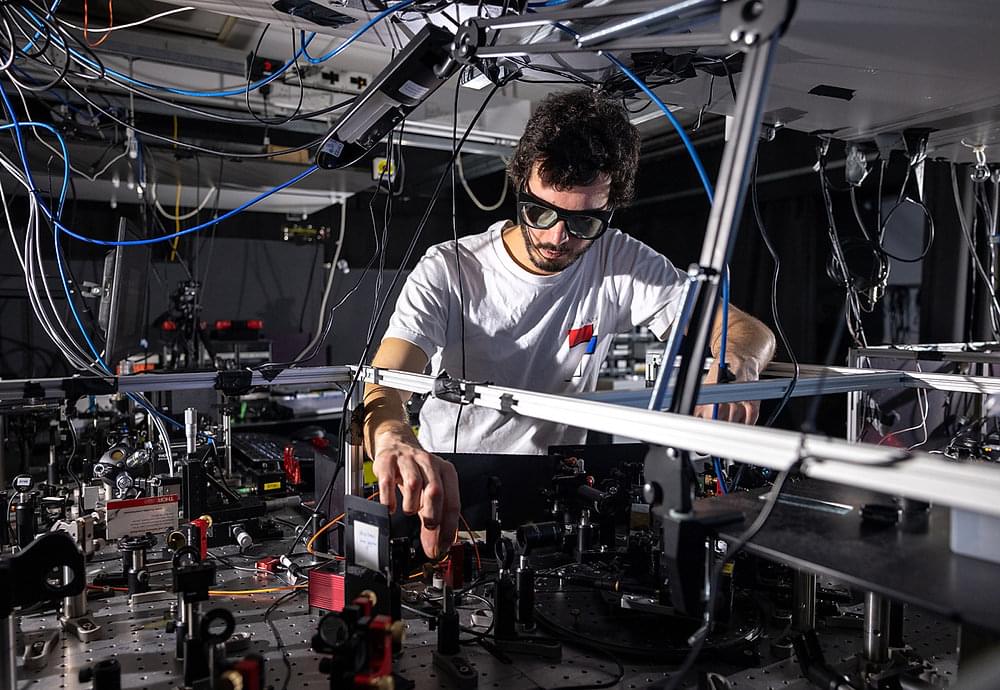

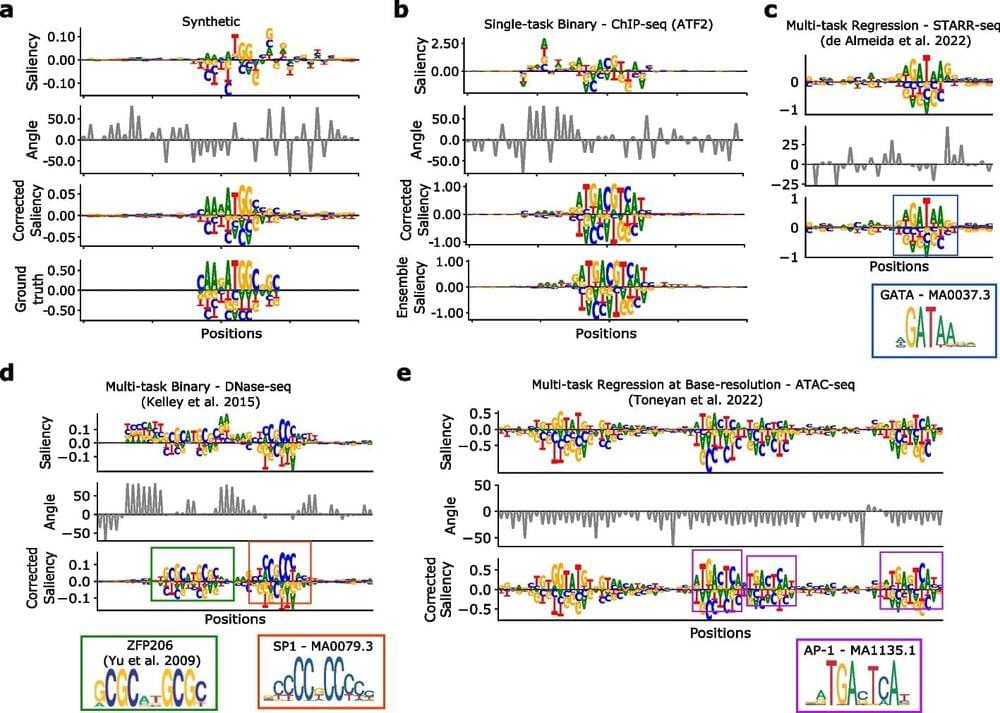

Cold Spring Harbor Laboratory (CSHL) Assistant Professor Peter Koo has found that scientists using popular computational tools to interpret AI predictions are picking up too much “noise,” or extra information, when analyzing DNA. And he’s found a way to fix this. Now, with just a couple of new lines of code, scientists can get more reliable explanations out of powerful AIs known as deep neural networks. That means they can continue chasing down genuine DNA features. Those features might just signal the next breakthrough in health and medicine. But scientists won’t see the signals if they’re drowned out by too much noise.

So, what causes the meddlesome noise? It’s a mysterious and invisible source, something like digital “dark matter.” Physicists and astronomers believe most of the universe is filled with dark matter, a material that exerts gravitational effects but that no one has yet seen. Similarly, Koo and his team discovered that the data AI is trained on lacks critical information, leading to significant blind spots. Even worse, those blind spots get factored in when interpreting AI predictions of DNA function. The study is published in the journal Genome Biology.