Four years ago, Google started to see the real potential for deploying neural networks to support a large number of new services. During that time it was also clear that, given the existing hardware, if people did voice searches for three minutes per day or dictated to their phone for short periods, Google would have to double the number of datacenters just to run machine learning models.

The need for a new architectural approach was clear, Google distinguished hardware engineer, Norman Jouppi, tells The Next Platform, but it required some radical thinking. As it turns out, that’s exactly what he is known for. One of the chief architects of the MIPS processor, Jouppi has pioneered new technologies in memory systems and is one of the most recognized names in microprocessor design. When he joined Google over three years ago, there were several options on the table for an inference chip to churn services out from models trained on Google’s CPU and GPU hybrid machines for deep learning but ultimately Jouppi says he never excepted to return back to what is essentially a CISC device.

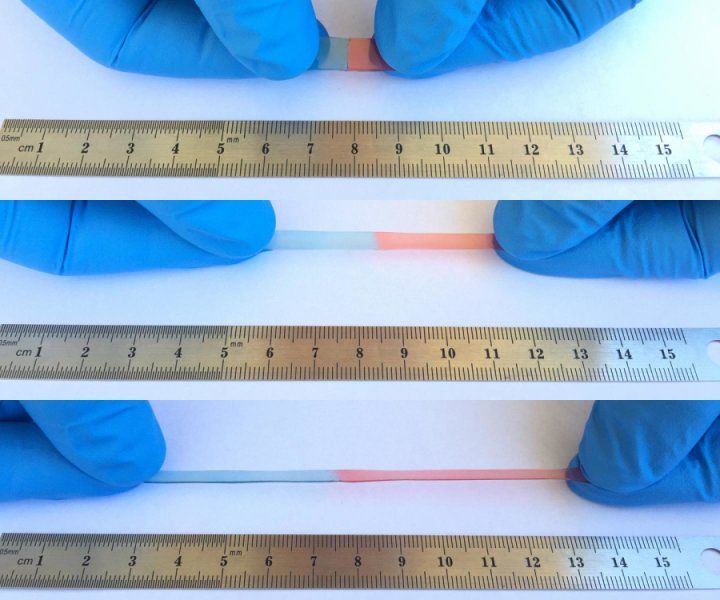

We are, of course, talking about Google’s Tensor Processing Unit (TPU), which has not been described in much detail or benchmarked thoroughly until this week. Today, Google released an exhaustive comparison of the TPU’s performance and efficiencies compared with Haswell CPUs and Nvidia Tesla K80 GPUs. We will cover that in more detail in a separate article so we can devote time to an in-depth exploration of just what’s inside the Google TPU to give it such a leg up on other hardware for deep learning inference. You can take a look at the full paper, which was just released, and read on for what we were able to glean from Jouppi that the paper doesn’t reveal.

Continue reading “First In-Depth Look at Google’s TPU Architecture” »