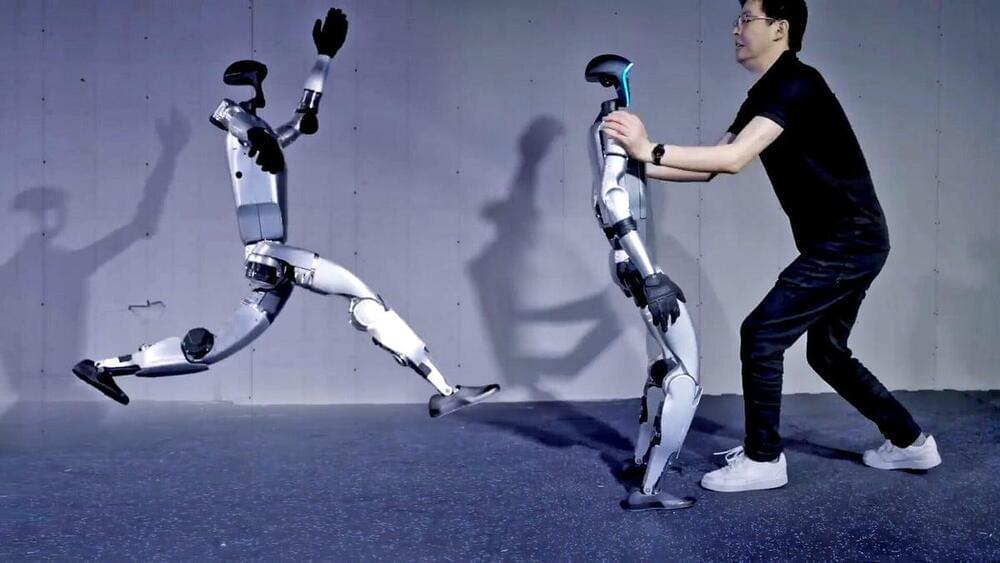

The pace of engineering and science is speeding up, rapidly leading us toward a “Technological Singularity”: a point in time when superintelligent machines achieve and improve so much, so fast, that traditional humans can no longer operate at the forefront. However, if all goes well, human beings may still flourish greatly in their own ways in this unprecedented era.

If humanity is going to not only survive but prosper as the Singularity unfolds, we will need to understand that the Technological Singularity is an Experiential Singularity as well, and rapidly evolve not only our technology but also our level of compassion, ethics and consciousness.

The aim of The Consciousness Explosion is to help curious and open-minded readers wrap their brains around these dramatic emerging changes, and to empower them with tools to cope and thrive as those changes unfold.