I recently saw the film Transcendence with a close friend. If you can get beyond Johnny Depp’s siliconised mugging of Marlon Brando and Rebecca Hall’s waddling through corridors of quantum computers, Transcendence provides much to think about. Even though Christopher Nolan of Inception fame was involved in the film’s production, the pyrotechnics are relatively subdued – at least by today’s standards. While this fact alone seems to have disappointed some viewers, it nevertheless enables you to focus on the dialogue and plot. The film is never boring, even though nothing about it is particularly brilliant. However, the film stays with you, and that’s a good sign. Mark Kermode at the Guardian was one of the few reviewers who did the film justice.

The main character, played by Depp, is ‘Will Caster’ (aka Ray Kurzweil, but perhaps also an allusion to Hans Castorp in Thomas Mann’s The Magic Mountain). Caster is an artificial intelligence researcher based at Berkeley who, with his wife Evelyn Caster (played by Hall), is trying to devise an algorithm capable of integrating all of Earth’s knowledge to solve all of its problems. (Caster calls this ‘transcendence’ but admits in the film that he means ‘singularity’.) They are part of a network of researchers doing similar things. Although British actors such as Hall and Paul Bettany (who plays a key colleague while sporting a strange Euro-English accent) are among the main players, the film itself appears to take place entirely within the borders of the United States. This is a bit curious, since a running assumption of the film is that if you suspect a malevolent consciousness has been uploaded to the internet, then you should shut the whole thing down. Yet in this film at least, ‘the whole thing’ is limited to American cyberspace.

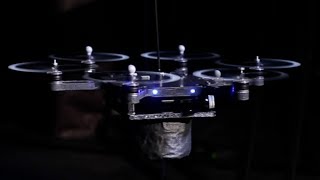

Before turning to two more general issues concerning the film, which I believe may have left both critics and viewers unsatisfied, let me draw attention to a couple of nice touches. First, the leader of ‘Revolutionary Independence from Technology’ (RIFT), whose actions propel the film’s plot, explains that she used to be an advanced AI researcher who defected after witnessing the endless screams of a rhesus monkey while its entire brain was being digitally uploaded. Once I suspended my disbelief in the occurrence of such an event, I appreciated it as a clever plot device for showing how one might quickly convert from being radically pro- to anti-AI, perhaps presaging future real-world targets for animal rights activists. Second, I liked the way in which quantum computing was highlighted and represented in the film. Again, what we see is entirely speculative, yet it conveys the promise that one day it may be possible to read nature as pure information that can be assembled as needed to produce whatever one wants, thereby rendering our nanotechnology capacities virtually limitless. 3D printing may be seen as a toy version of this dream.

Now on to the two more general issues, which viewers might regard as faults but which I think are better treated as what the Greeks called aporias (i.e. open questions):


JESSE McKINLEY — NYTimes