Stanford University PhD candidate Song Han, who works under advisor and networking pioneer Dr. Bill Dally, responded in a soft-spoken and thoughtful way to the question of whether the coupled software and hardware architecture he developed might change the world.

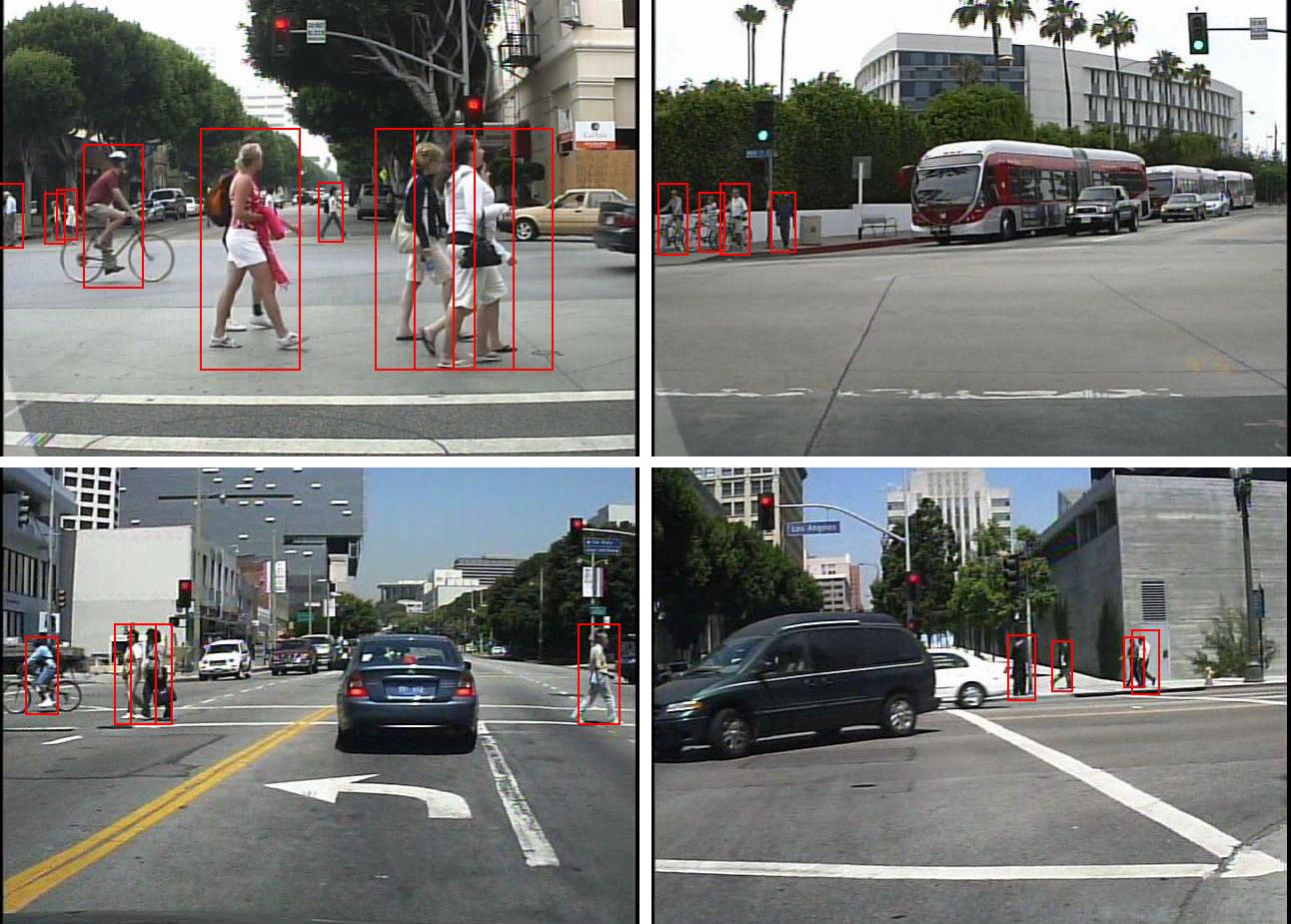

In fact, instead of answering the question directly, he pointed to the range of applications, both present and future, that will be driven by near real-time inference for complex deep neural networks. It was a roundabout way of showing not just why what he is working toward is revolutionary, but why the gaps he is filling have kept neural network-fed services relatively static.

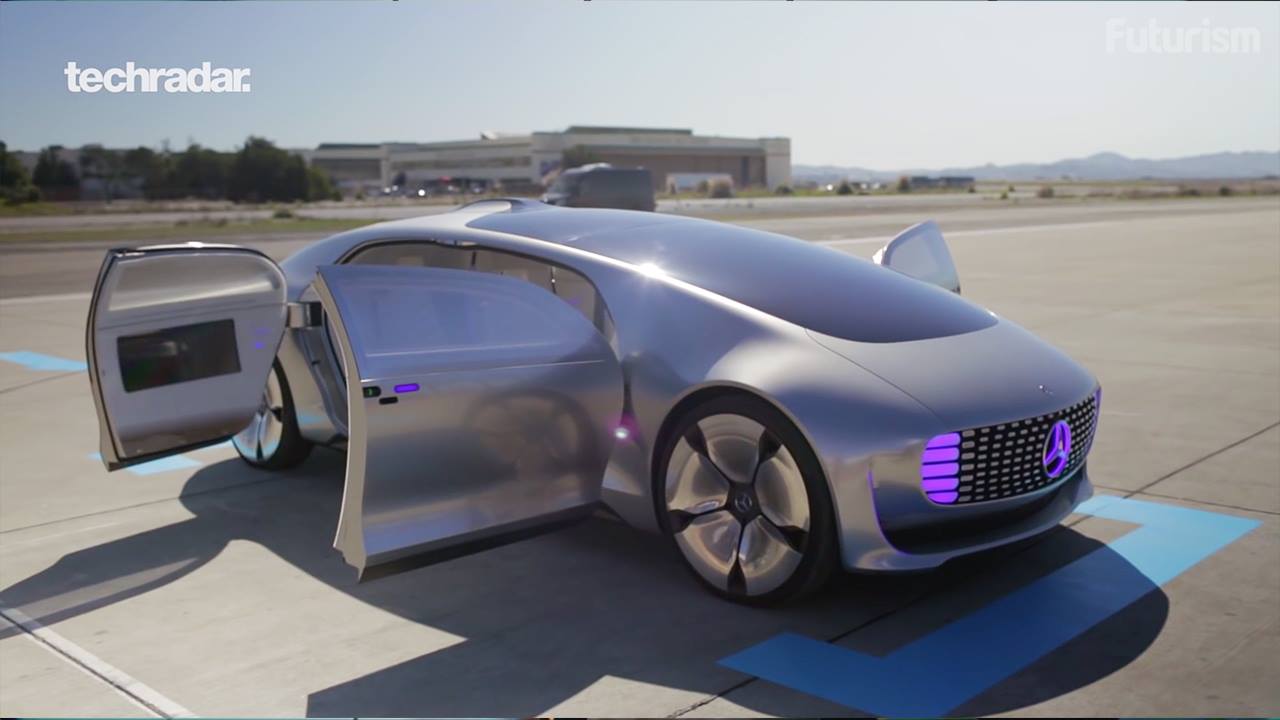

One large barrier stands in the way of the future Han considers imminent, and the existing range of neural network-driven applications, which power all aspects of the consumer economy and, over time, the enterprise, makes it more pressing. The barrier is less a broad technical problem than one of efficiency. These applications are often delivered on lightweight, power-aware devices, so how much computation can be effectively packed into the memory of such devices, and at what cost to battery life or overall power? Devices aside, the same concerns are even more pertinent at larger scale in the datacenter, where a large share of the inference is handled.
