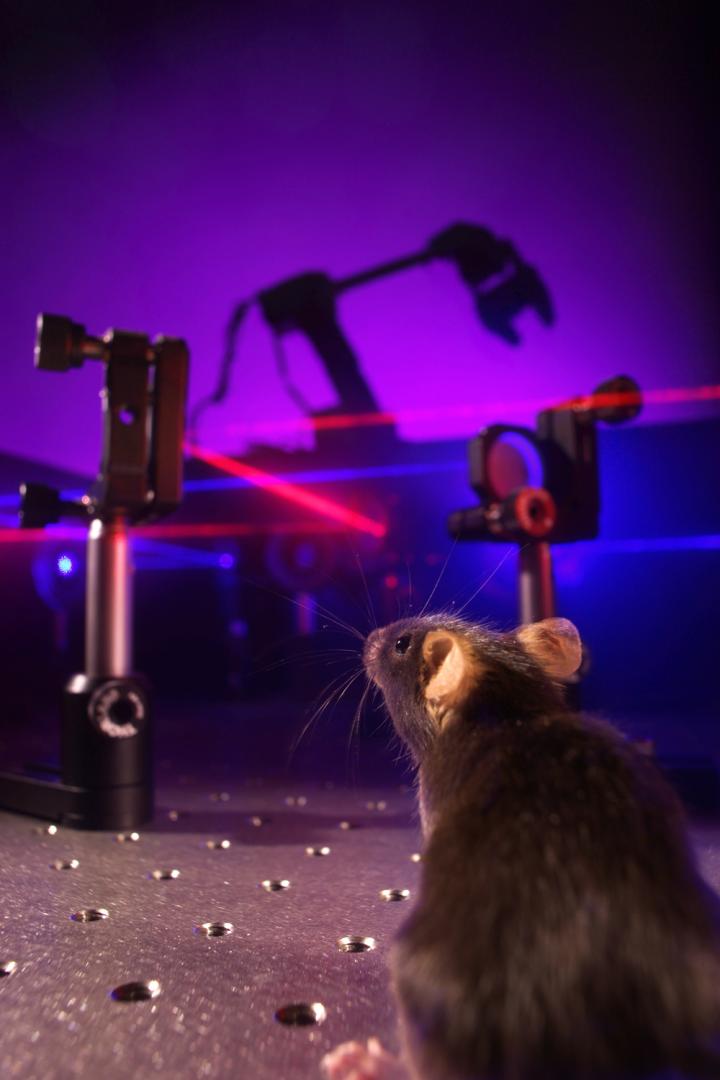

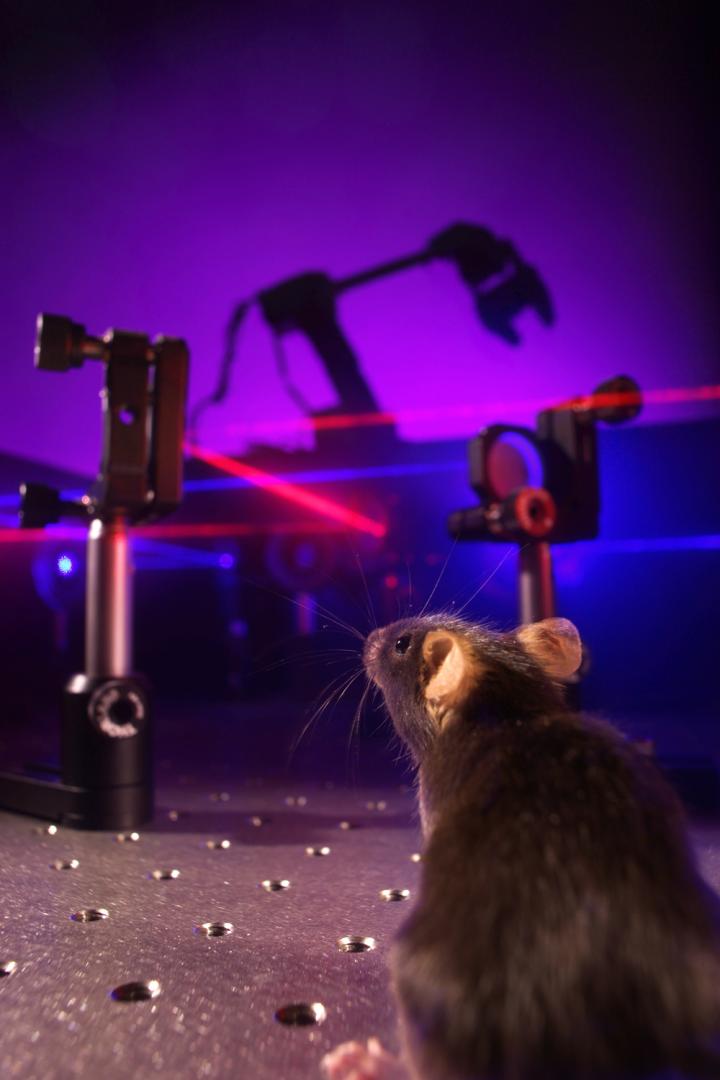

Since the early 1970s, scientists have been developing brain-machine interfaces, mainly for use in neural prostheses for paralyzed patients and amputees. A prosthetic limb directly controlled by brain activity can partially restore lost motor function. This is achieved by decoding neuronal activity recorded with electrodes and translating it into robotic movements. However, such systems have limited precision because the artificial limb provides no sensory feedback. Neuroscientists at the University of Geneva (UNIGE), Switzerland, asked whether it was possible to transmit this missing sensation back to the brain by stimulating neural activity in the cortex. They discovered not only that it was possible to create an artificial sensation of neuroprosthetic movements, but also that the underlying learning process occurs very rapidly. These findings, published in the scientific journal Neuron, were obtained using modern imaging and optical stimulation tools, offering an innovative alternative to the classical electrode approach.

Motor function is at the heart of all behavior and allows us to interact with the world. Replacing a lost limb with a robotic prosthesis is therefore the subject of much research, yet successful outcomes are rare. Why is that? Until now, brain-machine interfaces have relied largely on visual perception: the user controls the robotic arm by looking at it. The direct flow of information between brain and machine thus remains unidirectional. However, movement perception is based not only on vision but mostly on proprioception, the sensation of where the limb is located in space. “We have therefore asked whether it was possible to establish a bidirectional communication in a brain-machine interface: to simultaneously read out neural activity, translate it into prosthetic movement and reinject sensory feedback of this movement back into the brain”, explains Daniel Huber, professor in the Department of Basic Neurosciences of the Faculty of Medicine at UNIGE.

Providing artificial sensations of prosthetic movements
