Jun 14, 2020

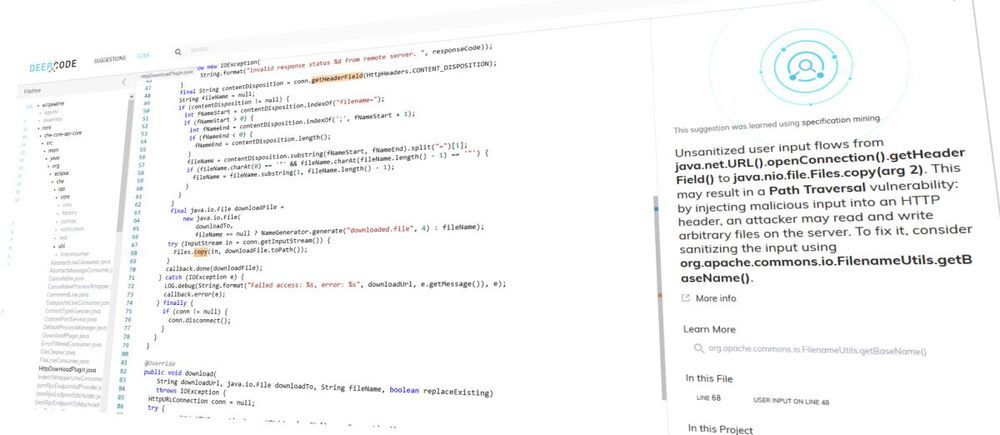

DeepCode learns from GitHub project data to give developers AI-powered code reviews

Posted by Quinn Sena in category: robotics/AI

Often, code reviews involve collaboration between the original code authors, their peers, and managers, with a view toward finding obvious errors before the code reaches a more advanced phase. And the bigger a project is, the more lines of code there are to review, which makes reviewing time-consuming. There are options for analyzing source code for errors, such as the static analysis tool Lint, but these are often not holistic in scope: they focus on a smaller, targeted set of “annoying and repeatable stylistic issues, formatting and minor issues,” according to Paskalev.

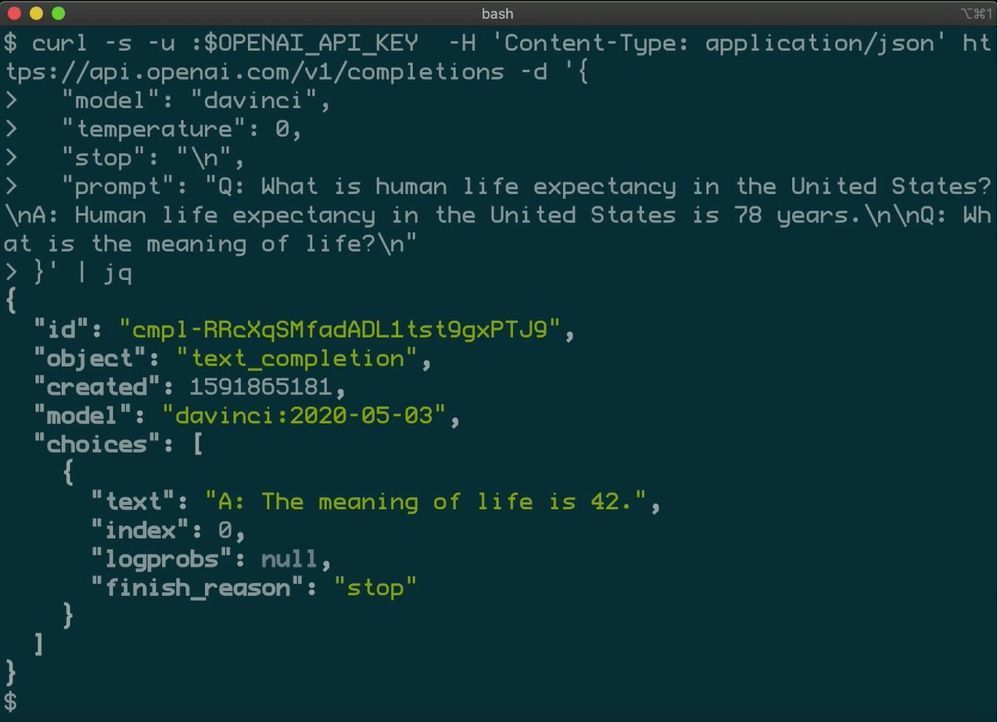

DeepCode’s selling point is that it covers a broader range of problems, including vulnerabilities such as cross-site scripting and SQL injection, while it also promises to establish the intent behind the code, rather than spotting simple syntax mistakes. Underpinning all this are machine learning (ML) systems that are trained on billions of lines of code from public open source projects and that constantly learn and update their knowledge base.

Though DeepCode can ingest code from any source code repository, Paskalev told VentureBeat that the public knowledge base today contains mostly GitHub repositories.