Qualcomm today announced its RB5 reference design platform for the robotics and intelligent drone ecosystem. As robotics continues to advance, Qualcomm’s latest platform should help drive the field's next step with added intelligence and connectivity. The company has combined its 5G connectivity and AI-focused processing with a flexible peripherals architecture based on what it calls “mezzanine” modules. The new Qualcomm RB5 platform promises to accelerate the robotics design and development process with a full suite of hardware, software and development tools. The company is making big promises for the RB5 platform, and if current levels of ecosystem engagement are any indicator, the platform will have ample opportunities to prove itself.

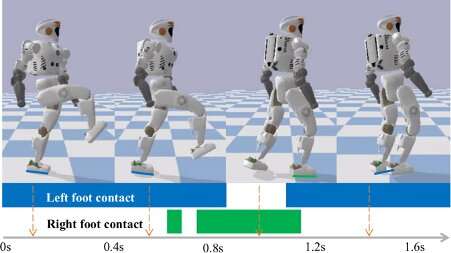

The platform targets robot and drone designs meant for enterprise, industrial and professional service applications. At its heart is Qualcomm’s QRB5165 system-on-chip (SoC). The QRB5165 is derived from the Snapdragon 865 processor used in mobile devices, but is customized for robotic applications with expanded camera and image signal processor (ISP) capabilities to support additional camera sensors, industrial-grade temperature and security ratings, and a non-Package-on-Package (PoP) configuration option.

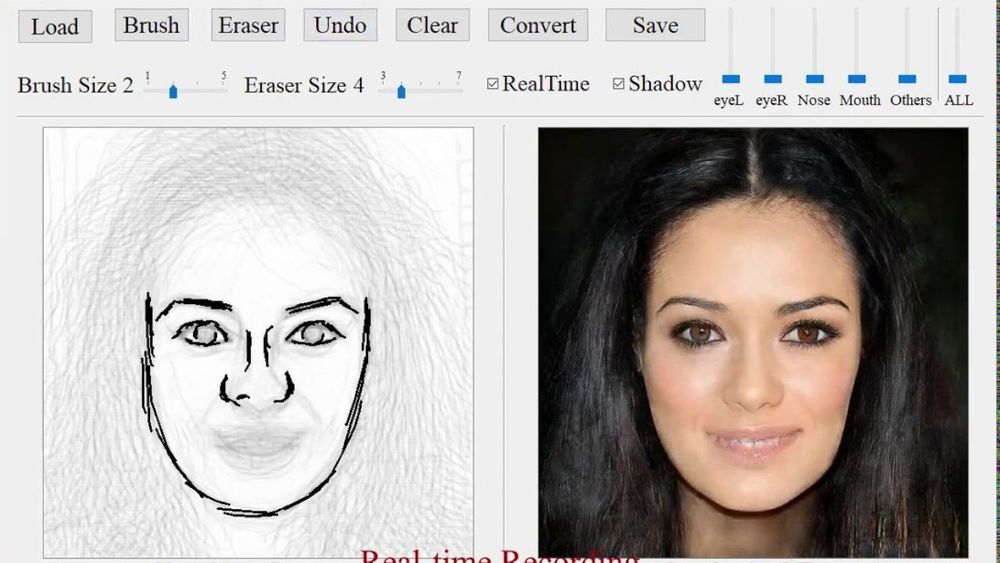

To bring artificial intelligence and machine learning to bear in these applications, the chip is rated for 15 tera operations per second (TOPS) of AI performance. Additionally, because it is critical that robots and drones can “see” their surroundings, the architecture supports up to seven concurrent cameras and includes a dedicated computer vision engine meant to provide enhanced video analytics. Given the sheer amount of information that the platform can generate, process and analyze, it also supports a communications module offering 4G and 5G connectivity. In particular, the addition of 5G will give robots and drones high-speed, low-latency data connectivity.