Archive for the ‘information science’ category: Page 295

Jul 8, 2016

IARPA wants big data intel tools that can predict the future

Posted by Karen Hurst in category: information science

IARPA is interested in proposals spanning four broad topics – anticipatory intelligence, analysis, operations and collection – to better integrate intelligence.

Jul 8, 2016

Microsoft Testing DNA’s Data Storage Ability With Record-Breaking Results

Posted by Karen Hurst in categories: computing, genetics, information science, internet, quantum physics

Biocomputing, living-circuit computing, and gene circuitry are the longer-term future beyond quantum. Here is another of the many building blocks.

The tiny molecule responsible for transmitting the genetic data for every living thing on earth could be the answer to the IT industry’s quest for a more compact storage medium. In fact, researchers from Microsoft and the University of Washington recently succeeded in storing 200 MB of data on a few strands of DNA, occupying a small dot on a test tube many times smaller than the tip of a pencil.

The Internet in a Shoebox.

Jun 30, 2016

DARPA Completes Integration of Live Data Feeds Into Space Surveillance Network; Jeremy Raley Comments

Posted by Karen Hurst in categories: information science, military, robotics/AI, satellites, surveillance

The Defense Advanced Research Projects Agency has finished its work to integrate live data feeds from several sources into the U.S. Space Surveillance Network run by the Air Force in an effort to help space monitoring teams check when satellites are at risk.

SSN is a global network of 29 military radars and optical telescopes, and DARPA added seven space data providers to the network to help monitor the space environment under its OrbitOutlook program, the agency said Wednesday.

DARPA plans to test the automated algorithms developed to determine relevant data from the integrated feed in order to help SSA experts carry out their mission.

Jun 28, 2016

No need in supercomputers

Posted by Karen Hurst in categories: business, cybercrime/malcode, information science, particle physics, quantum physics, robotics/AI, singularity, supercomputing

It is great that they didn’t have to use a supercomputer for their prescribed, lab-controlled experiments. However, limiting QC to a supercomputer and experimental computations only is a big mistake; I cannot stress this enough. QC is a new digital infrastructure that changes our communications and cyber security, and will eventually (in the years to come) provide consumers, businesses, and governments with the performance they will need for AI, biocomputing, and the Singularity.

A group of physicists from the Skobeltsyn Institute of Nuclear Physics at Lomonosov Moscow State University has learned to use a personal computer for calculations of complex equations of quantum mechanics that are usually solved with the help of supercomputers. The PC does the job much faster. An article about the results of the work has been published in the journal Computer Physics Communications.

Senior researchers Vladimir Pomerantcev and Olga Rubtsova, working under the guidance of Professor Vladimir Kukulin (SINP MSU), were able to use an ordinary desktop PC with a GPU to solve complicated integral equations of quantum mechanics that were previously solved only with powerful, expensive supercomputers. According to Vladimir Kukulin, the personal computer does the job much faster: in 15 minutes it does work that normally requires 2–3 days of supercomputer time.

Jun 28, 2016

DARPA approaches industry for new battlefield network algorithms and network protocols

Posted by Karen Hurst in categories: computing, information science, military, mobile phones

Very nice.

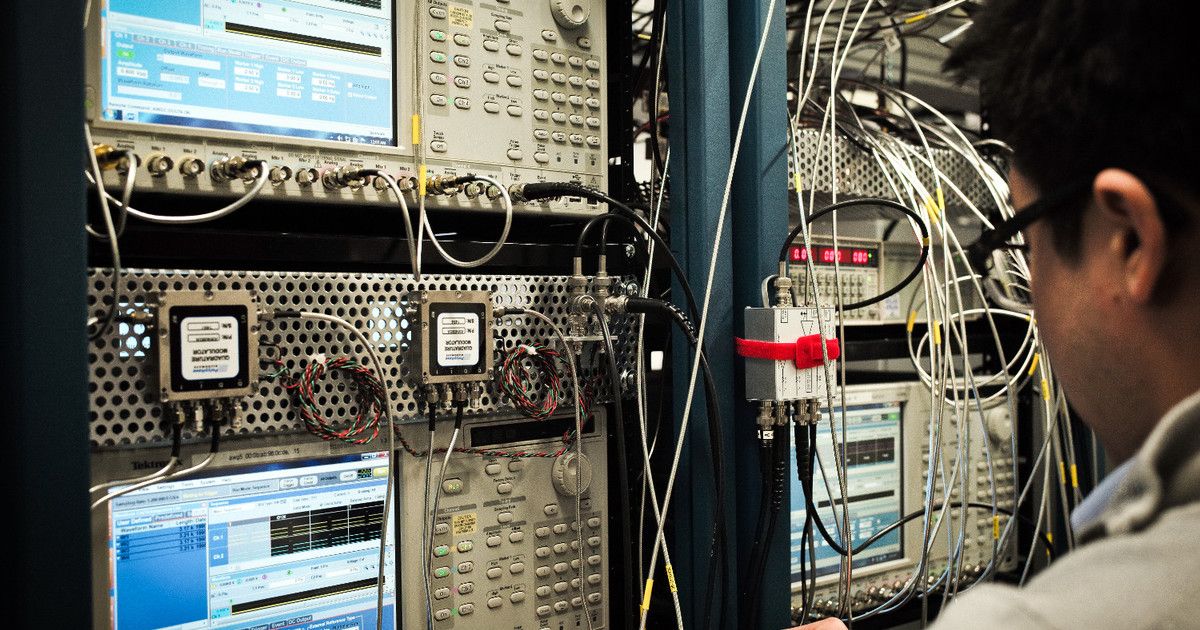

ARLINGTON, Va., 27 June 2016. U.S. military researchers are asking industry for new algorithms and protocols for large, mission-aware, computer, communications, and battlefield network systems that physically are dispersed over large forward-deployed areas.

Officials of the U.S. Defense Advanced Research Projects Agency (DARPA) in Arlington, Va., issued a broad agency announcement on Friday (DARPA-BAA-16-41) for the Dispersed Computing project, which seeks to boost application and network performance of dispersed computing architectures by orders of magnitude with new algorithms and protocol stacks.

Jun 23, 2016

Genetic algorithms can improve quantum simulations

Posted by Klaus Baldauf in categories: computing, genetics, information science, quantum physics

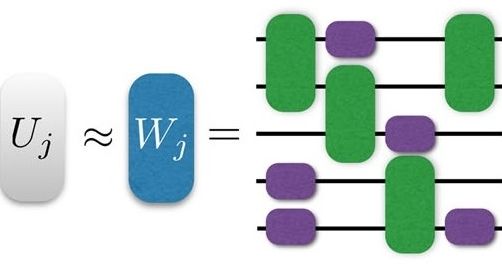

(Phys.org)—Inspired by natural selection and the concept of “survival of the fittest,” genetic algorithms are flexible optimization techniques that can find the best solution to a problem by repeatedly selecting for and breeding ever “fitter” generations of solutions.

Now for the first time, researchers Urtzi Las Heras et al. at the University of the Basque Country in Bilbao, Spain, have applied genetic algorithms to digital quantum simulations and shown that genetic algorithms can reduce quantum errors, and may even outperform existing optimization techniques. The research, which is published in a recent issue of Physical Review Letters, was led by Ikerbasque Prof. Enrique Solano and Dr. Mikel Sanz in the QUTIS group.

In general, quantum simulations can provide a clearer picture of the dynamics of systems that are impossible to understand using conventional computers due to their high degree of complexity. Whereas computers calculate the behavior of these systems, quantum simulations approximate or “simulate” the behavior.
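
The select-and-breed loop described above can be sketched in a few lines of Python. This is a generic, minimal genetic algorithm on a toy problem (maximizing the number of 1 bits in a string), not the method the researchers applied to quantum simulations. The function names, population size, mutation rate, and the toy fitness function are all assumptions chosen for illustration.

```python
import random


def fitness(bits):
    # Toy objective (an assumption for illustration): count the 1 bits.
    return sum(bits)


def evolve(n_bits=20, pop_size=30, generations=40, mutation_rate=0.02, seed=0):
    """Run a minimal genetic algorithm and return (best, history).

    history holds the best fitness seen at the start of each generation,
    plus the best fitness of the final population.
    """
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        # Rank candidate solutions from fittest to least fit.
        pop.sort(key=fitness, reverse=True)
        history.append(fitness(pop[0]))
        # Selection: only the fitter half is allowed to breed.
        parents = pop[: pop_size // 2]
        # Elitism: carry the current best over unchanged.
        children = [parents[0][:]]
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            # One-point crossover: splice the two parents together.
            cut = rng.randrange(1, n_bits)
            child = a[:cut] + b[cut:]
            # Mutation: flip each bit with a small probability.
            child = [bit ^ 1 if rng.random() < mutation_rate else bit
                     for bit in child]
            children.append(child)
        pop = children
    best = max(pop, key=fitness)
    history.append(fitness(best))
    return best, history


if __name__ == "__main__":
    best, history = evolve()
    print("best fitness per generation:", history)
    print("best solution:", best)
```

Because the fittest individual is copied unchanged into each new generation, the best fitness never decreases from one generation to the next; selection, crossover, and mutation supply the variation that lets it improve.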

Jun 21, 2016

Measuring Planck’s constant, NIST’s watt balance brings world closer to new kilogram

Posted by Karen Hurst in categories: information science, particle physics, quantum physics

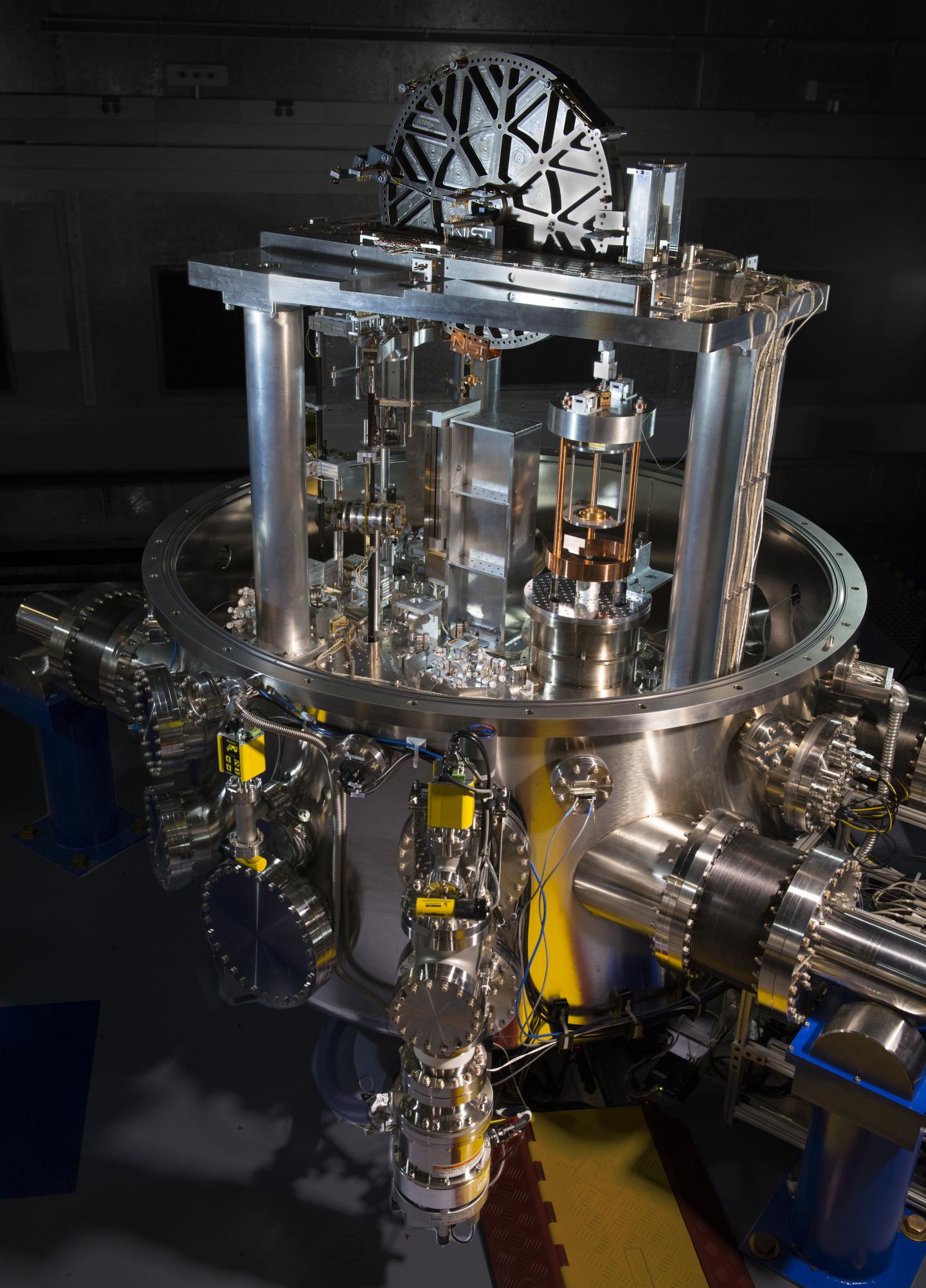

A high-tech version of an old-fashioned balance scale at the National Institute of Standards and Technology (NIST) has just brought scientists a critical step closer toward a new and improved definition of the kilogram. The scale, called the NIST-4 watt balance, has conducted its first measurement of a fundamental physical quantity called Planck’s constant to within 34 parts per billion — demonstrating the scale is accurate enough to assist the international community with the redefinition of the kilogram, an event slated for 2018.

The redefinition, which is not intended to alter the value of the kilogram’s mass but rather to define it in terms of unchanging fundamental constants of nature, will have little noticeable effect on everyday life. But it will remove a nagging uncertainty in the official kilogram’s mass, owing to its potential to change slightly in value over time, such as when someone touches the metal artifact that currently defines it.

Planck’s constant lies at the heart of quantum mechanics, the theory that is used to describe physics at the scale of the atom and smaller. Quantum mechanics began in 1900 when Max Planck described how objects radiate energy in tiny packets known as “quanta.” The amount of energy is proportional to a very small quantity called h, known as Planck’s constant, which subsequently shows up in almost all equations in quantum mechanics. The value of h, according to NIST’s new measurement, is 6.62606983 × 10⁻³⁴ kg·m²/s, with an uncertainty of plus or minus 22 in the last two digits.
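
As a rough consistency check (an illustrative calculation, not part of the NIST announcement), "plus or minus 22 in the last two digits" can be read as an absolute uncertainty of 0.00000022 × 10⁻³⁴ kg·m²/s. Dividing by the measured value gives the relative uncertainty:

$$
\frac{\Delta h}{h} = \frac{0.00000022 \times 10^{-34}}{6.62606983 \times 10^{-34}} = \frac{22}{662606983} \approx 3.3 \times 10^{-8}
$$

That is roughly 33 parts per billion, in line with the figure of 34 parts per billion quoted above; the small gap presumably comes from rounding in the reported digits.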

Jun 21, 2016

Tech Companies Mull Storing Data in DNA

Posted by Karen Hurst in categories: biotech/medical, information science

As conventional storage technologies struggle to keep up with big data, interest grows in a biological alternative.

Jun 20, 2016

DARPA wants to design an army of ultimate automated data scientists

Posted by Karen Hurst in categories: information science, internet, neuroscience, robotics/AI

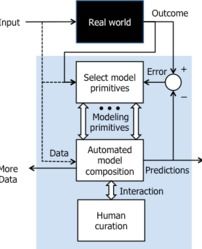

Because of a plethora of data from sensor networks, Internet of Things devices and big data resources combined with a dearth of data scientists to effectively mold that data, we are leaving many important applications – from intelligence to science and workforce management – on the table.

It is a situation the researchers at DARPA want to remedy with a new program called Data-Driven Discovery of Models (D3M). The goal of D3M is to develop algorithms and software to help overcome the data-science expertise gap by enabling non-experts to construct complex empirical models through automation of large parts of the model-creation process. If successful, researchers using D3M tools will effectively have access to an army of “virtual data scientists,” DARPA stated.
