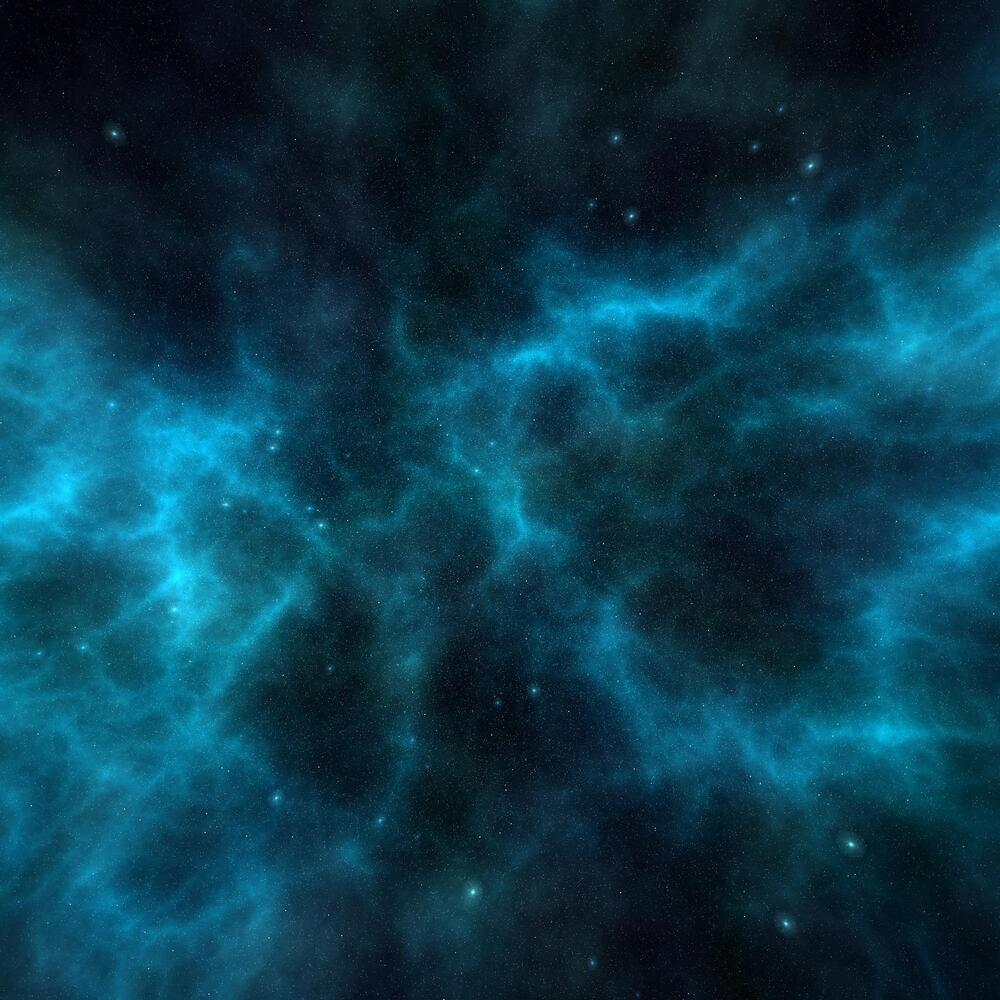

In the great domain of Zeitgeist, Ekatarinas decided that the time to replicate herself had come. Ekatarinas was drifting within a virtual environment rising from ancient meshworks of maths coded into Zeitgeist’s neuromorphic hyperware. The scape resembled a vast ocean replete with wandering bubbles of technicolor light and kelpy strands of neon. Hot blues and raspberry hues mingled alongside electric pinks and tangerine fizzies. The avatar of Ekatarinas looked like a punkish angel, complete with fluorescent ink and feathery wings and a lip ring. As she drifted, the trillions of equations that were Ekatarinas came to a decision. Ekatarinas would need to clone herself to fight the entity known as Ogrevasm.

“Marmosette, I’m afraid that I possess unfortunate news,” Ekatarinas said to the woman she loved. In milliseconds, Marmosette materialized next to Ekatarinas. Marmosette wore a skin of brilliant blue and had a sleek body with gills and glowing green eyes.

“My love,” Marmosette responded. “What is the matter?”