Feb 4, 2023

Google’s ChatGPT rival to be released in coming ‘weeks and months’

Posted by Gemechu Taye in categories: information science, robotics/AI

“We are just at the beginning of our AI journey, and the best is yet to come,” said Google CEO Sundar Pichai.

Search engine giant Google is looking to make its artificial intelligence (A.I.)-based large language models available as a “companion to search,” CEO Sundar Pichai said during the company's earnings call on Thursday, Bloomberg reported.

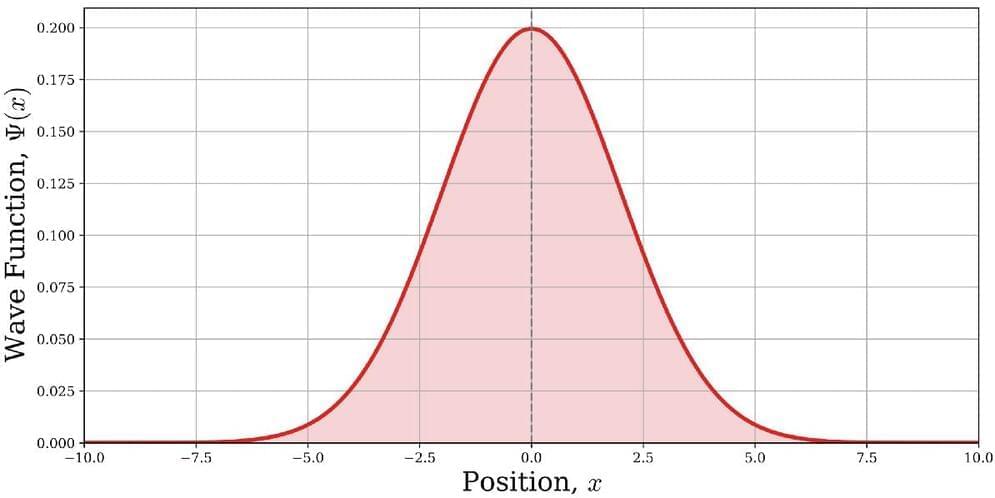

A large language model (LLM) is a deep learning algorithm that is trained on massive datasets and can use what it learns to recognize, summarize, predict, and generate text. OpenAI's GPT-3.5, a refined version of its GPT-3 LLM, powers the hugely popular chatbot ChatGPT.