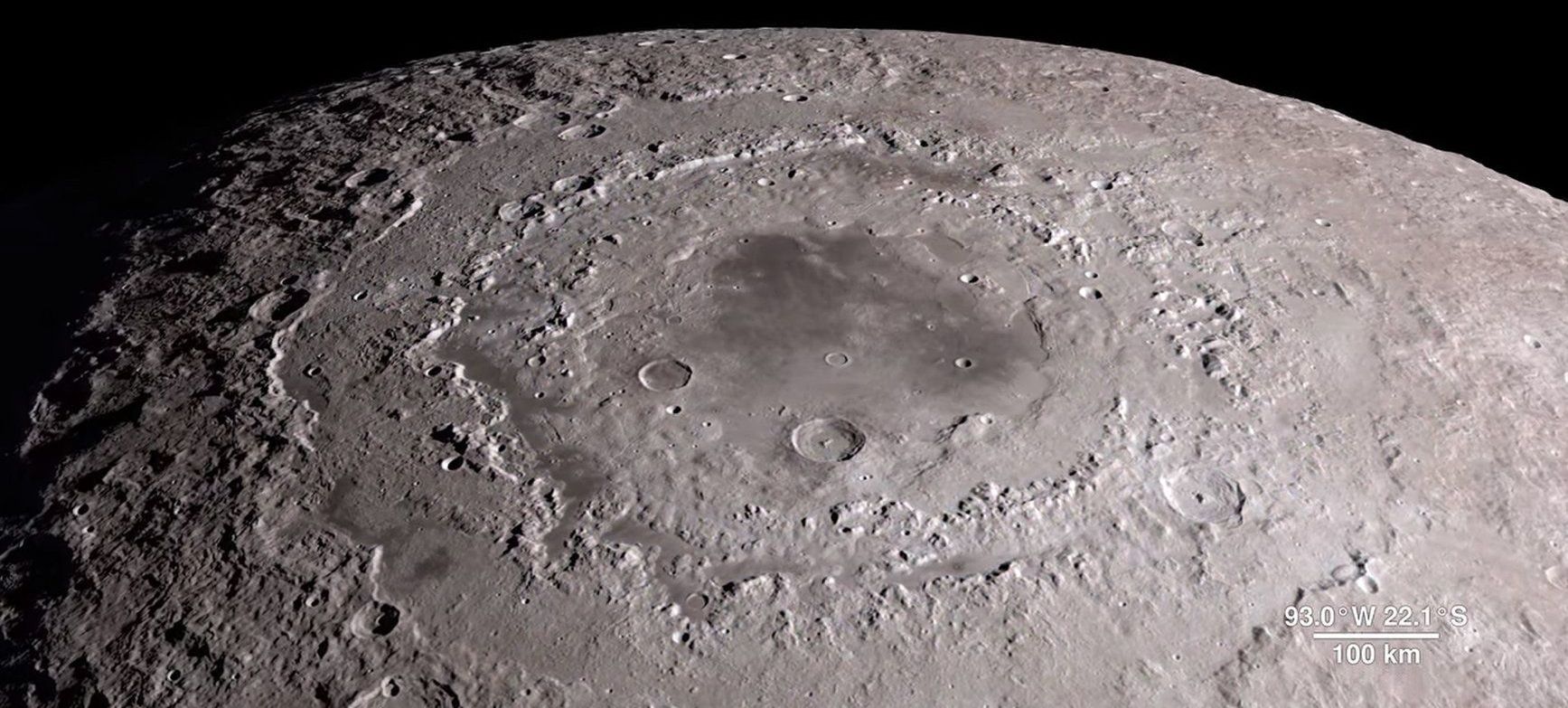

A new look at the moon via 4K video fly-throughs from LRO data and imaging. The finale is a drop down to Taurus-Littrow to see a highly magnified image of the…Apollo 17 LM descent stage. Stunning! #NASA #Apollo #LunarReconnaissanceOrbiter #moon https://www.space.com/40274-nasa-moon-in-4k-video-tour.html…

Images from NASA’s Lunar Reconnaissance Orbiter (LRO) are not only helping planners prepare for future human missions to the moon but are also revealing new information about the moon’s evolution and structure.

A new NASA video, posted on YouTube, features more than half a dozen locations of interest in stunning 4K resolution, much of it courtesy of LRO data. NASA also highlighted the individual sites in a Tumblr post that delves deeper into their geology, morphology and significance.

LRO has been circling the moon since 2009 and has made a range of discoveries at Earth’s closest large celestial neighbor.