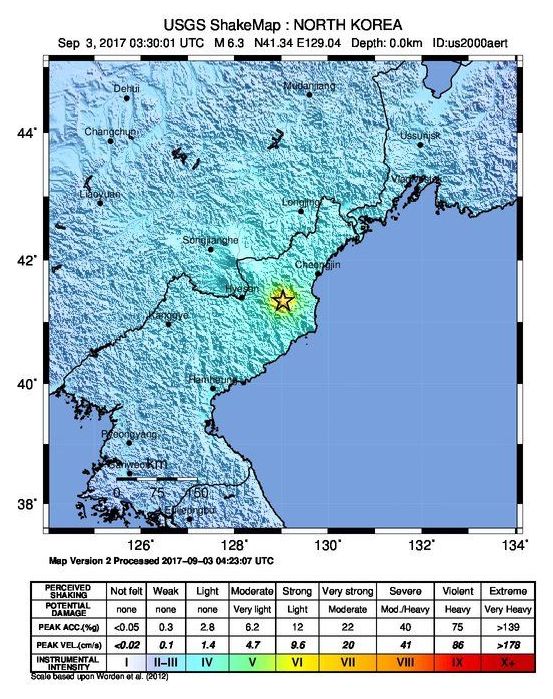

This grim vision of a possible future comes from the latest studies of how nuclear war could alter the world's climate. They build on long-standing work on a ‘nuclear winter’, the severe global cooling that researchers predict would follow a major nuclear war, such as an exchange of thousands of bombs between the United States and Russia. But much smaller nuclear conflicts, which are more likely to occur, could also have devastating effects around the world.

As geopolitical tensions rise among nuclear-armed states, scientists are modelling the global impact of nuclear war.