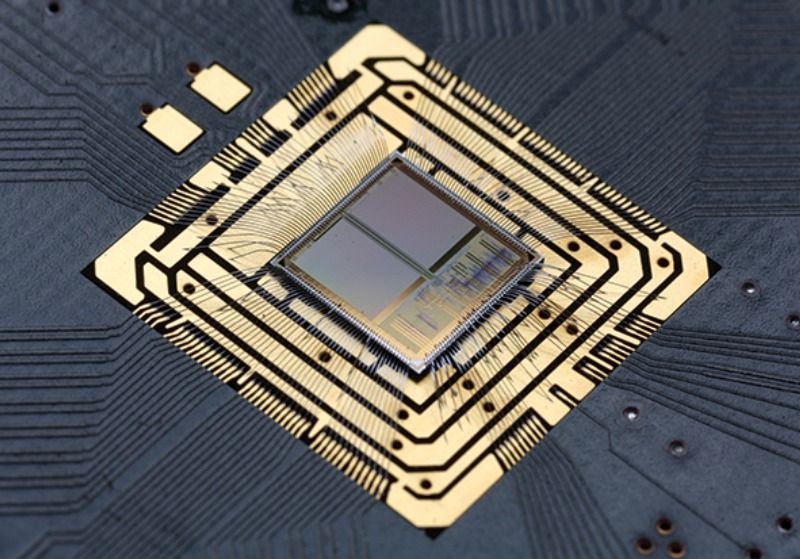

Building a Silicon Brain

Computer chips based on biological neurons may help simulate larger and more-complex brain models.

“If we don’t study the mistakes of the future, we’re doomed to repeat them the first time :(” — Ken M, comedian.

[Editor’s Note: Today’s blog post is an excerpt from Mr. Robert J. Hranek’s short story entitled “Angry Engineer,” submitted to the 2019 Mad Scientist Science Fiction Writing Contest. The underlying premise of this contest was that, following months of strained relations and covert hostility with its neighbor Otso, Donovia launched offensive combat operations against Otso on 17 March 2030. Donovia is a wealthy nation that is a near-peer strategic competitor of the United States. The U.S. is a close ally of Otso and is compelled to intervene due to treaty obligations and historical ties. Among the many future innovations addressed in his short story, Mr. Hranek includes a “pre-mortem” in the form of two dozen lessons learned, identifying potential “mistakes of the future” regarding the Battle for Otso, so that we’re not “doomed to repeat them the first time!” Enjoy!]

The U.S. responded to Donovia’s invasion of Otso by initiating combat operations against the aggressors on 1 April 2030 — April Fools’ Day. Thousands of combatants died on both sides, mostly on ships; hundreds more were wounded, primarily from the land battle, and an unverifiable number of casualties occurred worldwide due to the sabotage of power grids and other infrastructure. An accurate civilian count was impossible in the chaos of reestablishing power, computer, and financial systems worldwide.

Bruce Damer is a living legend and international man of mystery – specifically, the mystery of our cosmos, which he has devoted his life to exploring: researching the origins of life, simulating artificial life in computers, deriving amazing new plans for asteroid mining, and cultivating his ability to receive scientific inspiration from “endotripping” (in which he stimulates his brain’s own release of psychoactive compounds known to increase functional connectivity between brain regions). He’s about to work with Google to adapt his origins-of-life research to simulated models of the increasingly exciting hot springs origin hypothesis he’s been working on with Dave Deamer of UC Santa Cruz for the last several years. And he’s been traveling around the world experimenting with thermal pools, getting extremely close to actually creating new living systems in situ as evidence for their model. Not to mention his talks with numerous national and private space agencies to take the S.H.E.P.H.E.R.D. asteroid mining scheme into space to kickstart the division and reproduction of our biosphere among/between the stars…

The biggest Rubik’s Cube… ever, being solved by a computer.

Please watch the new updated video instead (unless you really enjoy YouTube’s AudioSwap music): https://www.youtube.com/watch?v=0cedyW6JdsQ

Enjoy!!!

*I FORGOT THE NAME OF THE PROGRAM, I HAD IT A LONG TIME AGO!

The only program I can find (but it is not the same one as the video) is ACube 3 available here:

http://software.rubikscube.info/JACube/index.html

But I can’t test it because it’s not working on my computer.

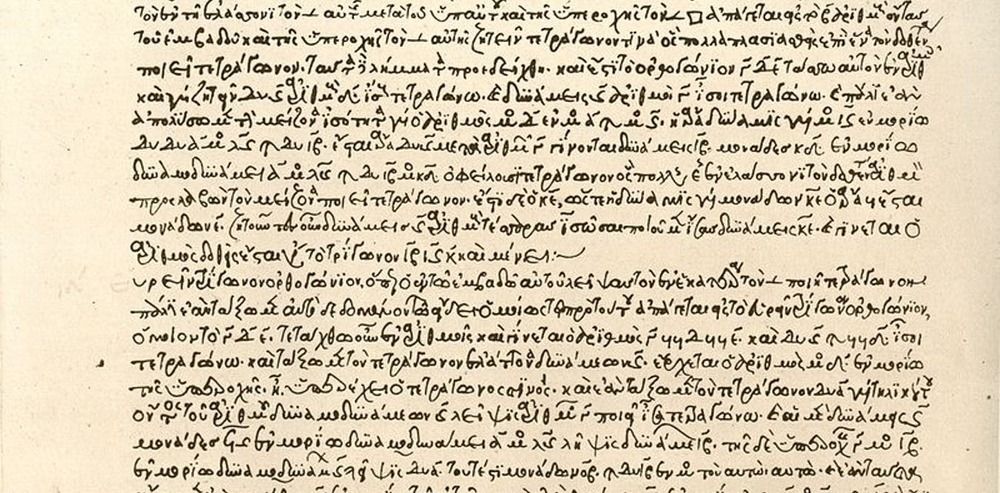

Andrew Wiles’ proof of Fermat’s Last Theorem is a famous example. Pierre de Fermat claimed in 1637 – in the margin of a copy of “Arithmetica,” no less – to have proved that the Diophantine equation xⁿ + yⁿ = zⁿ has no positive-integer solutions for n greater than 2, but offered no justification. When Wiles proved it over 300 years later, mathematicians immediately took notice. If Wiles had developed a new idea that could solve Fermat, then what else could that idea do? Number theorists raced to understand Wiles’ methods, generalizing them and finding new consequences.

No single method exists that can solve all Diophantine equations. Instead, mathematicians cultivate various techniques, each suited for certain types of Diophantine problems but not others. So mathematicians classify these problems by their features or complexity, much like biologists might classify species by taxonomy.
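To make the Fermat equation xⁿ + yⁿ = zⁿ concrete, here is a minimal brute-force sketch in Python. It is only an illustration, not one of the techniques number theorists actually use: the function name `fermat_solutions` and the search bound `limit` are made up for this example, and a finite search can only show that no solutions exist within the bound, never prove the theorem.

```python
from itertools import combinations_with_replacement


def fermat_solutions(n, limit):
    """Return all (x, y, z) with 1 <= x <= y <= limit and x**n + y**n == z**n.

    Hypothetical helper for illustration only; it searches a finite range.
    """
    # Map each n-th power back to its base so z can be looked up directly.
    # Since x**n + y**n <= 2 * limit**n, any matching z is at most 2 * limit.
    powers = {z ** n: z for z in range(1, 2 * limit + 1)}
    solutions = []
    for x, y in combinations_with_replacement(range(1, limit + 1), 2):
        z = powers.get(x ** n + y ** n)
        if z is not None:
            solutions.append((x, y, z))
    return solutions


if __name__ == "__main__":
    # n = 2 gives the familiar Pythagorean triples, such as (3, 4, 5).
    print(fermat_solutions(2, 20))
    # n = 3 finds nothing in this range, consistent with Fermat's claim.
    print(fermat_solutions(3, 50))
```

For n = 2 the search turns up plenty of solutions, while for n = 3 it comes back empty. That contrast is the whole puzzle: showing that the list stays empty for every n greater than 2, no matter how far you search, is what took more than three centuries.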

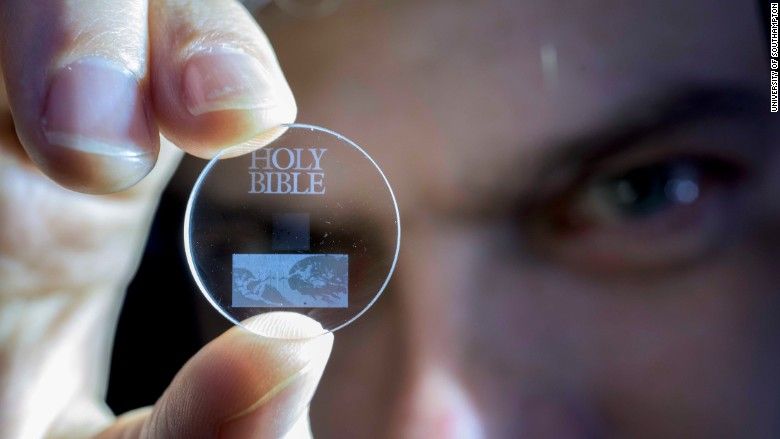

Researchers in the U.K. have developed a way of storing digital data inside tiny structures contained in glass.

The storage technology is so stable and safe that it can survive for billions of years, scientists at the University of Southampton said this week.

That’s a lot longer than your average computer hard drive.

Glaciers are set to disappear completely from almost half of World Heritage sites if business-as-usual emissions continue, according to the first-ever global study of World Heritage glaciers.

The sites are home to some of the world’s most iconic glaciers, such as the Grosser Aletschgletscher in the Swiss Alps, Khumbu Glacier in the Himalayas and Greenland’s Jakobshavn Isbrae.

The study, published in the AGU journal Earth’s Future and co-authored by scientists from the International Union for Conservation of Nature (IUCN), combines data from a global glacier inventory, a review of existing literature and sophisticated computer modeling to analyze the current state of World Heritage glaciers, their recent evolution, and their projected mass change over the 21st century.

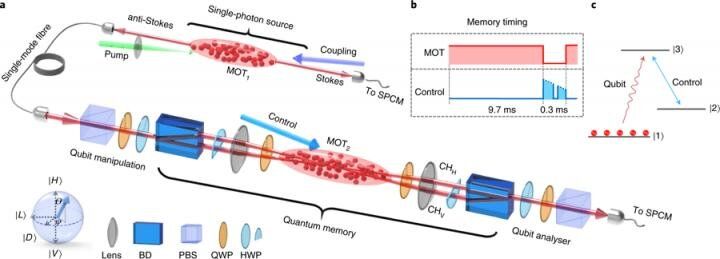

Like memory in conventional computers, quantum memory components are essential for quantum computers, a new generation of data processors that exploit quantum mechanics and can overcome the limitations of classical computers. With their potent computational power, quantum computers may push the boundaries of fundamental science to create new drugs, explain cosmological mysteries, or enhance the accuracy of forecasts and optimization plans. Quantum computers are expected to be much faster and more powerful than their traditional counterparts because information is calculated in qubits, which, unlike the bits used in classical computers, can exist in a superposition of zero and one.

Photonic quantum memory allows for the storage and retrieval of flying single-photon quantum states. However, production of such highly efficient quantum memory remains a major challenge as it requires a perfectly matched photon-matter quantum interface. Meanwhile, the energy of a single photon is too weak and can be easily lost into the noisy sea of stray light background. For a long time, these problems suppressed quantum memory efficiencies to below 50 percent—a threshold value crucial for practical applications.

Now, for the first time, a joint research team led by Prof. Du Shengwang from HKUST, Prof. Zhang Shanchao from SCNU, Prof. Yan Hui from SCNU and Prof. Zhu Shi-Liang from SCNU and Nanjing University has found a way to boost the efficiency of photonic quantum memory to over 85 percent with a fidelity of over 99 percent.

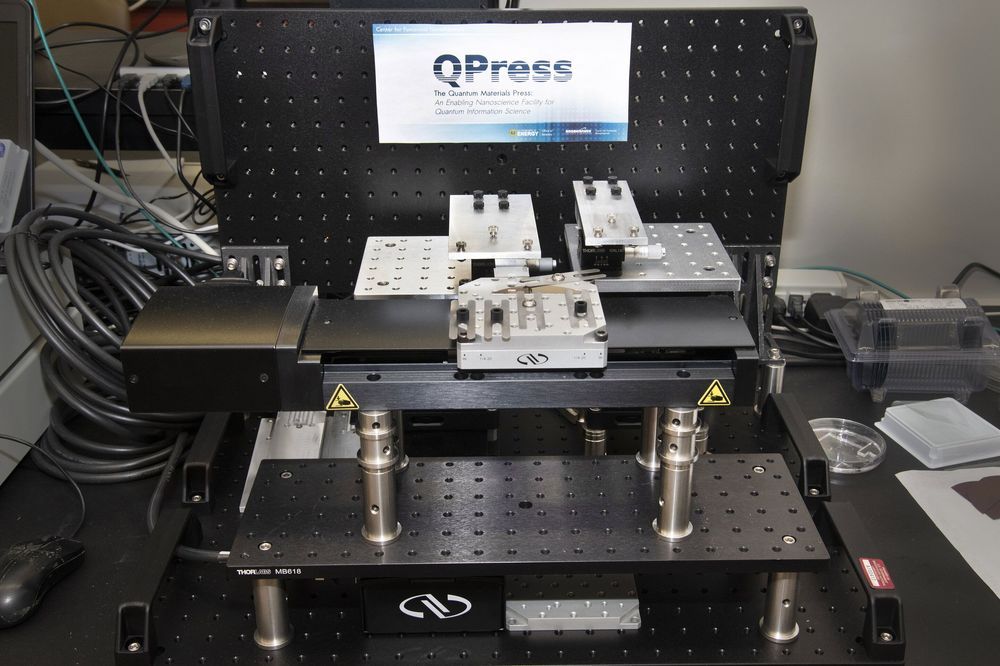

Checking out a stack of books from the library is as simple as searching the library’s catalog and using unique call numbers to pull each book from its shelf location. Using a similar principle, scientists at the Center for Functional Nanomaterials (CFN), a U.S. Department of Energy (DOE) Office of Science User Facility at Brookhaven National Laboratory, are teaming with Harvard University and the Massachusetts Institute of Technology (MIT) to create a first-of-its-kind automated system to catalog atomically thin two-dimensional (2-D) materials and stack them into layered structures. Called the Quantum Material Press, or QPress, this system will accelerate the discovery of next-generation materials for the emerging field of quantum information science (QIS).

Structures obtained by stacking single atomic layers (“flakes”) peeled from different parent bulk crystals are of interest because of the exotic electronic, magnetic, and optical properties that emerge at such small (quantum) size scales. However, flake exfoliation is currently a manual process that yields flakes varying in size, shape, orientation, and number of layers. Scientists use optical microscopes at high magnification to hunt manually through thousands of flakes for the desired ones; this search can take days or even a week and is prone to human error.

Once high-quality 2-D flakes from different crystals have been located and their properties characterized, they can be assembled in the desired order to create the layered structures. Stacking is very time-intensive, often taking longer than a month to assemble a single layered structure. To determine whether the generated structures are optimal for QIS applications—ranging from computing and encryption to sensing and communications—scientists then need to characterize the structures’ properties.