Scientists describe an electronic method that could one day enable brain computers.

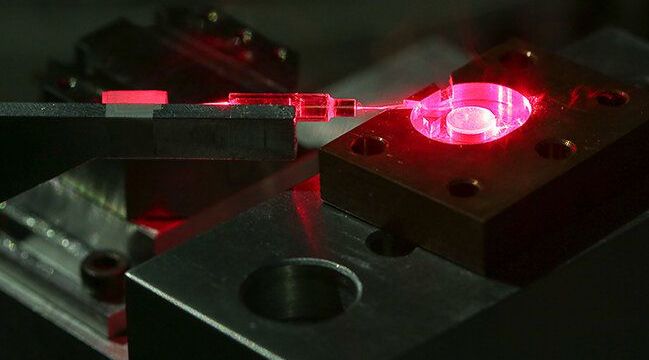

A team of University of Otago/Dodd-Walls Centre scientists has created a novel device that could enable the next generation of faster, more energy-efficient internet. Their breakthrough results have been published in the world’s premier scientific journal Nature this morning.

The internet is one of the single biggest consumers of power in the world. With data capacity expected to double every year and the physical infrastructure used to encode and process data reaching its limits, there is huge pressure to find new solutions to increase the speed and capacity of the internet.

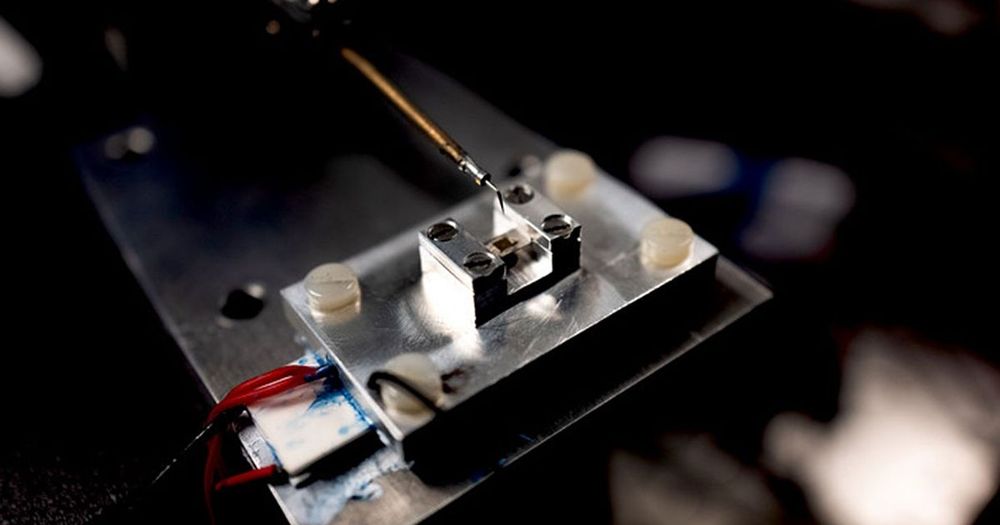

Research by Principal Investigator Dr. Harald Schwefel and Dr. Madhuri Kumari has found an answer. They have created a device called a microresonator optical frequency comb, made out of a tiny disc of crystal. The device transforms a single colour of laser light into a rainbow of 160 different frequencies – all totally in sync with one another and perfectly stable. One such device could replace hundreds of power-consuming lasers currently used to encode and send data around the world.

So yes, Microsoft may (at long last) be redesigning Windows 10 updates in a more responsible manner. But allowing users to block dangerous updates is just one part of the solution. Not sending dangerous updates to users’ computers every few months seems equally important to me.


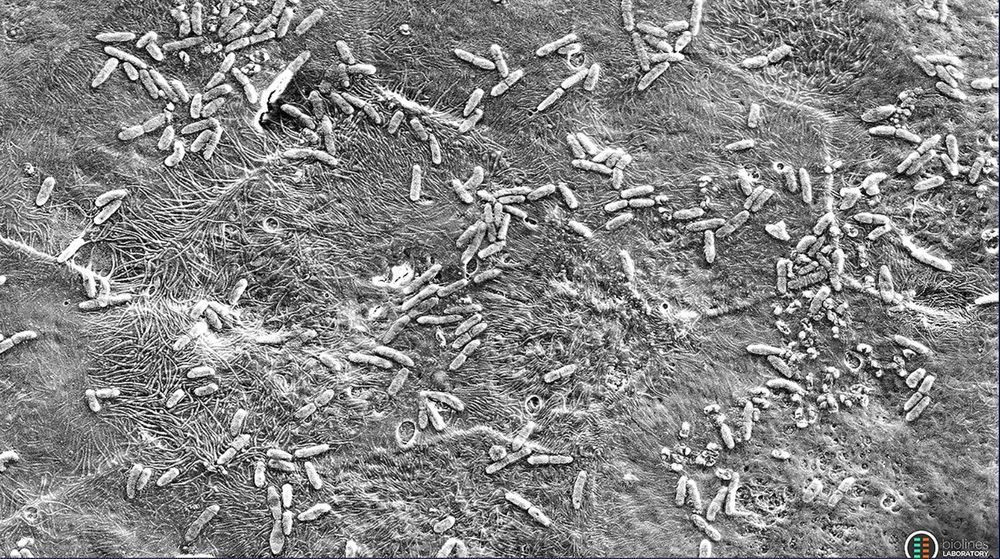

On April 25, a SpaceX Falcon 9 rocket will launch cargo to the space station, including two organs-on-a-chip experiments designed by University of Pennsylvania scientists. They want to understand why so many astronauts get infections while in space. NASA has reported that 15 of the 29 Apollo astronauts had bacterial or viral infections. Between 1989 and 1999, more than 26 space shuttle astronauts had infections.

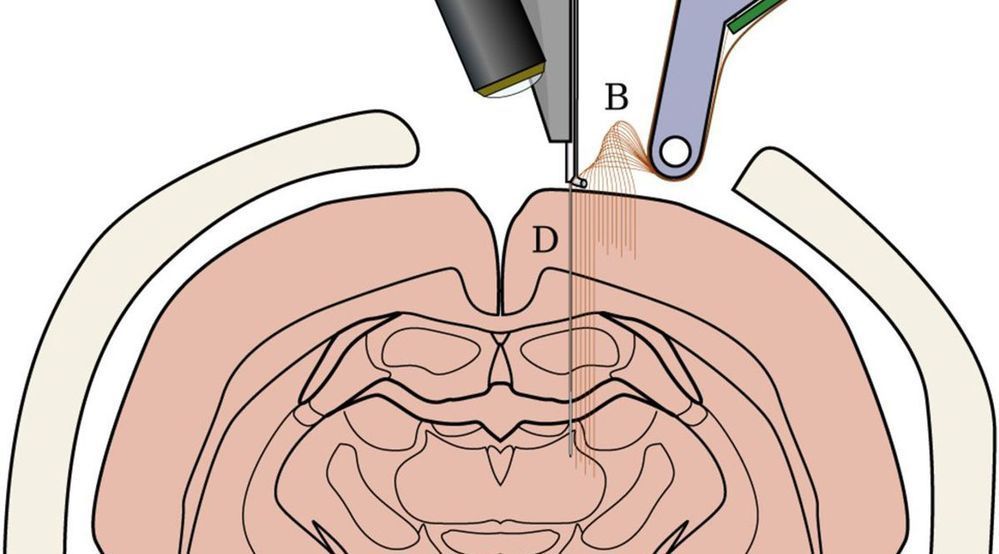

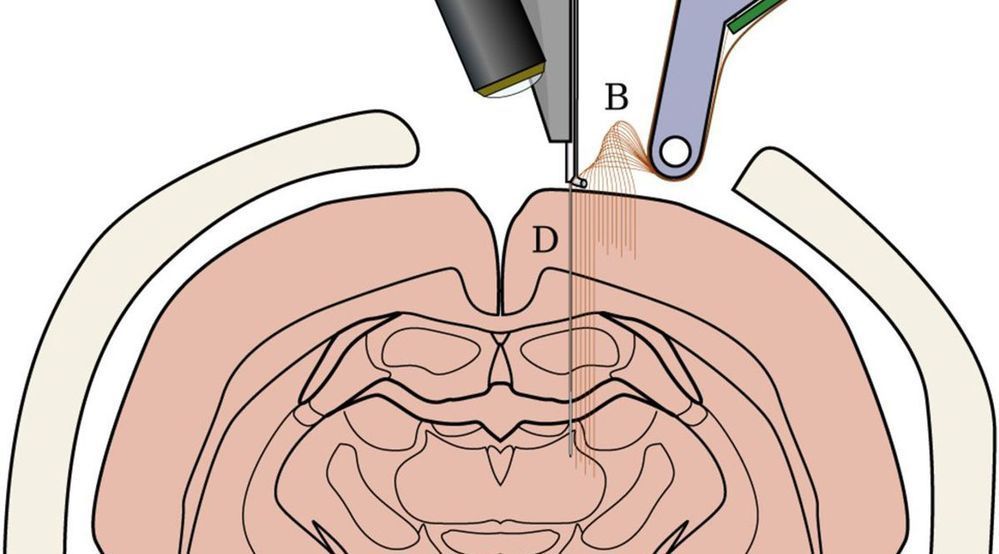

Huh and his team have created two separate experiments for this first launch. The first essentially mimics an infection inside a human airway, to see what happens to the bacteria, and the surrounding cells, in orbit. Huh’s BIOLines lab created the actual chips.

A lung chip is made of a polymer, with a permeable membrane serving as the platform for the human cells. For the lung-on-a-chip, one side of the membrane is coated with lung cells, to process the air, and the other with capillary cells, to provide the blood flow. The membrane is stretched and released to provide the bellows-like effect of real lungs.

A world-record result in reducing errors in semiconductor ‘spin qubits’, a type of building block for quantum computers, has been achieved using the theoretical work of quantum physicists at the University of Sydney Nano Institute and School of Physics.

The experimental result by University of New South Wales engineers demonstrated error rates as low as 0.043 percent, lower than any other spin qubit. The joint research paper by the Sydney and UNSW teams was published this week in Nature Electronics and is the journal’s cover story for April.

“Reducing errors in quantum computers is needed before they can be scaled up into useful machines,” said Professor Stephen Bartlett, a corresponding author of the paper.

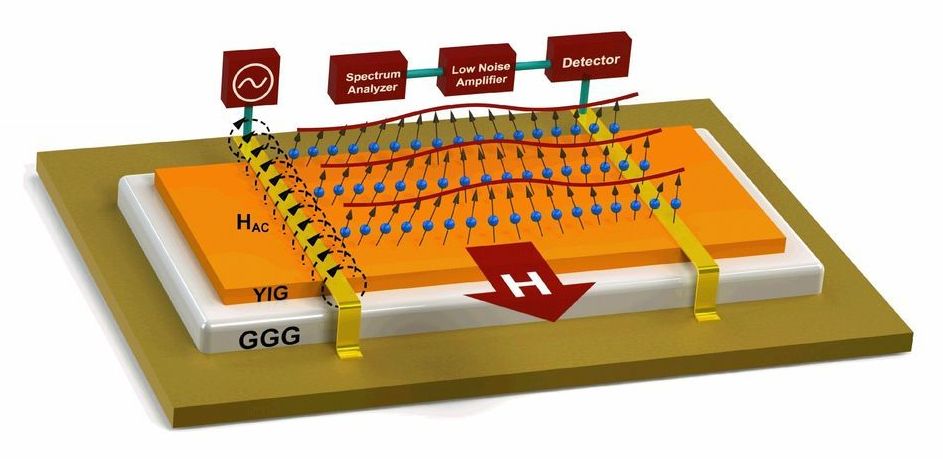

Electronic devices such as transistors are getting smaller and will soon hit the limits of conventional performance based on electrical currents.

Devices based on magnonic currents—quasi-particles associated with waves of magnetization, or spin waves, in certain magnetic materials—would transform the industry, though scientists need to better understand how to control them.

Engineers at the University of California, Riverside, have made an important step toward the development of practical magnonic devices by studying, for the first time, the level of noise associated with propagation of magnon current.

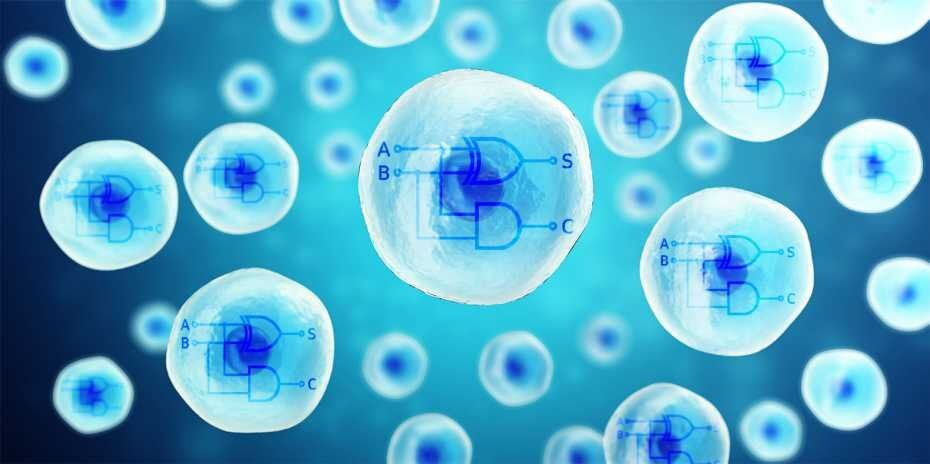

ETH researchers have integrated two CRISPR-Cas9-based core processors into human cells. This represents a huge step towards creating powerful biocomputers.

Controlling gene expression through gene switches based on a model borrowed from the digital world has long been one of the primary objectives of synthetic biology. The digital technique uses what are known as logic gates to process input signals, creating circuits where, for example, output signal C is produced only when input signals A and B are simultaneously present.
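The gate described above, where output C appears only when inputs A and B are both present, is what digital designers call an AND gate. As a minimal illustration in Python (the function names `and_gate` and `truth_table` are made up for this sketch and say nothing about how the ETH researchers implemented their cellular processors), its behaviour can be listed for every combination of inputs:

```python
from itertools import product


def and_gate(a: bool, b: bool) -> bool:
    """Hypothetical helper: output C is produced only when A and B are both present."""
    return a and b


def truth_table(gate):
    """Return (a, b, output) rows for every combination of two boolean inputs."""
    return [(a, b, gate(a, b)) for a, b in product([False, True], repeat=2)]


if __name__ == "__main__":
    # Print one row per input combination; only the last row yields C=True.
    for a, b, c in truth_table(and_gate):
        print(f"A={a!s:<5} B={b!s:<5} -> C={c}")
```

Only the row with both inputs set to `True` produces an output, which is the behaviour the article attributes to a logic gate of this kind.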

Until now, biotechnologists had attempted to build such digital circuits with the help of protein gene switches in cells. However, these had some serious disadvantages: they were not very flexible, could accept only simple programming, and were capable of processing just one input at a time, such as a specific metabolic molecule. More complex computational processes in cells are thus possible only under certain conditions, are unreliable, and frequently fail.