Over the past few decades, electronics engineers and materials scientists worldwide have been investigating the potential of various materials for fabricating transistors, the devices that amplify or switch electrical signals in electronics. Two-dimensional (2D) semiconductors are known to be particularly promising materials for fabricating new electronic devices.

Despite their advantages, the use of 2D semiconductors in electronics depends heavily on their integration with high-quality dielectrics, which are insulating materials that conduct electrical current poorly. These dielectrics, however, can be difficult to deposit on 2D semiconductor substrates.

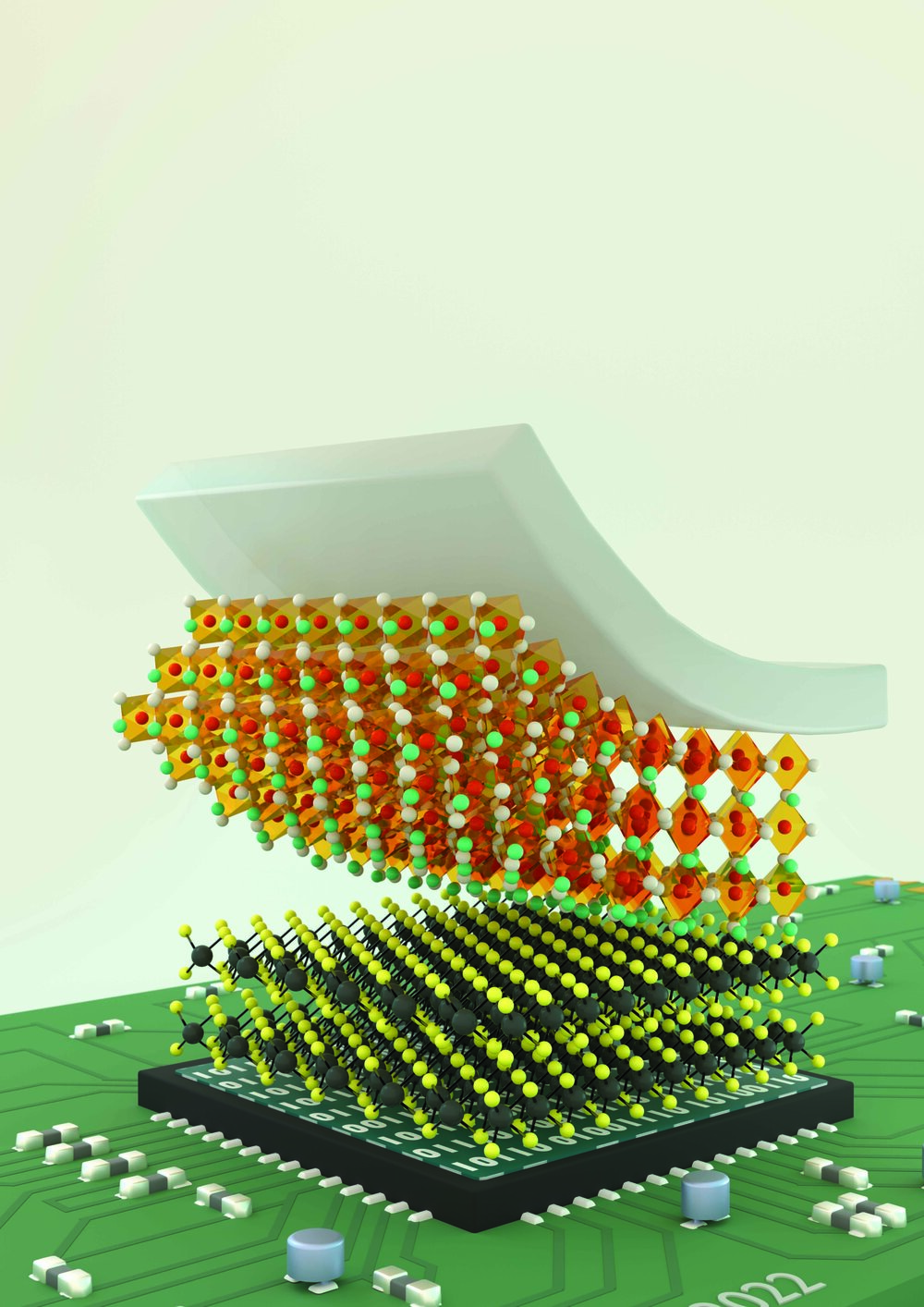

Researchers at Nanyang Technological University, Peking University, Tsinghua University, and the Beijing Academy of Quantum Information Sciences have recently demonstrated the successful integration of single-crystal strontium titanate, a high-κ perovskite oxide, with 2D semiconductors using van der Waals forces. Their paper, published in Nature Electronics, could open up possibilities for the development of new types of transistors and electronic components.