Insects, with their remarkable ability to undergo complete metamorphosis, have long fascinated scientists seeking to understand the underlying genetic mechanisms governing this transformative process.

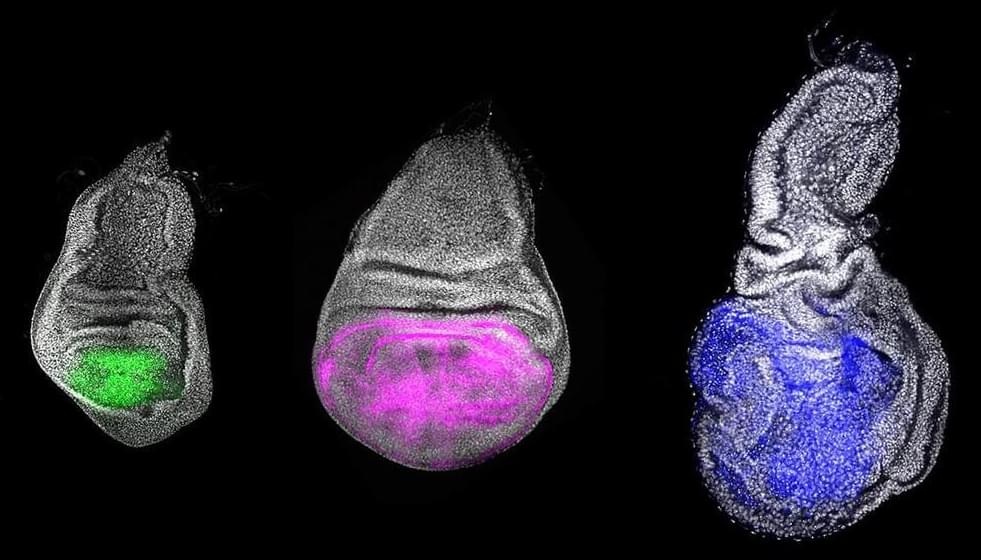

Now, a recent study conducted by the Institute of Evolutionary Biology (IBE, CSIC-UPF) and IRB Barcelona has shed light on the crucial role of three genes – Chinmo, Br-C and E93 – in orchestrating the stages of insect development. Published in eLife, the research provides insights into the evolutionary origins of metamorphosis and into the role of these genes in growth, development and cancer regulation [1].

Longevity.Technology: Chinmo might sound like a Pokémon character, but the truth is much more interesting. The gene is conserved throughout the evolution of insects, and scientists think that it, along with the more conventionally named Br-C and E93, could play a key role in the evolution of metamorphosis, acting as the hands of the biological clock in insects. A maggot is radically different from the fly into which it changes – could understanding and leveraging the biology involved one day allow us to change cultured skin cells into replacement organs or to stop tumors in their early stages of formation? No, Dr Seth Brundle, you can buzz off.