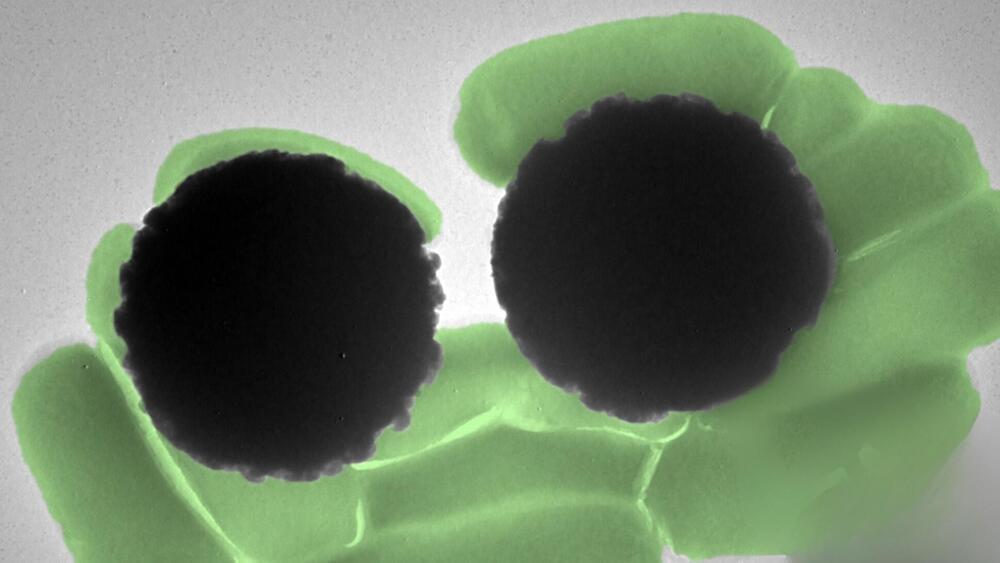

The findings suggest that, in human hematopoietic stem cells, adenosine base editing raised the expression of fetal hemoglobin to higher, more stable, and more uniform levels than other genome editing technologies that use CRISPR/Cas9 nuclease.

“Ultimately, we showed that not all genetic approaches are equal,” said Jonathan Yen, PhD, genome engineering group director at St. Jude Children’s Research Hospital. “Base editors may be able to create more potent and precise edits than other technologies. But we must do more safety testing and optimization.”

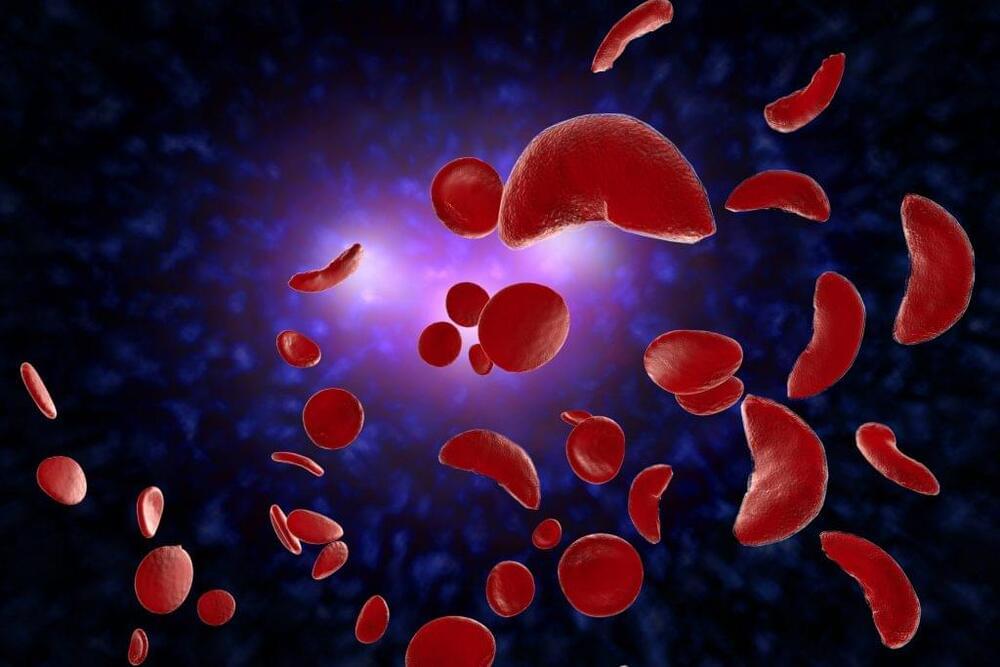

SCD and beta-thalassemia are blood disorders that affect millions of people and are caused by mutations in a gene encoding hemoglobin. Restoring expression of an alternative hemoglobin subunit that is normally active in the developing fetus has previously shown therapeutic benefit in patients with SCD and beta-thalassemia. The researchers wanted to find and optimize a genome editing technology to edit the fetal hemoglobin gene.

Adult hemoglobin, expressed primarily after birth, contains four protein subunits: two beta-globin and two alpha-globin. Mutations in the beta-globin gene cause sickle cell disease and beta-thalassemia. But humans have another hemoglobin subunit gene (gamma-globin), which is expressed during fetal development instead of beta-globin. Gamma-globin combines with alpha-globin to form fetal hemoglobin. Normally, around birth, gamma-globin expression is turned off and beta-globin expression is turned on, switching production from fetal to adult hemoglobin. Genome editing technologies can introduce mutations that turn the gamma-globin gene back on. The resulting increase in fetal hemoglobin can effectively substitute for defective adult hemoglobin.