Axolotls can completely rebuild their thymus, a key immune organ

Neuromorphic computers modeled after the human brain can now solve the complex equations behind physics simulations — something once thought possible only with energy-hungry supercomputers. The breakthrough could lead to powerful, low-energy supercomputers while revealing new secrets about how our brains process information.

A meta-analysis of 115 studies evaluating cognitive function in people with schizophrenia confirms that processing speed, especially as measured by symbol coding tasks, remains among the most impaired cognitive domains compared to controls.

This impairment was reliably more severe than that observed in most other tested cognitive domains, suggesting processing speed may be central to broader cognitive deficits in this population and may relate to altered brain connectivity.

This meta-analysis provides an updated review of the evidence for a central processing speed impairment in people with schizophrenia.

It’s mucus season—the time of year this sticky goo makes an appearance in the form of runny noses and phlegmy coughs. While most people are only aware of mucus when they are sick, their organs are blanketed with the stuff year-round. And, when it comes to the microbes living in our bodies, mucus is incredibly important. It provides a spatial and nutritional niche for diverse organisms to thrive, while also preventing them from getting too close to host tissues. Mucus also regulates microbial growth, metabolism and virulence, ultimately controlling the composition of microbial communities throughout the body. As such, scientists are looking at how to exploit mucus-microbe interactions to foster human health.

Mucus is found in creatures spanning the tree of life, from corals to people. In humans and other mammals, the slick goop coats epithelial tissues, including those in the mouth, lungs, gut and urogenital tract. In these regions, mucus protects cells from physical and enzymatic stress, heals wounds and selectively filters particles that can pass through to underlying tissues.

cGAS forms condensates on cytosolic double-stranded (ds)DNA and initiates inflammatory responses. Lueck et al. find that, although cGAS forms condensates on various nucleic acids, it enters a hydrogel-like state only with dsDNA via dimerization. The gel-like cGAS condensate not only protects bound dsDNA from exonucleases but also facilitates catalysis.

Weight loss is a well-recognized but poorly understood non-motor feature of Parkinson’s disease (PD). Many patients progressively lose weight as the disease advances, often alongside worsening motor symptoms and quality of life. Until now, it was unclear whether this reflected muscle loss, poor nutrition, or deeper metabolic changes. New research shows that PD-related weight loss is driven mainly by a selective loss of body fat, while muscle mass is largely preserved, and is accompanied by a fundamental shift in how the body produces energy.

Although PD is classically viewed as a neurological disorder, increasing evidence points to widespread metabolic dysfunction. Patients often experience fatigue and nutritional decline, yet dietary advice has largely focused on boosting calories. The new findings challenge this conventional view, showing that weight loss in PD reflects a failure of the body’s standard energy-producing pathways rather than reduced food intake alone. The findings are published in the Journal of Neurology, Neurosurgery & Psychiatry.

The study was led by Professor Hirohisa Watanabe from the Department of Neurology at Fujita Health University, School of Medicine, Japan, along with Dr. Atsuhiro Higashi and Dr. Yasuaki Mizutani from Fujita Health University. The team aimed to clarify what exactly is lost when patients with PD lose weight and why the body is forced to change its energy strategy.

https://www.patreon.com/9699306/join.

https://www.youtube.com/channel/UC-8G5xVNXvt7eQKzeFWr9Vw/join.

I thought it was about time that we got excited by some clever use of science in fiction. We may not be able to build a Rocinante tomorrow but all the elements are based on real science that we have been able to demonstrate.

No breaking the laws of physics here!! A fairly unique thing for this channel.

Keep an eye out for the shorts series, which will go into a bit more detail on some of these topics. And if you want to know more about fission-based propulsion, then join the nerd club through YouTube memberships or on Patreon.

Thanks for watching, stay nerdy!

Chapters:

00:00 — The best science in fiction.

04:20 — Solomon's test flight.

06:42 — It is rocket science!

10:26 — Nuclear reactions.

16:47 — Fusion.

21:00 — The challenges.

25:47 — Is anyone building it?

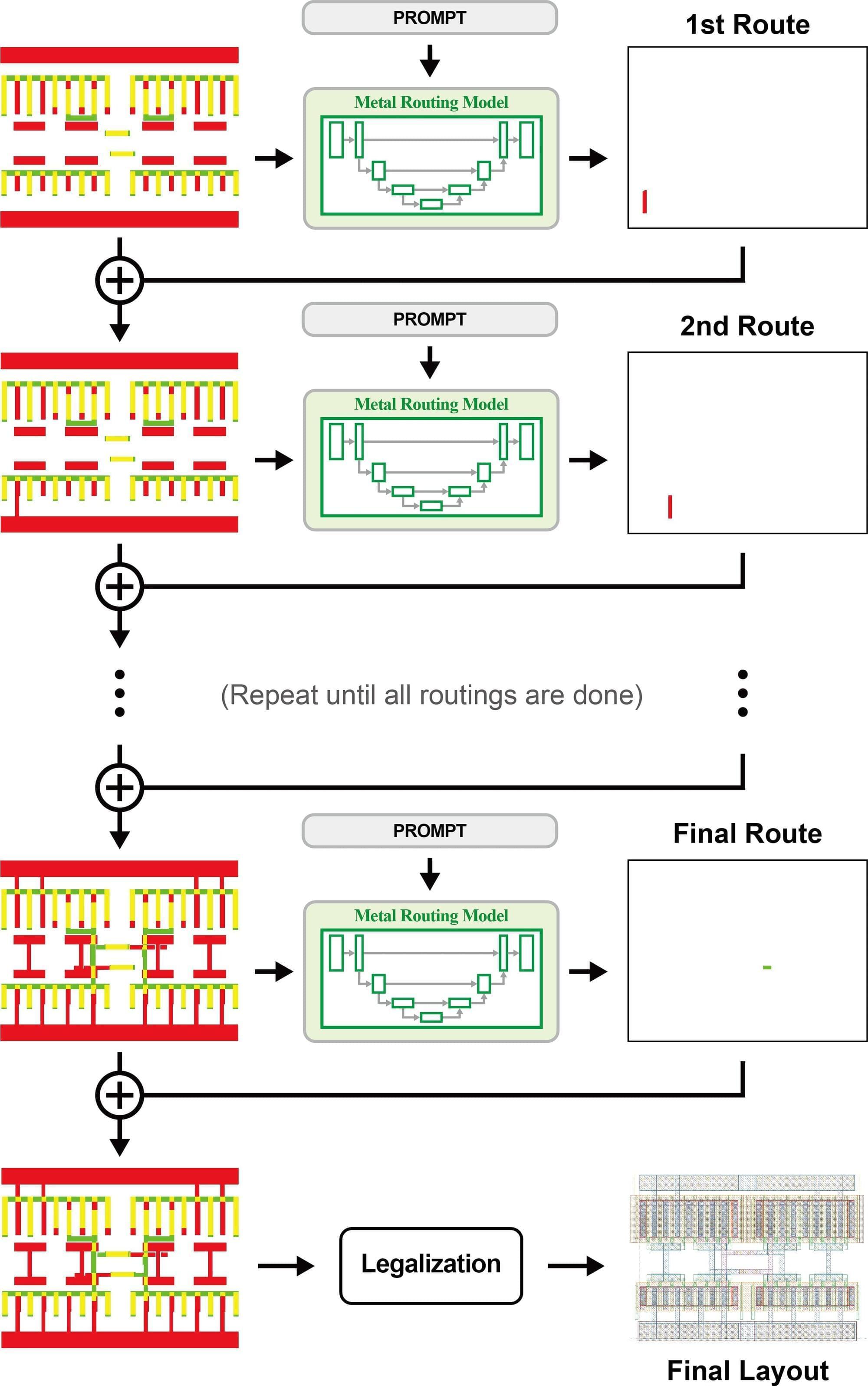

Researchers at Pohang University of Science and Technology (POSTECH) have developed an artificial intelligence approach that addresses a key bottleneck in analog semiconductor layout design, a process that has traditionally depended heavily on engineers’ experience. The work was recently published in the journal IEEE Transactions on Circuits and Systems I: Regular Papers.

Semiconductors are used in a wide range of technologies, including smartphones, vehicles, and AI servers. However, analog layout design remains difficult to automate because designers must manually arrange structures that determine performance and reliability while meeting a large number of design rules.

Automation has been especially challenging in analog design because layouts are highly complex and design strategies differ significantly from circuit to circuit. In addition, training data is scarce, since layout data is typically treated as proprietary and is rarely shared outside companies.

Aliens will make use of paraconsistent logic.

Is math truly universal—or just human? Explore how alien minds might think, count, and reason in ways we don’t recognize as mathematics at all.

Get Nebula using my link for 50% off an annual subscription: https://go.nebula.tv/isaacarthur.

Watch my exclusive video The Future of Interstellar Communication: https://nebula.tv/videos/isaacarthur–…

Check out Joe Scott’s Oldest & Newest: https://nebula.tv/videos/joescott-old…

🚀 Join this channel to get access to perks: / @isaacarthursfia

🛒 SFIA Merchandise: https://isaac-arthur-shop.fourthwall…

🌐 Visit our Website: http://www.isaacarthur.net

🎬 Join Nebula: https://go.nebula.tv/isaacarthur

❤️ Support us on Patreon: / isaacarthur

⭐ Support us on Subscribestar: https://www.subscribestar.com/isaac-a…

👥 Facebook Group: / 1583992725237264

📣 Reddit Community: / isaacarthur

🐦 Follow on Twitter / X: / isaac_a_arthur

💬 SFIA Discord Server: / discord

Credits:

Alien Mathematics

Written, Produced & Narrated by: Isaac Arthur

Select imagery/video supplied by Getty Images

Music by Epidemic Sound: http://nebula.tv/epidemic & Stellardrone

Chapters:

0:00 Intro

2:02 Why We Expect Mathematics to Be Universal

6:32 Math Is Not the Same Even for Humans

10:47 How Alien Biology Could Reshape Their Mathematics

16:44 Alien Logic: When the Rules Themselves Don’t Match

20:37 Oldest & Newest

21:41 Can We Ever Bridge the Mathematical Gap?

Antibodies modulate ongoing and future B cell responses. Cyster and Wilson review the various mechanisms whereby antibody feedback shapes B cell responses and present a framework for conceptualizing the ways antigen-specific antibody may influence immunity in conditions as diverse as infectious disease, autoimmunity, and cancer.