Boyle, 85 Commercial Road, Melbourne, Victoria, Australia. Phone: 61.3.9282.2111; Email: [email protected].

1Burnet Institute, Melbourne, Victoria, Australia.

Francesco J. DeMayo and team report that uterine ZMIZ1 co-regulates the estrogen receptor to establish and maintain pregnancy and general uterine health, supporting cell growth responses and preventing uterine fibrosis.

The figure shows that epithelial cell DNA synthesis, as reflected by EdU incorporation, was inhibited by Zmiz1 deletion.

1Pregnancy & Female Reproduction Group, Reproductive and Developmental Biology Lab, NIEHS, Research Triangle Park, North Carolina, USA.

2Inotiv-RTP, Durham, North Carolina, USA.

3Division of Hematology-Oncology, Department of Internal Medicine, University of Michigan School of Medicine, Ann Arbor, Michigan, USA.

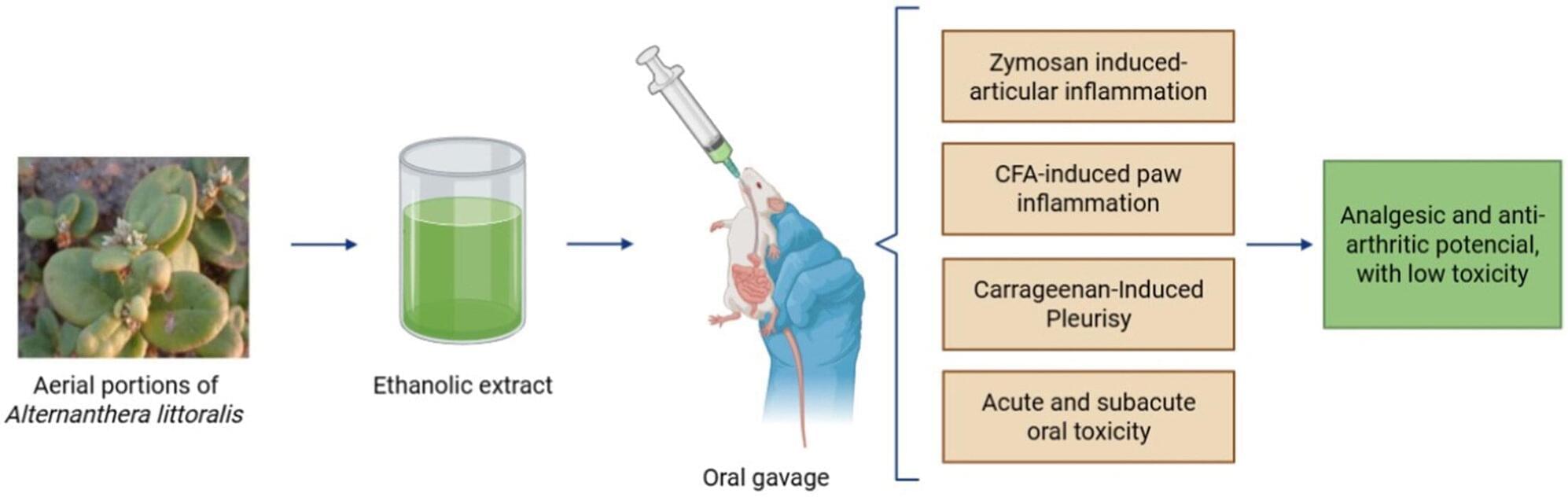

In Brazil, researchers from the Federal University of Grande Dourados (UFGD), the State University of Campinas (UNICAMP), and São Paulo State University (UNESP) have conducted a study that confirmed the safety and anti-inflammatory, analgesic, and anti-arthritic properties of the Joseph’s Coat plant (Alternanthera littoralis).

Native to the Brazilian coast, this plant has been used in folk medicine to combat inflammation, microbial infections, and parasitic diseases. Until now, there has been little pharmacological evidence to support these applications or analyze their safety.

The study is published in the Journal of Ethnopharmacology.

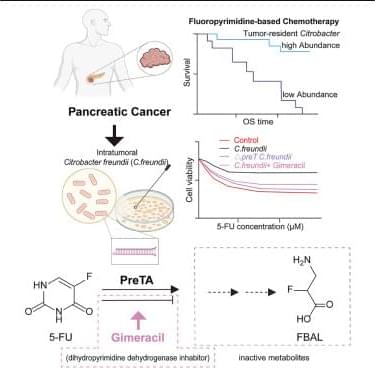

Xu et al. report that intratumoral Citrobacter enrichment correlates with poor overall survival in pancreatic cancer patients. An intratumoral Citrobacter freundii strain metabolizes 5-fluorouracil (5-FU) into inactive products via PreTA. Gimeracil, an inhibitor of human dihydropyrimidine dehydrogenase, preserves 5-FU efficacy by blocking PreTA activity.

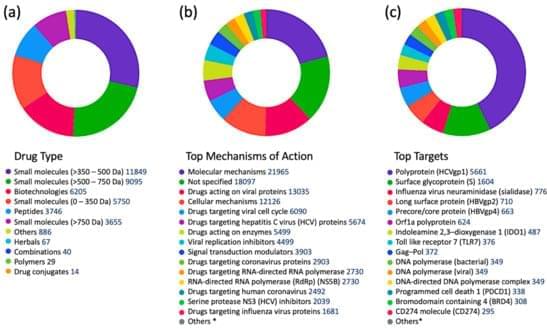

Despite great technological and medical advances in fighting viral diseases, new therapies for most of them are still lacking, and existing antivirals suffer from major limitations: drug resistance and a limited spectrum of activity. Most approved antivirals are directly acting antiviral (DAA) drugs, which interfere with viral proteins. This gives them great selectivity for their viral targets, but it also makes them prone to resistance and narrows their spectrum. Host-targeted antivirals (HTAs) are now on the rise in drug discovery and development pipelines, both in academia and in the pharmaceutical industry. These drugs target host proteins involved in the virus life cycle and are considered promising alternatives to DAAs because of their broader spectrum and lower potential for resistance.

Mind transfers, nanotech, and robotic innovations take center stage in this visionary 2026 book.

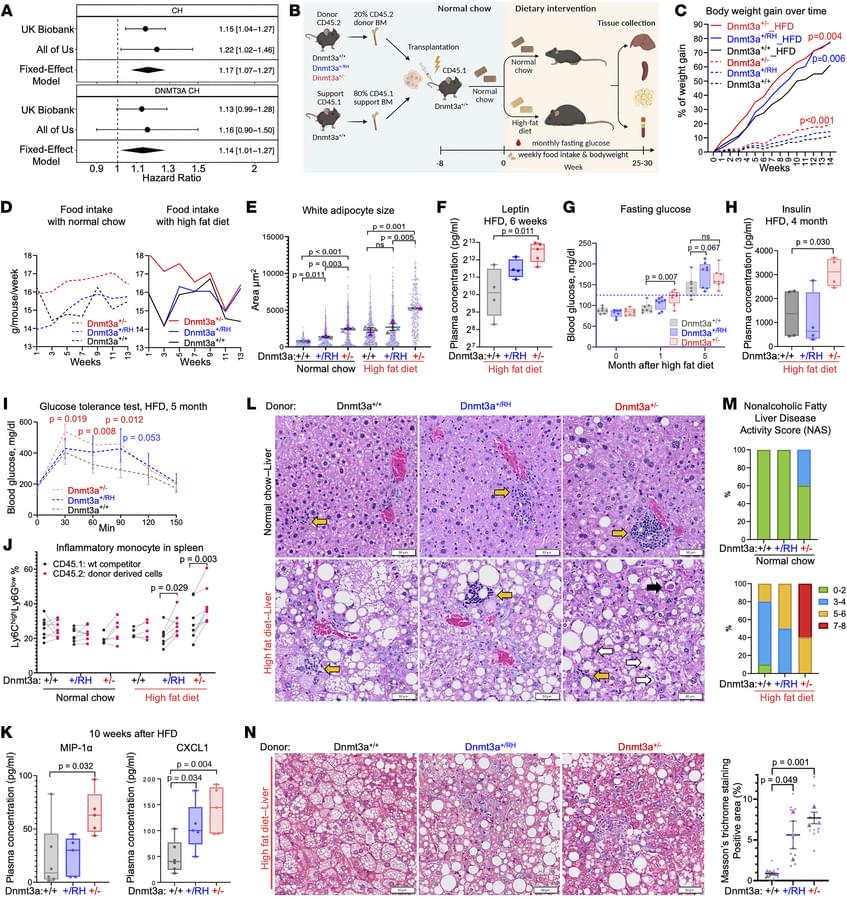

Guryanova, 1200 Newell Drive, PO Box 100267, Gainesville, Florida, 32610, USA. Phone: 352.294.8590; Email: [email protected].

1University of Florida College of Medicine, Gainesville, Florida, USA.

With the help of artificial intelligence, an international team of researchers has made the first major inroad to date toward a new and more effective way to fight the monkeypox virus (MPXV), which causes a painful and sometimes deadly disease that can be especially dangerous for children, pregnant women and immunocompromised people.

Reporting in the journal Science Translational Medicine, the team found that when mice were injected with a viral surface protein recommended by AI, the animals produced antibodies that neutralized MPXV, suggesting the breakthrough could be used in a new mpox vaccine or antibody therapy.

In 2022, mpox began to spread around the world, causing flulike symptoms and painful rashes and lesions in more than 150,000 people and almost 500 deaths. Vaccines developed to fight smallpox were repurposed amid the outbreak to help the most vulnerable patients, but they are complicated and costly to produce because they are manufactured from a whole, weakened virus.