Quantum computers differ fundamentally from classical ones. Instead of bits, which are always either 0 or 1, they use “qubits,” which can exist in a combination of both states at once (superposition) and can be linked to one another in ways classical bits cannot (entanglement).

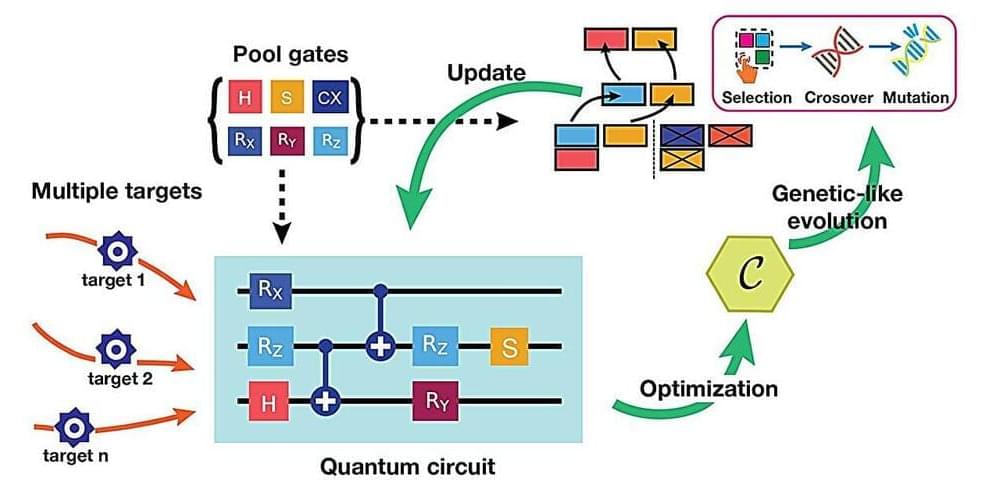

To simulate dynamic processes, process data, or carry out other essential tasks, a quantum computer must first translate complex input data into “quantum data” that it can work with. This process is known as quantum compilation.

Essentially, quantum compilation “programs” the quantum computer by converting a particular goal into an executable sequence. Just as a GPS app converts your desired destination into a series of steps you can follow, quantum compilation translates a high-level goal into a precise sequence of quantum operations that the quantum computer can execute.
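To make the idea concrete, here is a minimal toy sketch in plain Python. It is not how a real quantum compiler works: it assumes the goal is a two-qubit SWAP operation and that the hardware offers only CNOT gates, then checks that a textbook three-CNOT sequence reproduces the goal. The names `sequence_unitary`, `CNOT_01`, and `CNOT_10` are illustrative, not part of any library.

```python
# Toy illustration: a "goal" (SWAP) expressed as a sequence of simpler gates.
# Basis order is |q0 q1>: index 0 = |00>, 1 = |01>, 2 = |10>, 3 = |11>.

# CNOT with q0 as control and q1 as target.
CNOT_01 = [[1, 0, 0, 0],
           [0, 1, 0, 0],
           [0, 0, 0, 1],
           [0, 0, 1, 0]]

# CNOT with q1 as control and q0 as target.
CNOT_10 = [[1, 0, 0, 0],
           [0, 0, 0, 1],
           [0, 0, 1, 0],
           [0, 1, 0, 0]]

# The high-level goal: exchange the states of the two qubits.
SWAP = [[1, 0, 0, 0],
        [0, 0, 1, 0],
        [0, 1, 0, 0],
        [0, 0, 0, 1]]


def matmul(a, b):
    """Multiply two square matrices given as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def sequence_unitary(gates):
    """Return the overall matrix of gates applied in list order (first gate first)."""
    n = len(gates[0])
    total = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
    for gate in gates:
        # A later gate acts on the result of the earlier ones, so it multiplies on the left.
        total = matmul(gate, total)
    return total


# The "compiled" program: three executable steps that together achieve the goal.
sequence = [CNOT_01, CNOT_10, CNOT_01]
matches_goal = sequence_unitary(sequence) == SWAP
print("Sequence implements SWAP:", matches_goal)
```

In this sketch the sequence is already known and only verified. The hard part of real quantum compilation is finding such a sequence for goals far larger and less regular than a single SWAP.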