Drones Might Work Longer With Some Bird-Inspired Modifications

Small drones have a problem: their battery life runs out relatively quickly. A team of roboticists says it has created special landing gear that can help conserve precious battery life.

The Navy just awarded Boeing a contract to build a giant robot submarine, called the Orca Extra-Large Unmanned Undersea Vehicle, which it says will prowl the depths of the ocean autonomously for months at a time.

The U.S. Naval Institute says the sub will be used for “mine countermeasures, anti-submarine warfare, anti-surface warfare, electronic warfare and strike missions.”

Do you hear that? It’s the sound of Google executives practicing their lines ahead of Google I/O. The company’s annual developer conference in Mountain View, California, kicks off this Tuesday. The three-day event gives Google a chance to show off its latest work and set the tone for the year to come.

Can’t make it to the Shoreline Amphitheatre? You can watch the entire keynote on the event page or on the Google Developers YouTube channel. It begins at 10 am PT (1 pm ET) on May 7 and should last about 90 minutes. We’ll liveblog the whole thing here on WIRED.com.

Google I/O is technically a developer’s conference, and there should be plenty of talk about all the fun things developers can build using Google’s latest tools. But it’s also an opportunity to get consumers excited about what’s cooking in Mountain View. Last year, the company used the conference to debut its “digital wellness” initiative and a suite of new visual search tools for Google Lens. It also introduced Duplex, the eerily realistic AI assistant that can make dinner reservations and schedule haircuts like a human would.

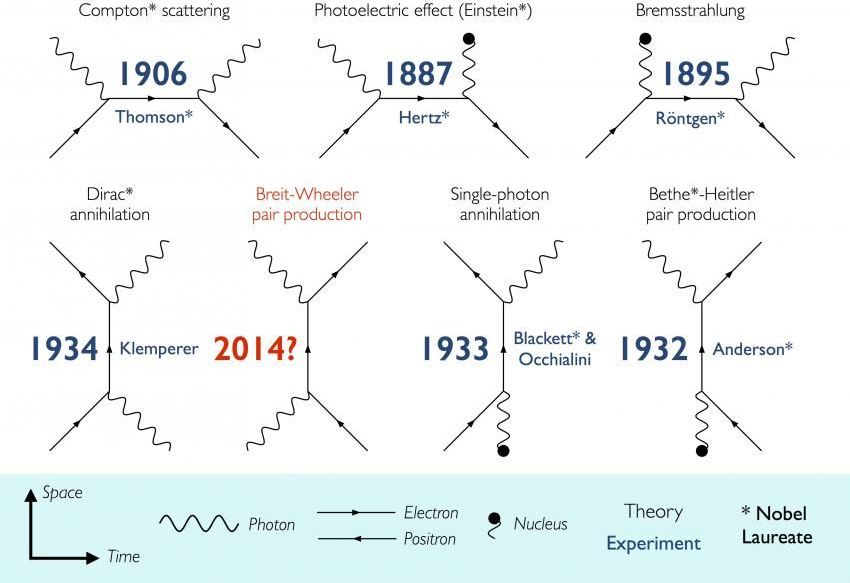

2014: Basically, a real replicator could be possible with this discovery.

Imperial College London physicists have discovered how to create matter from light — a feat thought impossible when the idea was first theorised 80 years ago.

In just one day over several cups of coffee in a tiny office in Imperial’s Blackett Physics Laboratory, three physicists worked out a relatively simple way to physically prove a theory first devised by scientists Breit and Wheeler in 1934.

Breit and Wheeler suggested that it should be possible to turn light into matter by smashing together only two particles of light (photons) to create an electron and a positron, the simplest method of turning light into matter ever predicted. The calculation was found to be theoretically sound, but Breit and Wheeler said that they never expected anybody to physically demonstrate their prediction. It has never been observed in the laboratory, and past experiments to test it have required the addition of massive high-energy particles.
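The article does not give the numbers, but the standard kinematic threshold for two-photon pair production helps illustrate why the process is so hard to observe. Two photons with energies E1 and E2 meeting at an angle θ between their directions can only create an electron-positron pair if E1 · E2 · (1 − cos θ) ≥ 2 (m_e c²)², where m_e c² ≈ 0.511 MeV is the electron's rest energy. The sketch below is a minimal illustration of that textbook condition, not the Imperial team's proposed experiment; the function name `min_partner_photon_energy_ev` is hypothetical, and the constant is the CODATA value of the electron rest energy.

```python
import math

# Electron rest energy m_e c^2 in electronvolts (CODATA value, about 0.511 MeV).
ELECTRON_REST_ENERGY_EV = 0.51099895e6


def min_partner_photon_energy_ev(e1_ev: float, angle_rad: float = math.pi) -> float:
    """Hypothetical helper: smallest energy (eV) a second photon needs so that
    colliding with a photon of energy e1_ev, at angle angle_rad between their
    directions of travel, can produce an electron-positron pair.

    Uses the two-photon threshold E1 * E2 * (1 - cos(theta)) >= 2 * (m_e c^2)^2.
    The default angle of pi is a head-on collision, the most favourable case.
    """
    if e1_ev <= 0:
        raise ValueError("photon energy must be positive")
    geometry = 1.0 - math.cos(angle_rad)
    if geometry <= 0:
        # Photons travelling in the same direction (theta = 0) can never pair-produce.
        return math.inf
    return 2.0 * ELECTRON_REST_ENERGY_EV**2 / (e1_ev * geometry)


if __name__ == "__main__":
    # A near-infrared photon of about 1 eV, hit head-on.
    needed = min_partner_photon_energy_ev(1.0)
    print(f"partner photon needs about {needed / 1e9:.0f} GeV")
```

For a head-on collision with a photon of about 1 eV (near-infrared light), the partner photon would need roughly 261 GeV, while two photons of 0.511 MeV each, meeting head-on, sit exactly at threshold. This is only the energy bookkeeping; it says nothing about how often such collisions actually produce pairs.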