And this shows one of the many ways in which the Economic Singularity is rushing at us. The 🦾🤖 Bots are coming soon to a job near you.

NVIDIA unveiled a suite of services, models, and computing platforms designed to accelerate the development of humanoid robots globally. Key highlights include:

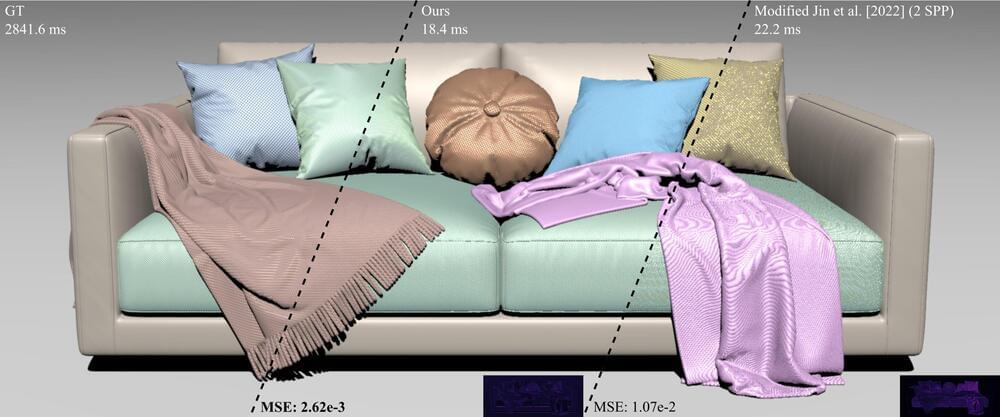

- NVIDIA NIM™ Microservices: These containers, powered by NVIDIA inference software, streamline simulation workflows and reduce deployment times. Two new AI microservices, MimicGen and Robocasa, enhance generative physical AI in Isaac Sim™, which is built on @NVIDIAOmniverse.

- NVIDIA OSMO Orchestration Service: A cloud-native service that simplifies and scales robotics development workflows, cutting cycle times from months to under a week.

- AI-Enabled Teleoperation Workflow: Demonstrated at #SIGGRAPH2024, this workflow generates synthetic motion and perception data from minimal human demonstrations, saving time and costs in training humanoid robots.

NVIDIA’s comprehensive approach includes building three computers to empower the world’s leading robot manufacturers: NVIDIA AI and DGX to train foundation models, Omniverse to simulate and enhance AIs in a physically based virtual environment, and Jetson Thor, a robot supercomputer, to run the models. The introduction of NVIDIA NIM microservices for generative AI in robot simulation further accelerates humanoid robot development.