This is a rewritten story I’m publishing for the first time on The Huffington Post. It’s part of my goal to get other communities involved with supporting transhumanism:

My new story for Vice Motherboard on how in the near future we will edit our realities to suit our tastes and desires:

I am currently in Europe, talking about humanity’s future via science, technology, and design. Onward transhumanist vision!

Nicky Ashwell, 29, from London, can now carry out tasks with both hands for the first time, thanks to a hand developed by prosthetic experts Steeper.

“The Biological Technologies Office (BTO), which opened in April 2014, aims to support extremely ambitious — some say fantastical — technologies ranging from powered exoskeletons for soldiers to brain implants that can control mental disorders. DARPA’s plan for tackling such projects is being carried out in the same frenetic style that has defined the agency’s research in other fields.” Read more

A fun article below on an upcoming Financial Times event. Transhumanism and AI will be part of the discussions. They’re going to have lots of weird technology there, as well as robots wandering around.

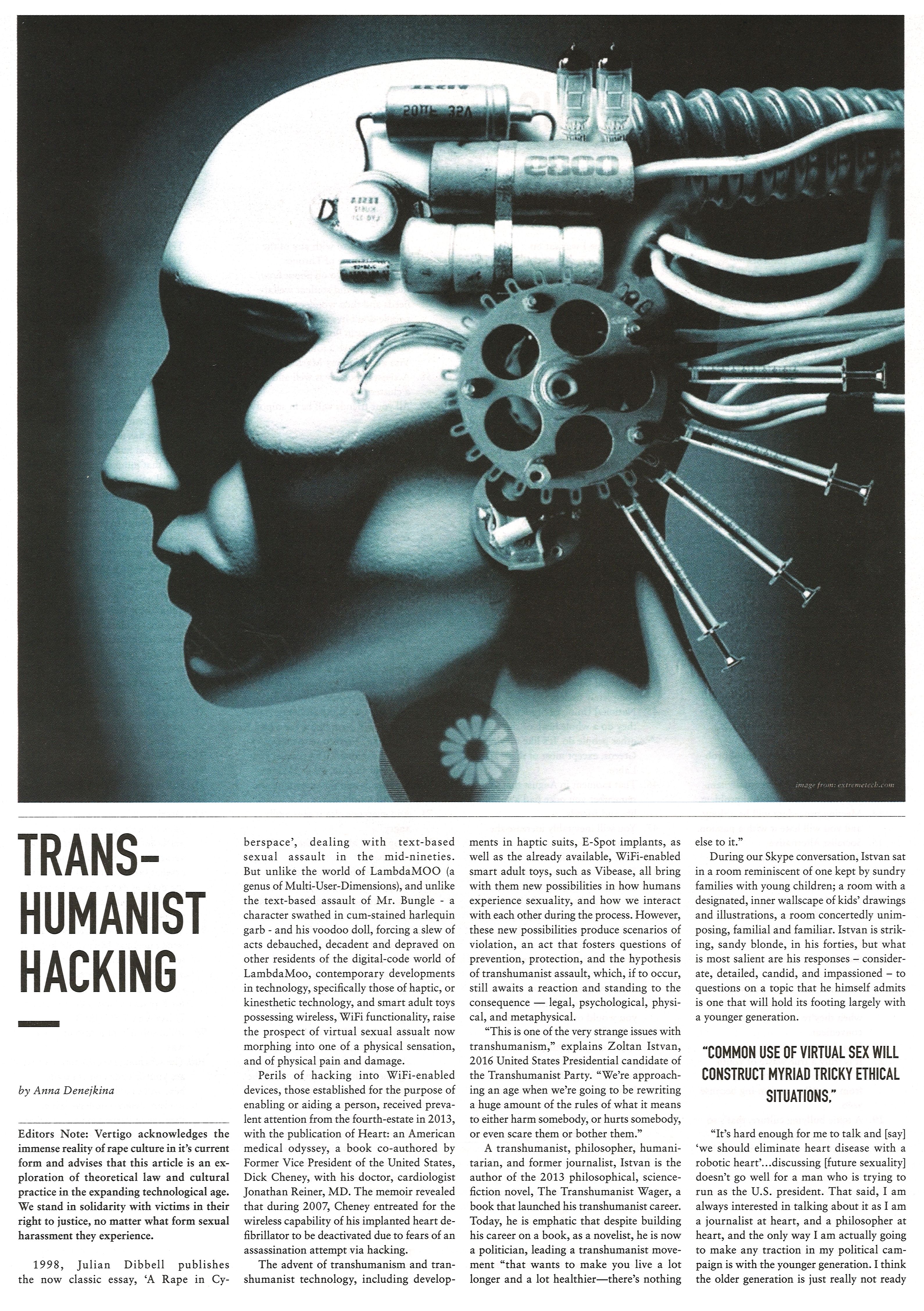

An interesting story on transhumanism, virtual rape, and the thorny field of developing laws to protect people in virtual reality. This story first appeared in Australian print magazine Vertigo: