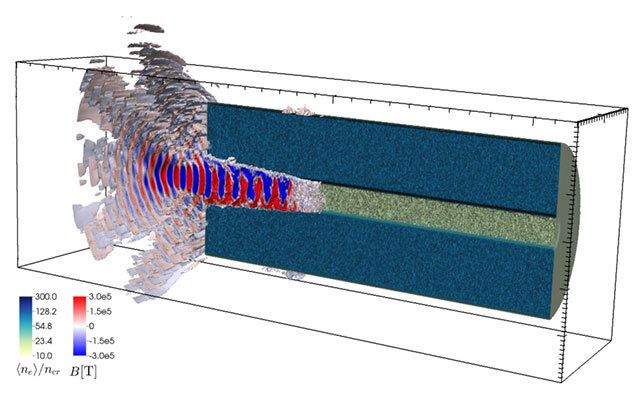

Neutron stars naturally generate intense magnetic fields, and researchers have been striving for many years to achieve similar results. UC San Diego mechanical and aerospace engineering graduate student Tao Wang recently demonstrated how an extremely strong magnetic field, similar to that on the surface of a neutron star, can be not only generated inside a solid material but also detected there using an X-ray laser.

Wang carried out his research with the help of simulations run on the Comet supercomputer at the San Diego Supercomputer Center (SDSC), as well as on Stampede and Stampede2 at the Texas Advanced Computing Center (TACC). All three systems are part of a National Science Foundation program called the Extreme Science and Engineering Discovery Environment (XSEDE).

“Wang’s findings were critical to our recently published study’s overall goal of developing a fundamental understanding of how multiple laser beams of extreme intensity interact with matter,” said Alex Arefiev, a professor of mechanical and aerospace engineering at the UC San Diego Jacobs School of Engineering.