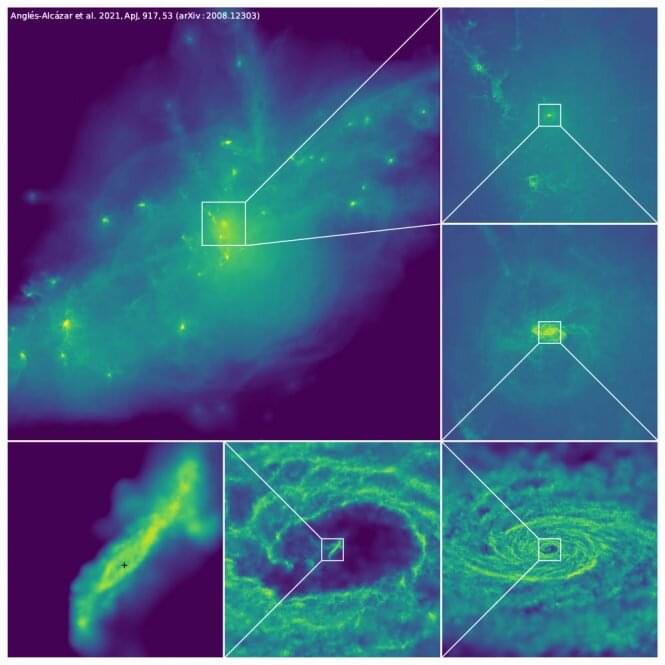

At the center of galaxies, like our own Milky Way, lie massive black holes surrounded by spinning gas. Some shine brightly, with a continuous supply of fuel, while others go dormant for millions of years, only to reawaken with a serendipitous influx of gas. It remains largely a mystery how gas flows across the universe to feed these massive black holes.

UConn Assistant Professor of Physics Daniel Anglés-Alcázar, lead author of a paper published today in The Astrophysical Journal, uses new, high-powered simulations to address some of the questions surrounding these massive and enigmatic features of the universe.

“Supermassive black holes play a key role in galaxy evolution and we are trying to understand how they grow at the centers of galaxies,” says Anglés-Alcázar. “This is very important not just because black holes are very interesting objects on their own, as sources of gravitational waves and all sorts of interesting stuff, but also because we need to understand what the central black holes are doing if we want to understand how galaxies evolve.”