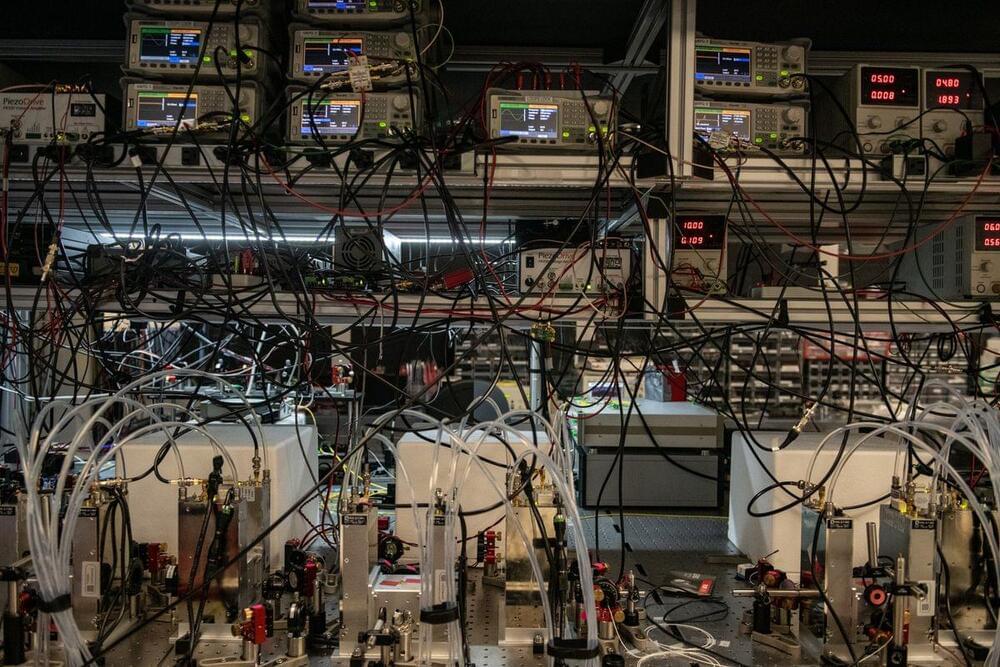

Qubits are a basic building block for quantum computers, but they’re also notoriously fragile—tricky to observe without erasing their information in the process. Now, new research from the University of Colorado Boulder and the National Institute of Standards and Technology (NIST) could be a leap forward for handling qubits with a light touch.

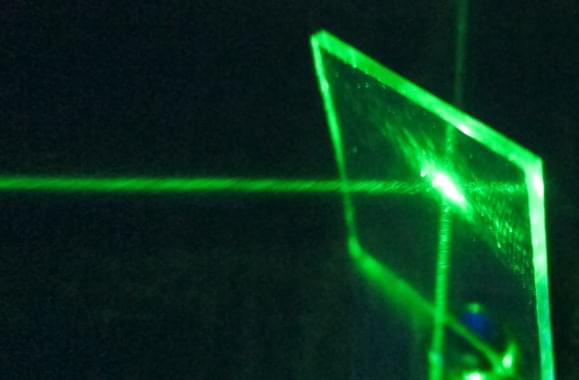

In the study, a team of physicists demonstrated that it could use laser light to read out the signals from a type of qubit called a superconducting qubit, without destroying the qubit in the process.

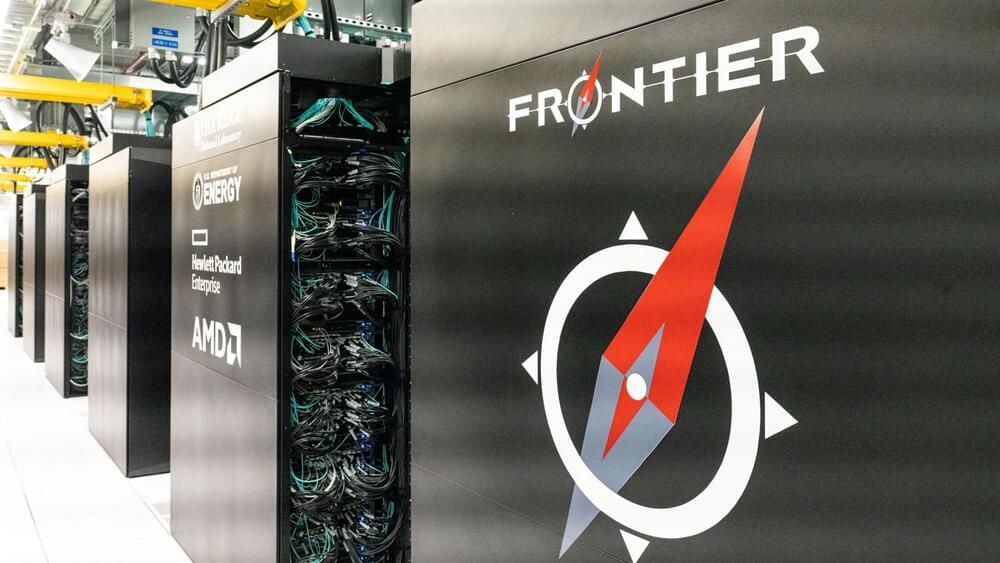

The group’s results could be a major step toward building a quantum internet, the researchers say. Such a network would link up dozens or even hundreds of quantum chips, allowing engineers to solve problems that are beyond the reach of even the fastest supercomputers available today. In theory, engineers could also use a similar set of tools to send unbreakable codes over long distances.