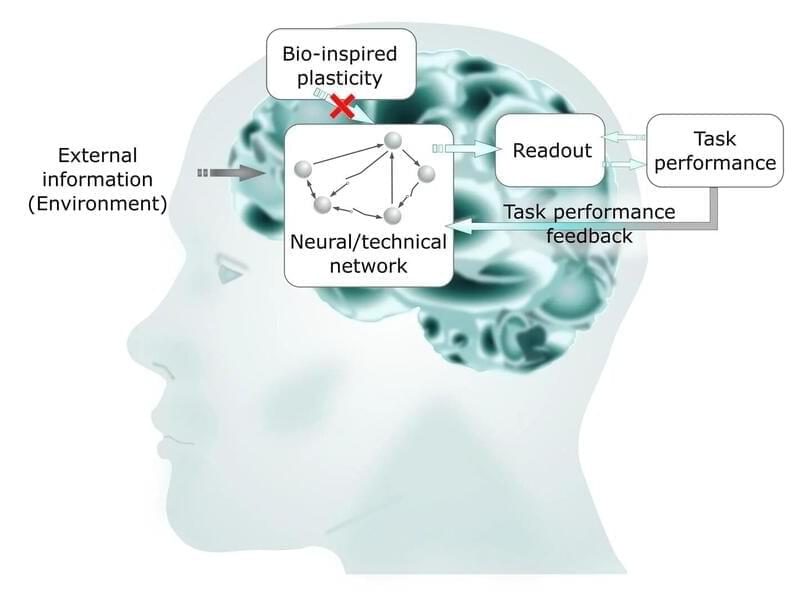

Using mathematical modeling, a research team has now gained a better understanding of how the human brain reaches its optimal working state, known as criticality. Their results, published in Scientific Reports, mark an important step toward biologically inspired information processing and new, highly efficient computer technologies.

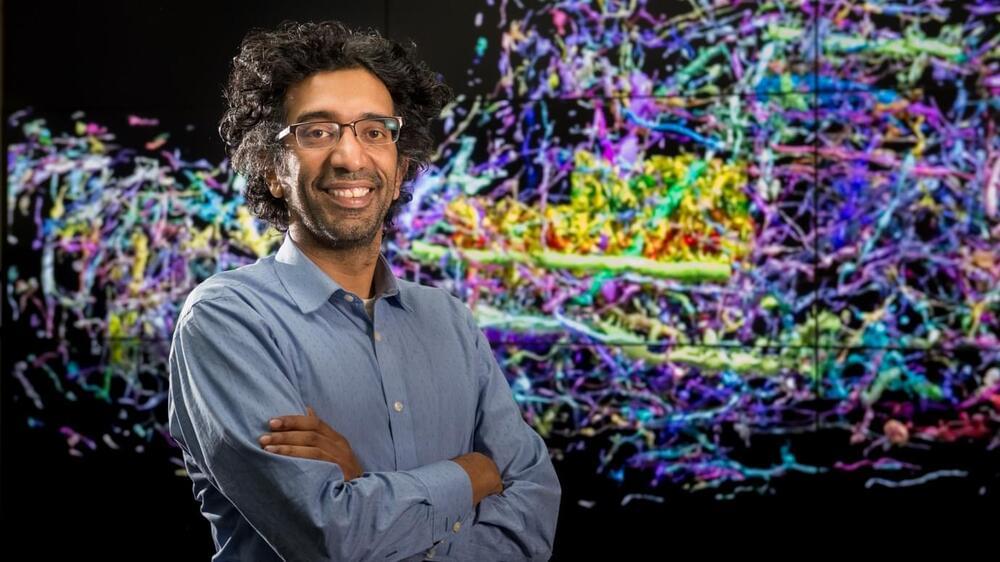

“In certain tasks, supercomputers are better than humans, for example in the field of artificial intelligence. But they can’t manage the variety of tasks in everyday life: driving a car first, then making music and telling a story at a get-together in the evening,” explains Hermann Kohlstedt, professor of nanoelectronics. Moreover, today’s computers and smartphones still consume an enormous amount of energy.

“These are not sustainable technologies, while our brain consumes just 25 watts in everyday life,” Kohlstedt continues. The aim of their interdisciplinary research network, “Neurotronics: Bio-inspired Information Pathways,” is therefore to develop new electronic components for more energy-efficient computer architectures. To this end, the alliance of engineering, life sciences, and natural sciences investigates how the human brain works and how that functioning has developed.