A neuromorphic supercomputer called DeepSouth will be capable of 228 trillion synaptic operations per second, which is on par with the estimated number of operations in the human brain.

Simulations of binary neutron star mergers suggest that future detectors will distinguish between different models of hot nuclear matter.

Researchers used supercomputer simulations to explore how neutron star mergers affect gravitational waves, finding a key relationship with the remnant’s temperature. This study aids future advancements in detecting and understanding hot nuclear matter.

Exploring neutron star mergers and gravitational waves.

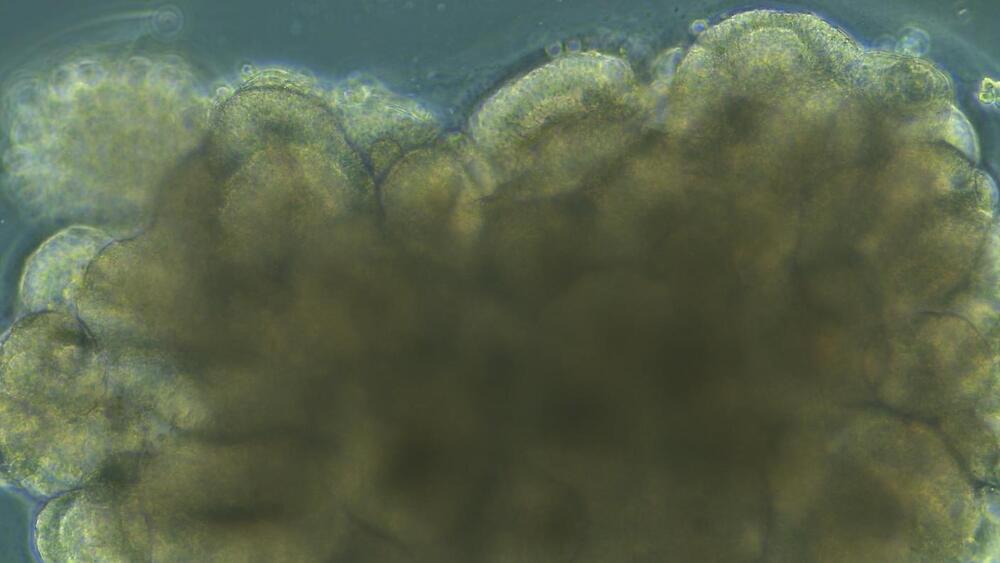

The mini-brain functioned like both the central processing unit and memory storage of a supercomputer. It received input in the form of electrical zaps and outputted its calculations through neural activity, which was subsequently decoded by an AI tool.

When trained on soundbites from a pool of people—transformed into electrical zaps—Brainoware eventually learned to pick out the “sounds” of specific people. In another test, the system successfully tackled a complex math problem that’s challenging for AI.

The system’s ability to learn stemmed from changes to neural network connections in the mini-brain—which is similar to how our brains learn every day. Although just a first step, Brainoware paves the way for increasingly sophisticated hybrid biocomputers that could lower energy costs and speed up computation.

Researchers at Western Sydney University in Australia have teamed up with tech giants Intel and Dell to build a massive supercomputer intended to simulate neural networks at the scale of the human brain.

They say the computer, dubbed DeepSouth, is capable of emulating networks of spiking neurons at a mind-melting 228 trillion synaptic operations per second, putting it on par with the estimated rate at which the human brain completes operations.

The project was announced at this week’s NeuroEng Workshop hosted by Western Sydney’s International Centre for Neuromorphic Systems (ICNS), a forum for luminaries in the field of computational neuroscience.

In the realm of computing technology, there is nothing quite as powerful and complex as the human brain. With its 86 billion neurons and up to a quadrillion synapses, the brain has unparalleled capabilities for processing information. Unlike traditional computing devices, which keep processing and memory in physically separate units, the brain serves as both processor and memory device, and much of its efficiency stems from that. Recognizing the potential of harnessing the brain’s power, researchers have been striving to create more brain-like computing systems.

Efforts to mimic the brain’s activity in artificial systems have been ongoing, but progress has been limited. Even one of the most powerful supercomputers in the world, RIKEN’s K computer, struggled to simulate just a fraction of the brain’s activity. With its 82,944 processors and a petabyte of main memory, it took 40 minutes to simulate one second of the activity of 1.73 billion neurons connected by 10.4 trillion synapses. That network represented only one to two percent of the brain’s neurons and synapses.
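To make the scale of that gap concrete, the short Python sketch below redoes the arithmetic using only the figures quoted in the preceding paragraphs (86 billion neurons, up to a quadrillion synapses, and the K computer run). The variable and function names are illustrative, and the quadrillion-synapse figure is treated as an upper bound, so the synapse percentage is a rough lower estimate.

```python
# Figures quoted in the text above; names are illustrative only.
SIM_WALL_SECONDS = 40 * 60   # 40 minutes of compute time
SIM_BIO_SECONDS = 1          # one second of simulated brain activity
SIM_NEURONS = 1.73e9         # neurons in the simulated network
SIM_SYNAPSES = 10.4e12       # synapses in the simulated network
BRAIN_NEURONS = 86e9         # estimated neurons in a human brain
BRAIN_SYNAPSES = 1e15        # "up to a quadrillion" synapses (upper bound)


def slowdown_factor(wall_seconds: float, bio_seconds: float) -> float:
    """How many times slower than real time the simulation ran."""
    return wall_seconds / bio_seconds


def fraction_percent(part: float, whole: float) -> float:
    """Share of `whole` covered by `part`, as a percentage."""
    return 100.0 * part / whole


slowdown = slowdown_factor(SIM_WALL_SECONDS, SIM_BIO_SECONDS)
neuron_pct = fraction_percent(SIM_NEURONS, BRAIN_NEURONS)
synapse_pct = fraction_percent(SIM_SYNAPSES, BRAIN_SYNAPSES)

print(f"Slowdown versus real time: {slowdown:.0f}x")
print(f"Share of brain neurons simulated: {neuron_pct:.2f}%")
print(f"Share of brain synapses simulated: {synapse_pct:.2f}%")
```

Under these figures the run was thousands of times slower than real time while covering only a percent or two of the brain, which is where the "one to two percent" estimate comes from.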

In recent years, scientists and engineers have delved into the realm of neuromorphic computing, which aims to replicate the brain’s structure and functionality. By designing hardware and algorithms that mimic the brain, researchers hope to overcome the limitations of traditional computing and improve energy efficiency. However, despite significant progress, neuromorphic computing still poses challenges, such as high energy consumption and time-consuming training of artificial neural networks.

Tesla is pushing the boundaries of AI and supercomputing with the development of Dojo 2, aiming to build the world’s biggest supercomputer by the end of next year, and setting high goals for performance and cost efficiency.

Questions to inspire discussion.

Who is leading Tesla’s Dojo supercomputer project?

Peter Bannon is the new leader of Tesla’s Dojo supercomputer project, replacing the previous head, Ganesh Venkataramanan.

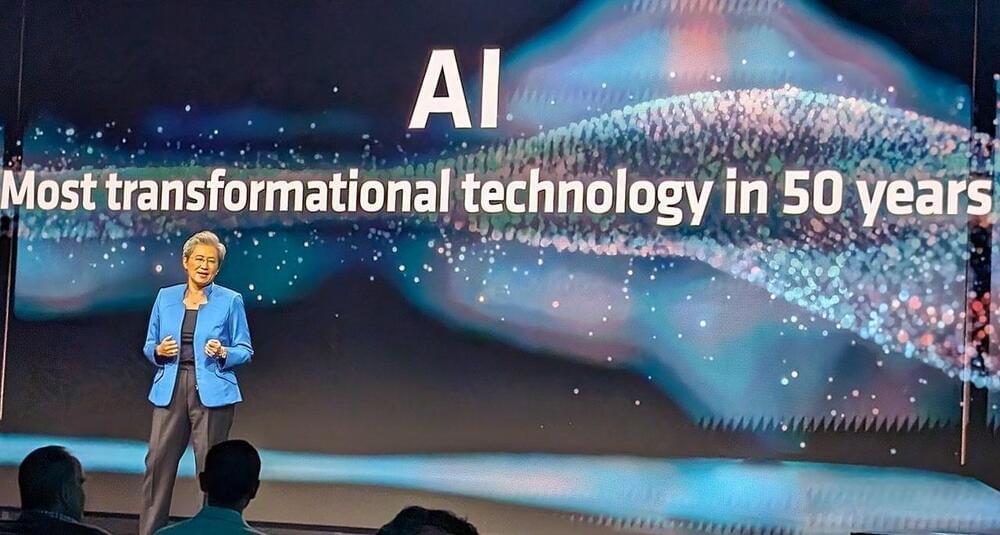

AMD provided a deep-dive look at its latest AI accelerator arsenal for data centers and supercomputers, as well as consumer client devices, but software support, optimization and developer adoption will be key.

Advanced Micro Devices held its Advancing AI event in San Jose this week, and in addition to launching new AI accelerators for the data center, supercomputing and client laptops, the company also laid out its software and ecosystem enablement strategy with an emphasis on open source accessibility. Market demand for AI compute resources is currently outstripping supply from incumbents like Nvidia, so AMD is racing to provide compelling alternatives. Underscoring this, AMD CEO Dr. Lisa Su noted that the company is raising its TAM forecast for AI accelerators from the $150 billion it projected a year ago to $400 billion by 2027, with a 70% compound annual growth rate. Artificial intelligence is obviously a massive opportunity for the major chip players, but the true potential market demand is anybody’s guess. AI will be so transformational that it will impact virtually all industries in some way or another. Regardless, the market will likely be welcoming and eager for these new AI silicon engines and tools from AMD.
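As a rough sanity check on that forecast, the Python sketch below works out what starting market size a 70% compound annual growth rate implies for a $400 billion market in 2027. The text does not state the base year, so the four compounding years (2023 to 2027) are an assumption, and the function names are hypothetical.

```python
TARGET_TAM_BILLION = 400.0   # forecast for 2027, from the text
CAGR = 0.70                  # 70% compound annual growth rate, from the text
YEARS = 4                    # ASSUMPTION: growth compounds from 2023 to 2027


def implied_base(target: float, rate: float, years: int) -> float:
    """Starting value that grows to `target` after `years` at `rate`."""
    return target / (1.0 + rate) ** years


def project(base: float, rate: float, years: int) -> float:
    """Value of `base` after compounding at `rate` for `years`."""
    return base * (1.0 + rate) ** years


implied_base_billion = implied_base(TARGET_TAM_BILLION, CAGR, YEARS)
print(f"Implied starting market: ${implied_base_billion:.1f} billion")

# Year-by-year path under the same assumption.
for year in range(YEARS + 1):
    value = project(implied_base_billion, CAGR, year)
    print(f"Year {2023 + year}: ${value:.1f} billion")
```

With a different base year the implied starting figure changes substantially, so this only illustrates how quickly a 70% rate compounds.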

AMD’s data center group formally launched two major products this week: the MI300X, for the enterprise and cloud AI market, and the MI300A, for the supercomputing market. Each is purpose-built for its application, but both are based on similar chiplet-enabled architectures with advanced 3D packaging techniques and a mix of optimized 5nm and 6nm semiconductor fab processes. The Instinct MI300A, AMD’s high-performance computing AI accelerator, combines the company’s CDNA 3 data center GPU architecture with Zen 4 CPU core chiplets (24 EPYC Genoa cores) and 128GB of shared, unified HBM3 memory that both the GPU accelerators and CPU cores can access, as well as 256MB of Infinity Cache. The chip comprises a whopping 146 billion transistors and offers up to 5.3 TB/s of peak memory bandwidth, with its CPU, GPU, and IO connected via AMD’s high-speed serial Infinity Fabric.

This AMD accelerator can also run as both a PCIe-connected add-in device and a root complex host CPU. All-in, the company is making bold claims for MI300A in HPC, with up to a 4X performance lift versus Nvidia’s H100 accelerator in applications like OpenFOAM for computational fluid dynamics, and up to a 2X performance-per-watt uplift over Nvidia’s GH200 Grace Hopper Superchip. AMD MI300A will also power HPE’s El Capitan at the Lawrence Livermore National Laboratory, which is expected to be the world’s first two-exaflop supercomputer and to overtake Frontier (also powered by AMD) as the fastest, most powerful supercomputer in the world.

No specifications have been revealed, but officials have claimed that it surpasses the capabilities of the famous Tianhe-2 supercomputer.

The National Supercomputing Center (NSC) in Guangzhou, China, has unveiled the Tianhe Xingyi, a homegrown supercomputer, at an industrial event in Guangdong Province, according to several media reports. The NSC is the parent organization under whose guidance the Tianhe-2 supercomputer was also developed.

Supercomputers are a crucial component of a nation’s progress as they aid in solving the most complex and technical problems. The US has conventionally led the world in hosting the fastest supercomputers, as captured by the TOP500 listings, while also leading in the absolute number of supercomputers available to its researchers.

The computing prowess of supercomputers can be used to run simulations for understanding climate change, conduct materials research, support space exploration, and search for cures for various diseases. Of late, supercomputers have assumed importance for developing AI models, and access to advanced supercomputers could be critical in determining who leads the next frontier of information technology.