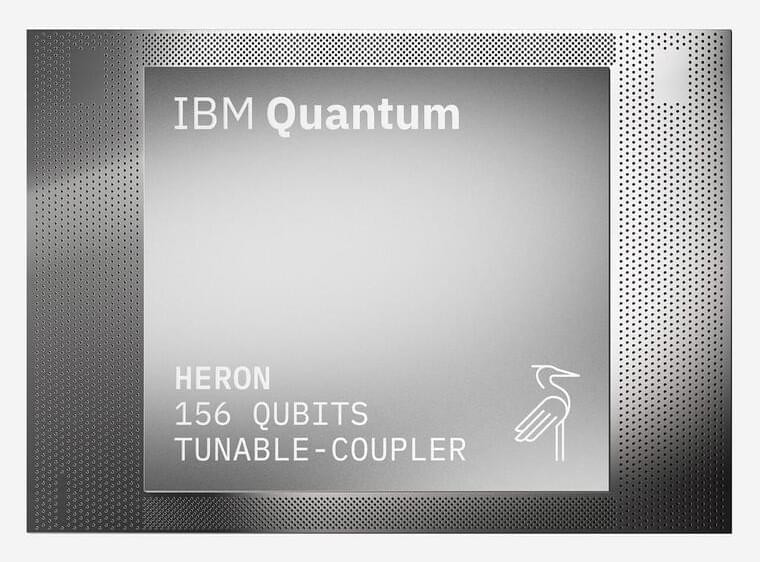

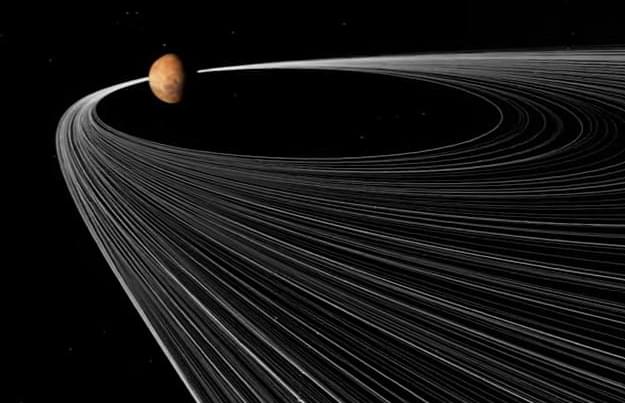

The future of technology often feels like science fiction, and a recent exchange between Google CEO Sundar Pichai and SpaceX CEO Elon Musk showed just how close that fiction can feel. After Google unveiled its new quantum chip, Willow, the two floated a bold idea: launching quantum computers into space. The concept could not only transform how quantum computers are built and run but also push the boundaries of modern science.

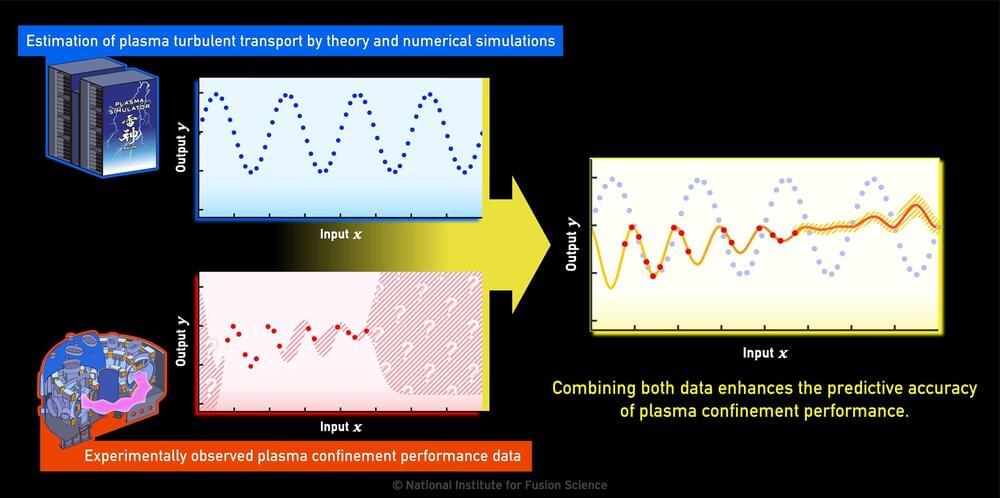

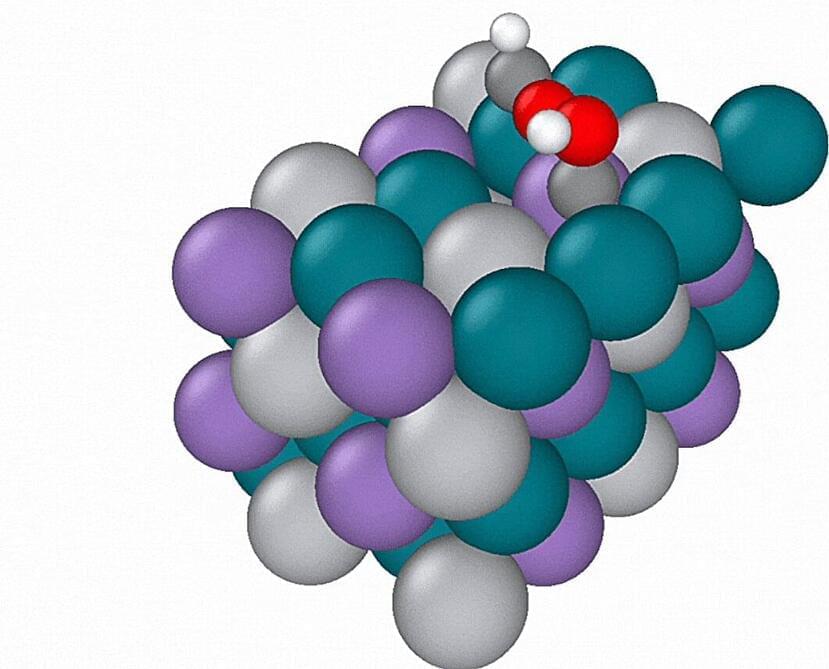

Quantum computing has long promised to solve problems far beyond the reach of classical computers, and Google's Willow chip appears to be making progress toward that goal. In a recent demonstration, the chip completed a standard benchmark computation (random circuit sampling) in under five minutes. Google estimates the same task would take one of today's fastest classical supercomputers about 10 septillion (10^25) years.

Google's researchers note that this estimated classical runtime exceeds known timescales in physics and vastly exceeds the age of the universe, and they see the result as a step toward new possibilities in scientific research and technological development. Despite this promise, however, the field of quantum computing still faces significant challenges.