The United Arab Emirates’ first foray beyond Earth’s orbit is going so smoothly that the nation’s Hope Mars spacecraft will tackle some bonus observations before it reaches its destination, mission leaders have announced.

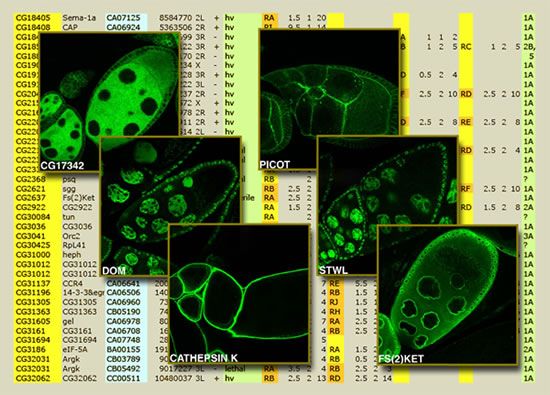

Although Drosophila is an insect whose genome has only about 14,000 genes, fewer than the roughly 20,000 protein-coding genes in humans, a remarkable number of these have very close counterparts in humans; some even occur in the same order in the fly's DNA as in our own. This, plus the organism's more than 100-year history in the lab, makes it one of the most important models for studying basic biology and disease.

To take full advantage of the opportunities offered by Drosophila, researchers need improved tools to manipulate the fly's genes with precision, allowing them to introduce mutations that break genes, control gene activity, label protein products, or make other heritable genetic changes.

“We now have the genome sequences of lots of different animals — worms, flies, fish, mice, chimps, humans,” says Roger Hoskins of Berkeley Lab’s Life Sciences Division. “Now we want improved technologies for introducing precise changes into the genomes of lab animals; we want efficient genome engineering. Methods for doing this are very advanced in bacteria and yeast. Good methods for worms, flies, and mice have also been around for a long time, and improvements have come along fairly regularly. But with whole genome sequences in hand, the goals are becoming more ambitious.”

“An investment in knowledge pays the best interest” — Benjamin Franklin

On November 24th, IBM is running a free online Data & AI Conference for India and the Asia Pacific region, covering four tracks.

Host Mark Sackler and panelists discuss the challenges of getting governments and the public on board with one of the basic principles of longevity research: that the cause of all chronic diseases of aging is aging itself.

Last week, SpaceX launched the beta of its Starlink internet service. The space-based service is proving faster than expected and could give many people around the world opportunities that their socio-economic circumstances would otherwise have denied them.

Here is the petition link: https://www.change.org/p/save-vital-industries-call-for-subs…satellites

Discord Link: https://discord.gg/brYJDEr

Patreon link: https://www.patreon.com/TheFuturistTom

Please follow our Instagram at: https://www.instagram.com/the_futurist_tom/

For business inquiries, please contact [email protected]

Origami-inspired tissue engineering — using eggshells, plant leaves, marine sponges, and paper as substrates.

Ira Pastor, ideaXme life sciences ambassador, interviews Dr. Gulden Camci-Unal, Assistant Professor in the Department of Chemical Engineering, Francis College of Engineering, UMass Lowell.

Ira Pastor comments:

A few episodes ago, ideaXme hosted the University of Michigan's Dr. Bruce Carlson. We spoke with him about the importance of "substrate" in regenerative processes, both for maintaining normal tissue function and for the cell migration and changes in tissue architecture that are part of healing.

A substrate is broadly defined as the surface or material on which, or from which, cells and tissues live, grow, or obtain nourishment; substrates have both biochemical and biomechanical functions.

KENNEDY SPACE CENTER (FL), October 19, 2020 – The Center for the Advancement of Science in Space (CASIS) and the National Science Foundation (NSF) announced three flight projects that were selected as part of a joint solicitation focused on leveraging the International Space Station (ISS) U.S. National Laboratory to further knowledge in the fields of tissue engineering and mechanobiology. Through this collaboration, CASIS, manager of the ISS National Lab, will facilitate hardware implementation, in-orbit access, and astronaut crew time on the orbiting laboratory. NSF invested $1.2 million in the selected projects, which are seeking to advance fundamental science and engineering knowledge for the benefit of life on Earth.

This is the third collaborative research opportunity between CASIS and NSF focused on tissue engineering. Fundamental science is a major line of business for the ISS National Lab, and by conducting research in the persistent microgravity environment offered by the orbiting laboratory, NSF and the ISS National Lab will drive new advances that will bring value to our nation and spur future inquiries in low Earth orbit.

Microgravity affects organisms from viruses and bacteria to humans, inducing changes such as altered gene expression and DNA regulation, changes in cellular function and physiology, and 3D aggregation of cells. Spaceflight is advancing pharmaceutical research, disease modeling, regenerative medicine, and many other areas within the life sciences. The selected projects will use the ISS National Lab and its unique environment to advance fundamental and transformative research that integrates engineering and life sciences.

OneSkin Technologies is a longevity company founded by a team of incredible female PhDs and entrepreneurs who have been using cutting-edge technology to identify the senescent cells that cause your skin to age.

Discover how they use key peptide molecules to eliminate those senescent cells, making you look and feel 10 years younger.

Subscribe for Peter’s latest tech insights & updates: https://www.diamandis.com/subscribe