It's called Singularity.

This new technology could change what we know about computers.

Friday, October 14, 2016, by Ethan A. Huff, staff writer (NaturalNews)

If trees could talk, what would they say? Emerging research suggests that, if they had mouths, they might say a great deal: believe it or not, trees have brains and intelligence, and they communicate with other trees much as humans communicate with one another in social situations.

In Brief:

NASA has announced that all research it has funded will be free and accessible to anyone through its new open portal, PubSpace.

NASA is opening its research library to the public through the newly launched web database PubSpace, and access is completely free.


What a great time to be alive!

Nice POV read.

We know that emerging innovations in cutting-edge science and technology (S&T) areas have the potential to revolutionize governmental structures, economies, and life as we know it. Yet others have argued that such technologies could yield doomsday scenarios, and that their military applications have even greater potential than nuclear weapons to radically change the balance of power. These S&T areas include robotics and autonomous unmanned systems; artificial intelligence; biotechnology, including synthetic and systems biology; the cognitive neurosciences; nanotechnology, including stealth meta-materials; additive manufacturing (aka 3D printing); and the intersection of each with information and computing technologies, i.e., cyber-everything. These concepts and their underlying strategic importance were articulated at the multinational level in NATO's May 2010 New Strategic Concept paper: "Less predictable is the possibility that research breakthroughs will transform the technological battlefield … The most destructive periods of history tend to be those when the means of aggression have gained the upper hand in the art of waging war."

As new and unforeseen technologies emerge worldwide at a seemingly unprecedented pace, news of those discoveries spreads faster than ever. Exclusive ownership of a new technology is therefore no longer a sufficient advantage, if it is even possible. These technologies are also becoming cheaper and more readily available. In today's world, recognizing a technology's potential applications and having a clear purpose in exploiting it matter far more than simply having access to it.

While suggestions such as the claim that nanotechnology will enable a new class of weapons capable of altering the geopolitical landscape remain unrealized, a number of unresolved security puzzles underlying emerging technologies have implications for international security, defense policy, deterrence, governance, and arms control regimes.

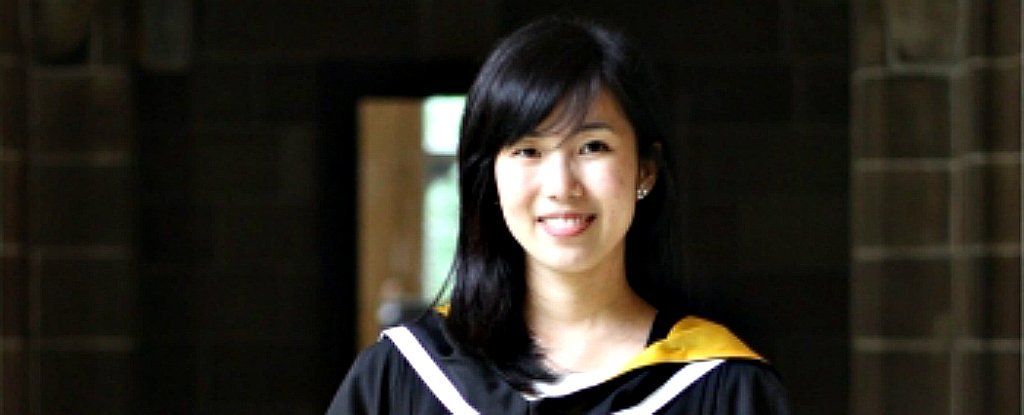

A 25-year-old student has just come up with a way to fight drug-resistant superbugs without antibiotics.

The new approach has so far been tested only in the lab and on mice, but it could offer a potential solution to antibiotic resistance, a problem that has become so severe that the United Nations recently declared it a "fundamental threat" to global health.

Antibiotic-resistant bacteria already kill around 700,000 people each year, but a recent study suggests that number could rise to around 10 million by 2050.

In a recent experiment, Swedish scientist Fredrik Lanner, a developmental biologist at the Karolinska Institute in Stockholm, attempted to modify the genes of human embryos by injecting a gene-editing tool known as CRISPR-Cas9 into five carefully thawed human embryos donated by couples who had undergone in vitro fertilization (IVF). One embryo did not survive the freezing and thawing process, and another was severely damaged during injection. The remaining three embryos, which were two days old when they were injected, survived in good condition, and one of them divided immediately after the injection.

Many scientists have viewed modifying human embryos as crossing a line, for both safety and ethical reasons. The fear is that Lanner's work could open the door to others attempting to use genetically modified embryos to make babies. A single mistake could introduce a new disease into the human gene pool, one that future generations could inherit. Scientists are also concerned about the possibility of "designer babies," in which parents choose the traits they want their children to have.

Fredrik Lanner (right) of the Karolinska Institute in Stockholm and his student Alvaro Plaza Reyes examine a magnified image of a human embryo that they used in their attempt to create genetically modified healthy human embryos. (Credit: Rob Stein/NPR)