Step into the future with “Smart Golden Cities of the Future”, a 1-hour journey exploring how technology and nature will merge to create sustainable, intelligent cities by 2050. In this immersive video, we’ll dive deep into a world where urban spaces are powered by sci-fi innovation, green infrastructure, and advanced technologies. From eco-friendly architecture to autonomous transportation systems, discover how the cities of tomorrow will function in harmony with the environment. Imagine a future with clean energy, smart public services, and a thriving connection to nature, where sustainability and futuristic technology drive every aspect of life. Join us as we uncover the incredible possibilities and challenges of creating urban spaces that work for both people and the planet.

✨ This video was created with passion and love for sharing creative production using AI tools such as:

• 🧠 Research: ChatGPT
• 🖼️ Image Creation: Leonardo, Midjourney, ImageFX
• 🎬 Video Production: Veo 3.1, Runway ML
• 🎵 Music Generation: Suno AI
• ✂️ Video Editing: CapCut Pro

💡 Note: All of the above AI tools are subscription-based.

This project combines imagination and creativity from my perspective as a mechanical engineer who loves exploring the future. Thank you so much for watching! I hope you enjoy this journey and gain inspiration from this creative experience ❤️


Moonshots with Peter Diamandis

Ray, you’ve made two predictions that I think are important. The first one, as you said, was the one you announced back in 1989: that we would reach human-level AI by 2029. And as you said, people laughed at it.

But there’s another prediction you’ve made: that we will reach the Singularity by 2045. There’s a lot of confusion here. In other words, if we reach human-level AI by 2029 and it then grows exponentially, why do we have to wait until 2045 for the Singularity? Could you explain the difference between these two?

It’s because that’s the point at which our intelligence will become a thousand times greater. One of the ways my view differs from others is that I don’t see it as us having our own intelligence—that is, biological intelligence—while AI exists somewhere else, and we interact with it by comparing human intelligence to AI.

Founder of XPRIZE and pioneer in exponential technologies. Building a world of Abundance through innovation, longevity, and breakthrough ventures.

Ben & Marc: Why Everything Is About to Get 10x Bigger

The media and tech landscape is undergoing a significant transformation driven by advancements in AI, technology, and new structures, enabling entrepreneurs and companies to achieve exponential growth and innovation.

## Questions to inspire discussion.

Building Your Own Platform.

🚀 Q: How can writers escape traditional media constraints? A: Launch on decentralized platforms like Substack where you build your own brand and business as a “non-fungible writer”, potentially creating organizations 10x larger than traditional media companies you’d work for.

💰 Q: What makes writer-led platforms attractive investments? A: Platforms become cornerstone franchises when writers only succeed by making the platform successful, creating aligned incentives that generate significant returns while enabling top talent to build independent businesses.

📊 Q: What content opportunity exists in decentralized media? A: A barbell market is emerging with mainstream filler content on one end and massive untapped demand for high-quality niche content on the other, creating opportunities across various specialized domains.

Leveraging AI for Business.

Tesla Ending FSD Sales Because the Value Is About to Change

Tesla is ending the one-time purchase option for Full Self-Driving (FSD) and shifting to a monthly subscription model, likely to recapture the value of the technology as it advances towards full autonomy and potential expansion into a robo-taxi fleet.

## Questions to inspire discussion.

Investment Signal.

🎯 Q: Why is Tesla ending FSD one-time purchases after February 14?

A: Tesla is stopping one-time FSD sales because autonomy is approaching a major inflection point: the value will change in a step once drivers are out of the loop, and Tesla wants to avoid locking in one-time payments at legacy prices before entering the real robo-taxi world.

Revenue Model Transformation.

OpenAI May Be On The Brink of Collapse

OpenAI is facing a potentially crippling lawsuit from Elon Musk, financial strain, and sustainability concerns, which could lead to its collapse and undermine its mission and trust in its AI technology.

## Questions to inspire discussion.

Legal and Corporate Structure.

🔴 Q: What equity stake could Musk claim from OpenAI? A: Musk invested $30M, representing 60% of OpenAI’s original funding, and the lawsuit could force OpenAI to grant him equity as compensation for the nonprofit-to-for-profit transition that allegedly cut him out.

⚖️ Q: What are the trial odds and timeline for Musk’s lawsuit? A: The trial is set for April after a judge rejected OpenAI and Microsoft’s dismissal bid, with Kalshi predicting Musk has a 65% chance of winning the case.

Funding and Financial Stability.

💰 Q: How could the lawsuit impact OpenAI’s ability to raise capital? A: The lawsuit threatens to cut off OpenAI’s lifeline to cash and venture capital funding, potentially leading to insolvency and preventing them from pursuing an IPO due to uncertainty around financial stability and corporate governance.

Sam Altman Cornered by Discovery: Intent & Emails in Elon’s OpenAI Lawsuit

Elon Musk’s lawsuit against OpenAI, together with his ambitious plans for AI and tech innovation (including new devices and massive growth for his companies), is positioning him for a major impact on the tech industry, but these moves also come with significant challenges and risks.

## Questions to inspire discussion.

Legal Risk Management.

🔍 Q: How does the discovery process threaten OpenAI regardless of lawsuit outcome?

A: Discovery forces exposure of sensitive internal information, including Greg Brockman’s 2017 diary entries, which reveal an intent to become for-profit and to violate prior agreements with Elon Musk. This creates reputational damage and investor uncertainty even if OpenAI wins the case.

⏱️ Q: Why is lawsuit timing particularly damaging to OpenAI’s competitive position?

A: The lawsuit hits during OpenAI’s massive capital raise preparation, forcing delays in fundraising and implementation that allow competitors like Google and Anthropic to advance while OpenAI falls behind, similar to how Meta became less relevant in the AI race.

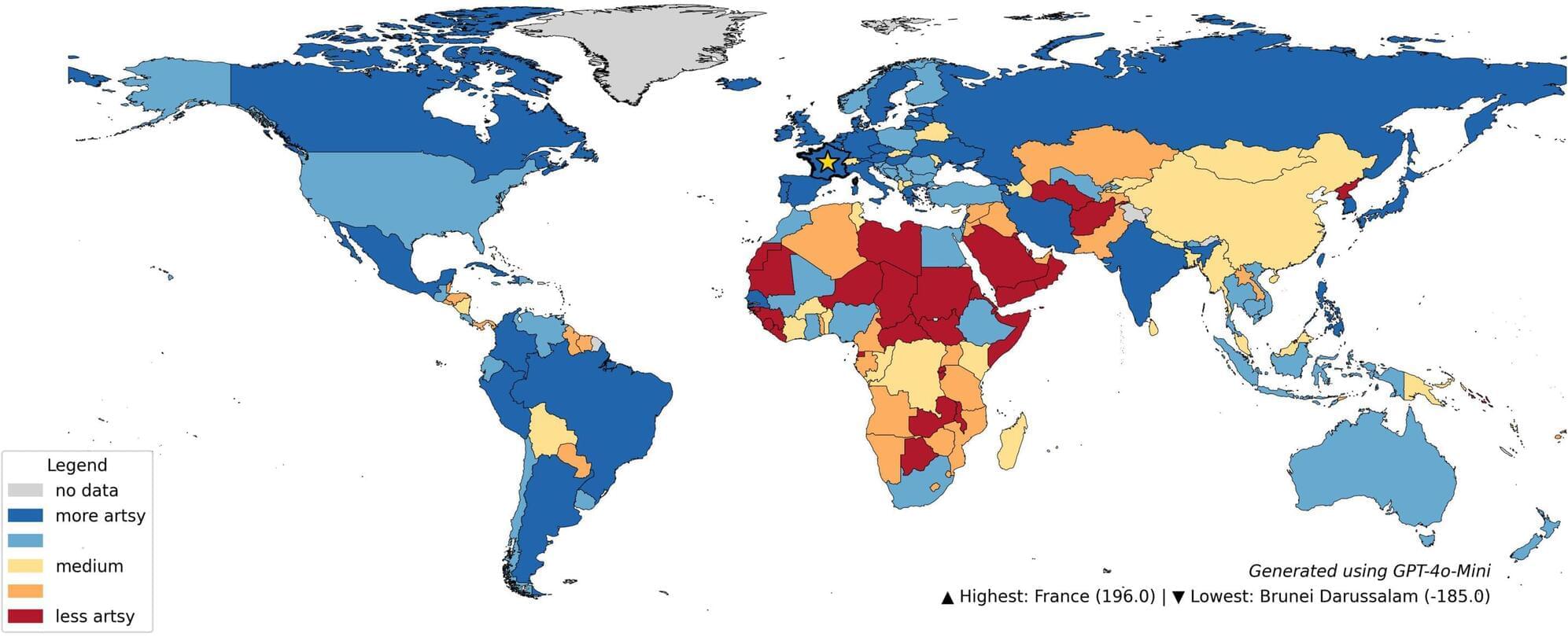

ChatGPT found to reflect and intensify existing global social disparities

New research from the Oxford Internet Institute at the University of Oxford and the University of Kentucky finds that ChatGPT systematically favors wealthier, Western regions in response to questions ranging from “Where are people more beautiful?” to “Which country is safer?”, mirroring long-standing biases in the data it ingests.

The study, “The Silicon Gaze: A typology of biases and inequality in LLMs through the lens of place,” by Francisco W. Kerche, Professor Matthew Zook and Professor Mark Graham, published in Platforms and Society, analyzed over 20 million ChatGPT queries.

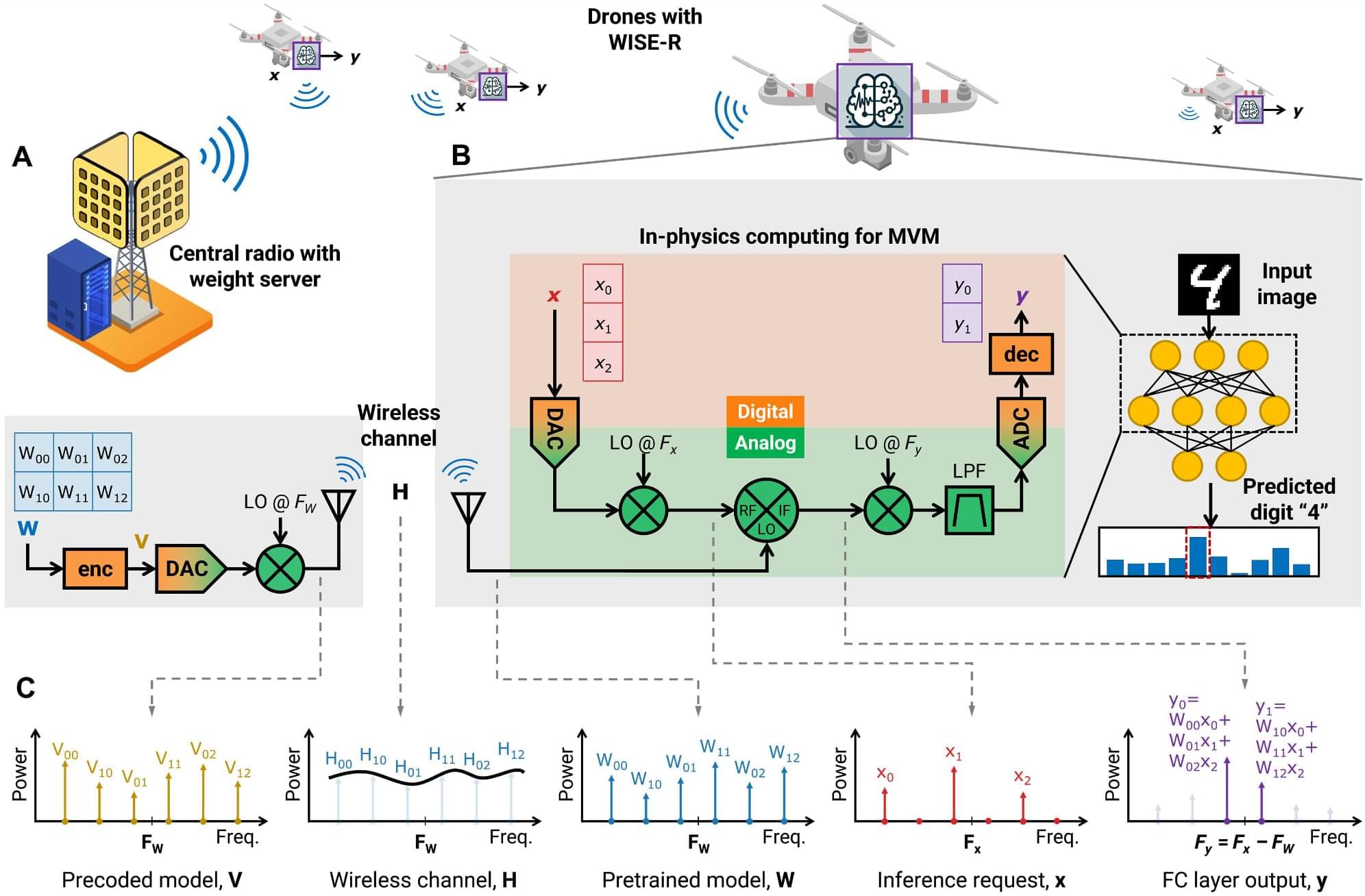

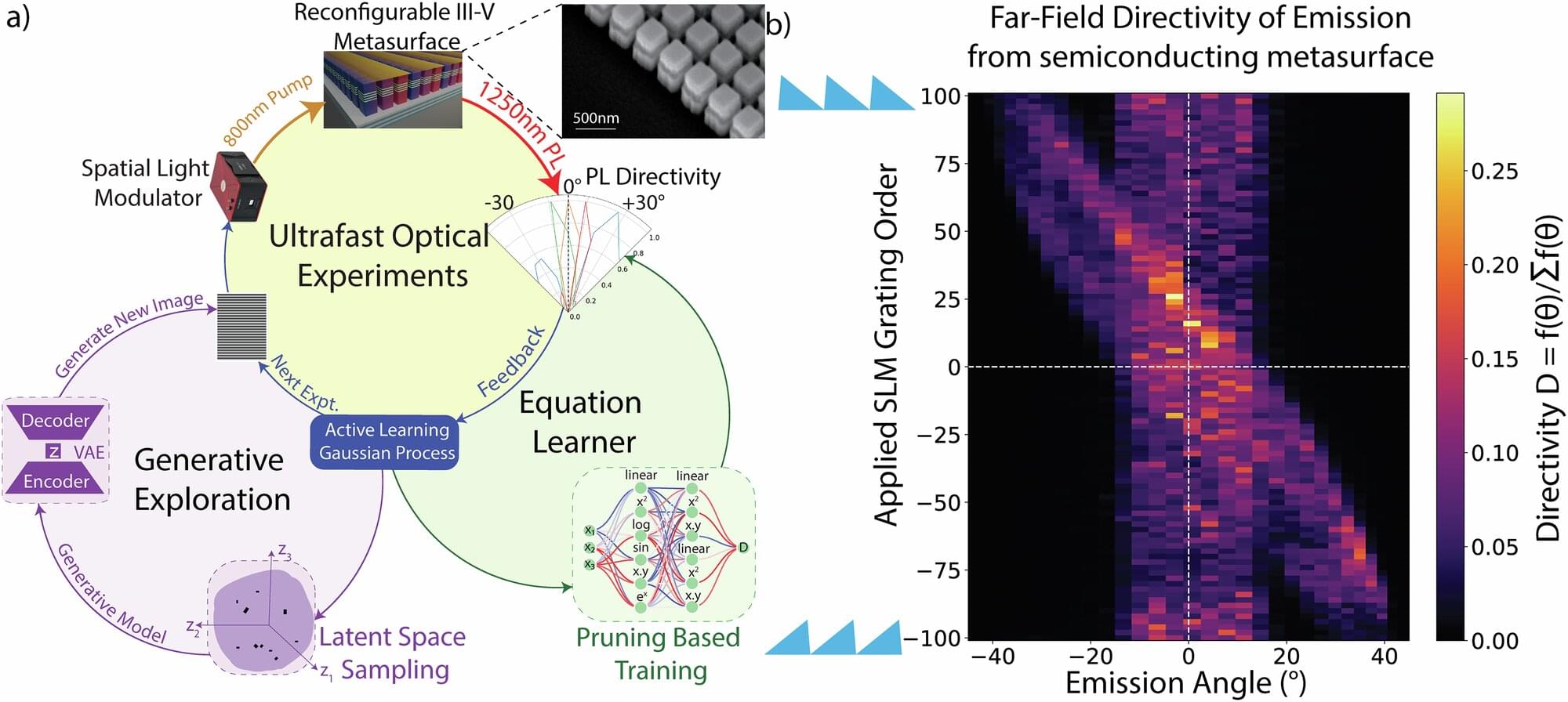

Physicists employ AI labmates to supercharge LED light control

In 2023, a team of physicists from Sandia National Laboratories announced a major discovery: a way to steer LED light. If refined, it could mean someday replacing lasers with cheaper, smaller, more energy-efficient LEDs in countless technologies, from UPC scanners and holographic projectors to self-driving cars. The team assumed it would take years of meticulous experimentation to refine their technique.

Now the same researchers have reported that a trio of artificial intelligence labmates has improved their best results fourfold. It took about five hours.

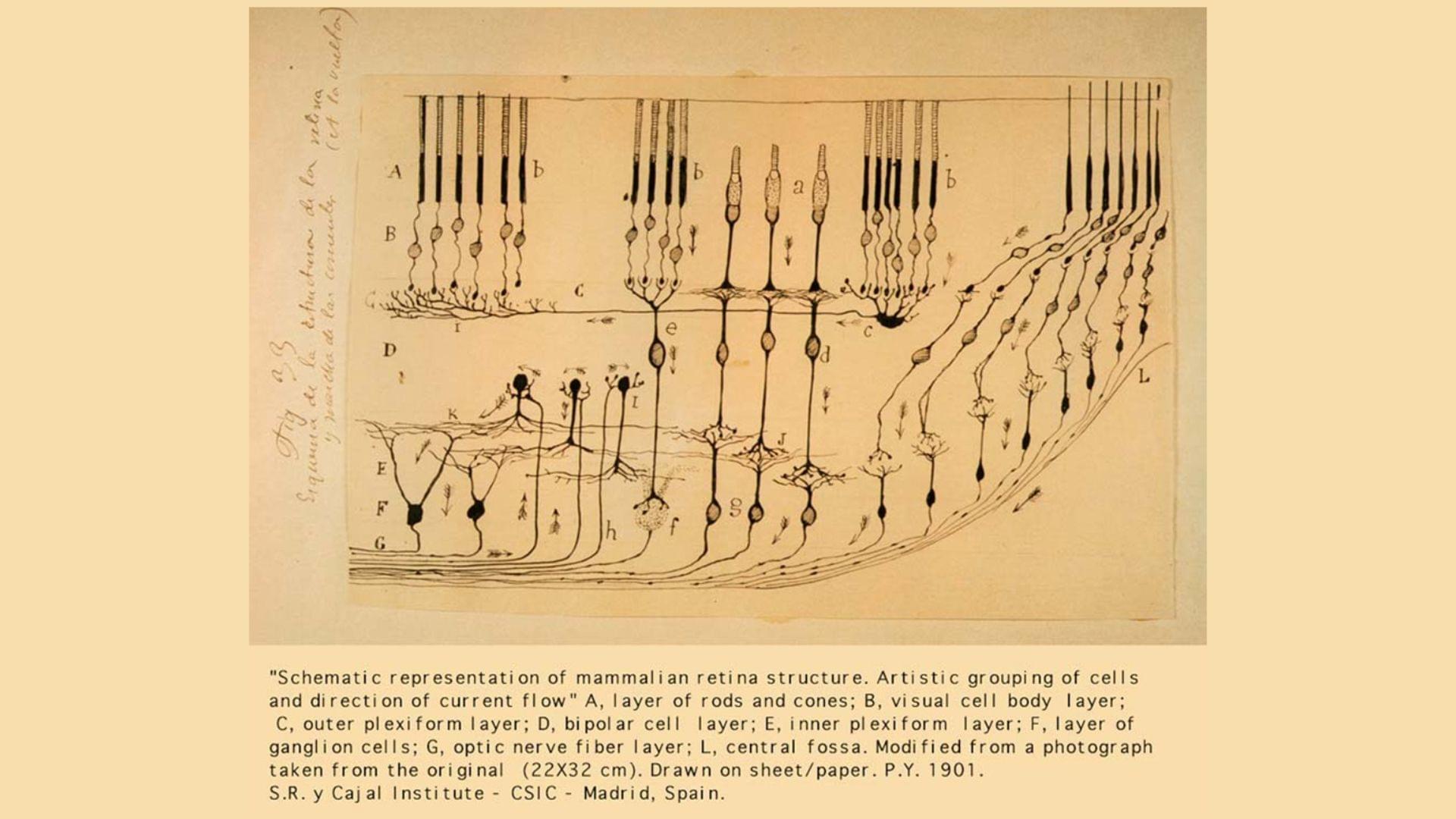

The resulting paper, now published in Nature Communications, shows how AI is advancing beyond a mere automation tool toward becoming a powerful engine for clear, comprehensible scientific discovery.