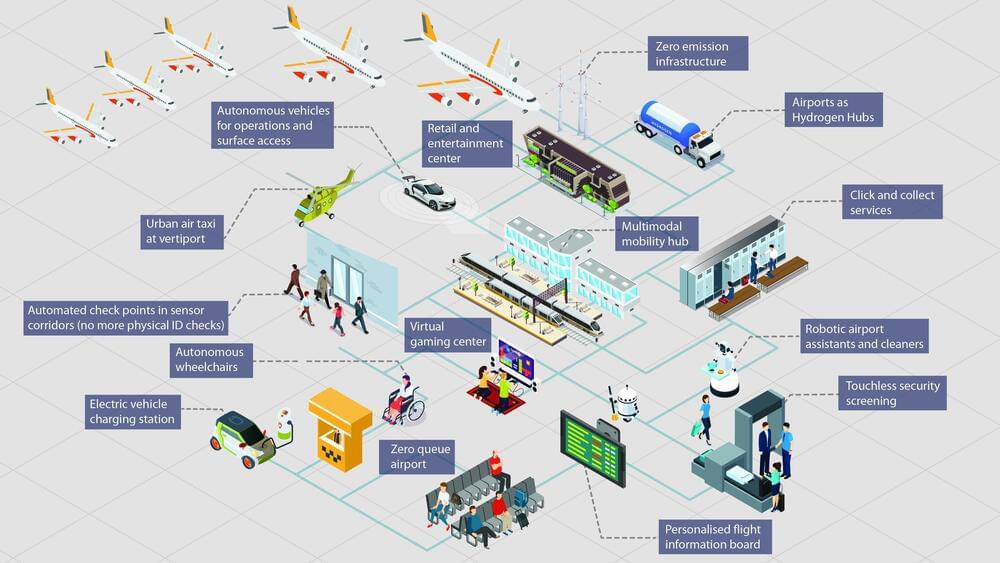

Imagine stepping into an airport where queues are relics of the past, replaced by seamless journeys orchestrated by intelligent machines. This isn’t science fiction – it’s the dawn of Airport 4.0, the cognitive era where airports transform from transit hubs into dynamic, personalized experiences.

As a frequent traveler, I’ve spent countless hours navigating the labyrinthine world of airports. The frustration of long lines, the stress of security checks, the time wasted waiting – it’s all too familiar. But Airport 4.0 paints a radically different picture. Facial recognition whisks me past security, AI-powered apps anticipate my needs, and personalized recommendations guide me to hidden gems within the terminal. This isn’t just a convenience; it’s a paradigm shift that unlocks a world of possibilities. Today, as we stand on the brink of the cognitive era, I’m keen to share my insights on how Airport 4.0 is reshaping the future of air travel, making it not just a journey from A to B but an experience in its own right.

A new report on the Future of Airports from the Markets and Markets Foresighting team delves into what the airport of the future will look like.