Motorized AI webcam makes life feel dynamic again.


🌌 Unifying AI Through the Feynman Path Integral: From Deep Learning to Quantum AI

https://lnkd.in/g4Cfv6qd

I’m pleased to share a framework that brings many areas of AI into a single mathematical structure inspired by the Feynman path integral, a foundational idea in quantum physics. Instead of viewing supervised learning, reinforcement learning, generative models, and quantum machine learning as separate disciplines, this framework shows that they all follow the same underlying principle: learning is a weighted sum over possible solutions (paths), with each path weighted by how well it explains the data. In other words, AI can be viewed the same way Feynman viewed physics: as summing over all possible configurations, weighted by an action functional.
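To make the "weighted sum over paths" idea concrete, here is a toy sketch in Python. It is not the author's framework. It assumes that each "path" is a candidate slope w for the model y = w·x, that the action S(w) is the sum of squared errors on a small made-up dataset, and that the weights take the real, Boltzmann-like form exp(−S/T) rather than Feynman's complex phase exp(iS/ħ). Under those assumptions the sum over paths reduces to Gibbs-weighted model averaging, and the temperature T is a hypothetical knob standing in for ħ.

```python
import math

# Hypothetical toy data, roughly following y = 2x
xs = [0.0, 1.0, 2.0, 3.0]
ys = [0.1, 2.0, 3.9, 6.1]

# Candidate "paths": here simply slopes w for the model y = w * x, on a grid from 0.0 to 4.0
candidates = [i * 0.1 for i in range(41)]


def action(w):
    """Assumed action functional S(w): sum of squared errors on the data."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys))


def path_weights(cands, temperature=1.0):
    """Weights proportional to exp(-S / T), normalized so they sum to 1."""
    actions = [action(w) for w in cands]
    s_min = min(actions)  # shift by the minimum action for numerical stability
    raw = [math.exp(-(s - s_min) / temperature) for s in actions]
    z = sum(raw)  # partition function (normalizer)
    return [r / z for r in raw]


def predict(x_new, temperature=1.0):
    """Prediction as a weighted sum over all candidate paths."""
    ws = path_weights(candidates, temperature)
    return sum(p * (w * x_new) for p, w in zip(ws, candidates))


print(round(predict(4.0), 2))
```

As T shrinks toward zero, the weights concentrate on the single lowest-action candidate, so the prediction approaches a plain least-squares fit restricted to the grid; a larger T spreads weight over more candidates and averages their predictions.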

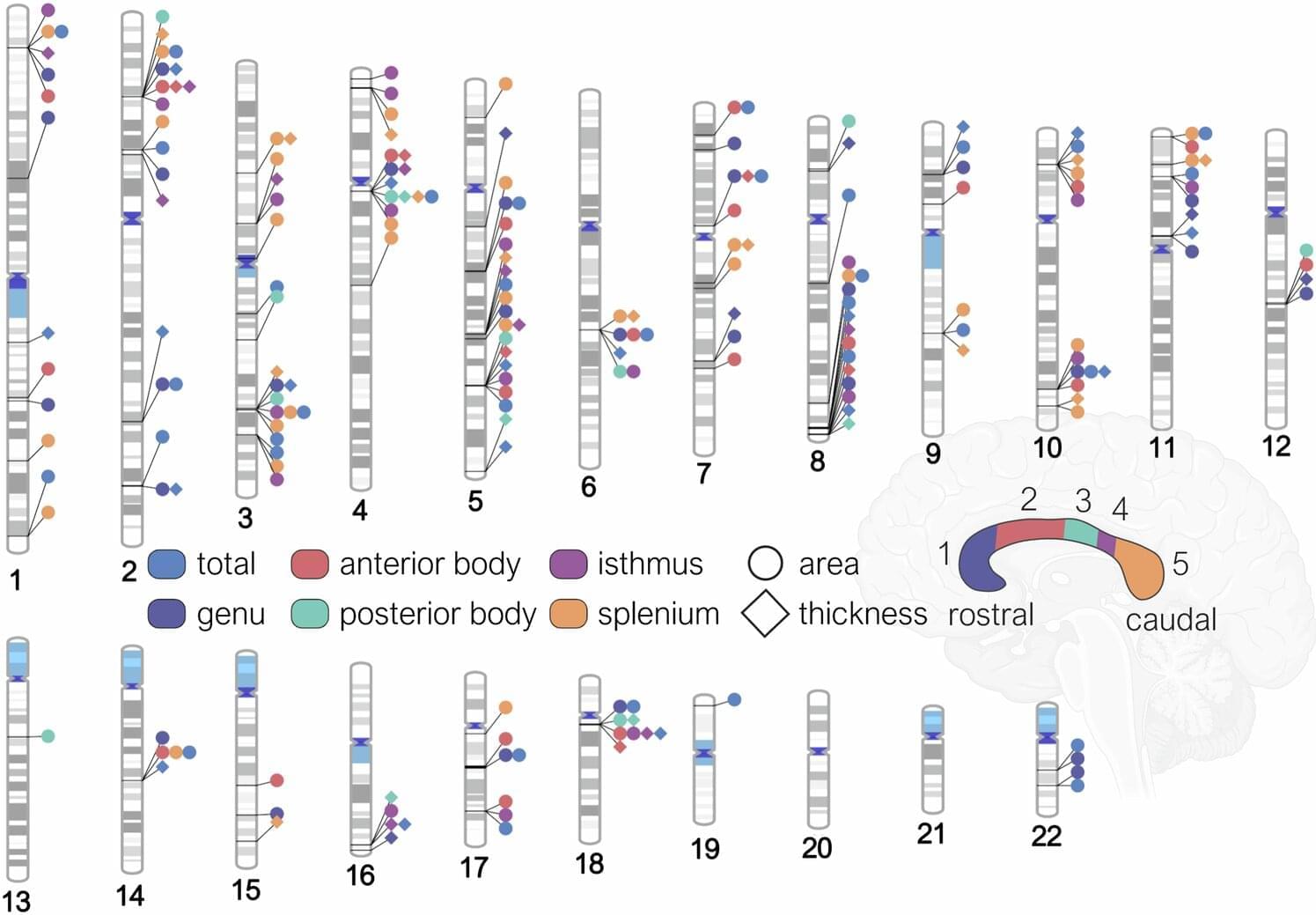

AI tool uncovers genetic blueprint of the brain’s largest communication bridge

For the first time, a research team led by the Mark and Mary Stevens Neuroimaging and Informatics Institute (Stevens INI) at the Keck School of Medicine of USC has mapped the genetic architecture of a crucial part of the human brain known as the corpus callosum—the thick band of nerve fibers that connects the brain’s left and right hemispheres. The findings open new pathways for discoveries about mental illness, neurological disorders and other diseases related to defects in this part of the brain.

The corpus callosum is critical for nearly everything the brain does, from coordinating the movement of our limbs in sync to integrating sights and sounds, to higher-order thinking and decision-making. Abnormalities in its shape and size have long been linked to disorders such as ADHD, bipolar disorder, and Parkinson’s disease. Until now, the genetic underpinnings of this vital structure had remained largely unknown.

In the new study, published in Nature Communications, the team analyzed brain scans and genetic data from over 50,000 people, ranging from childhood to late adulthood, with the help of a new tool the team created that leverages artificial intelligence.

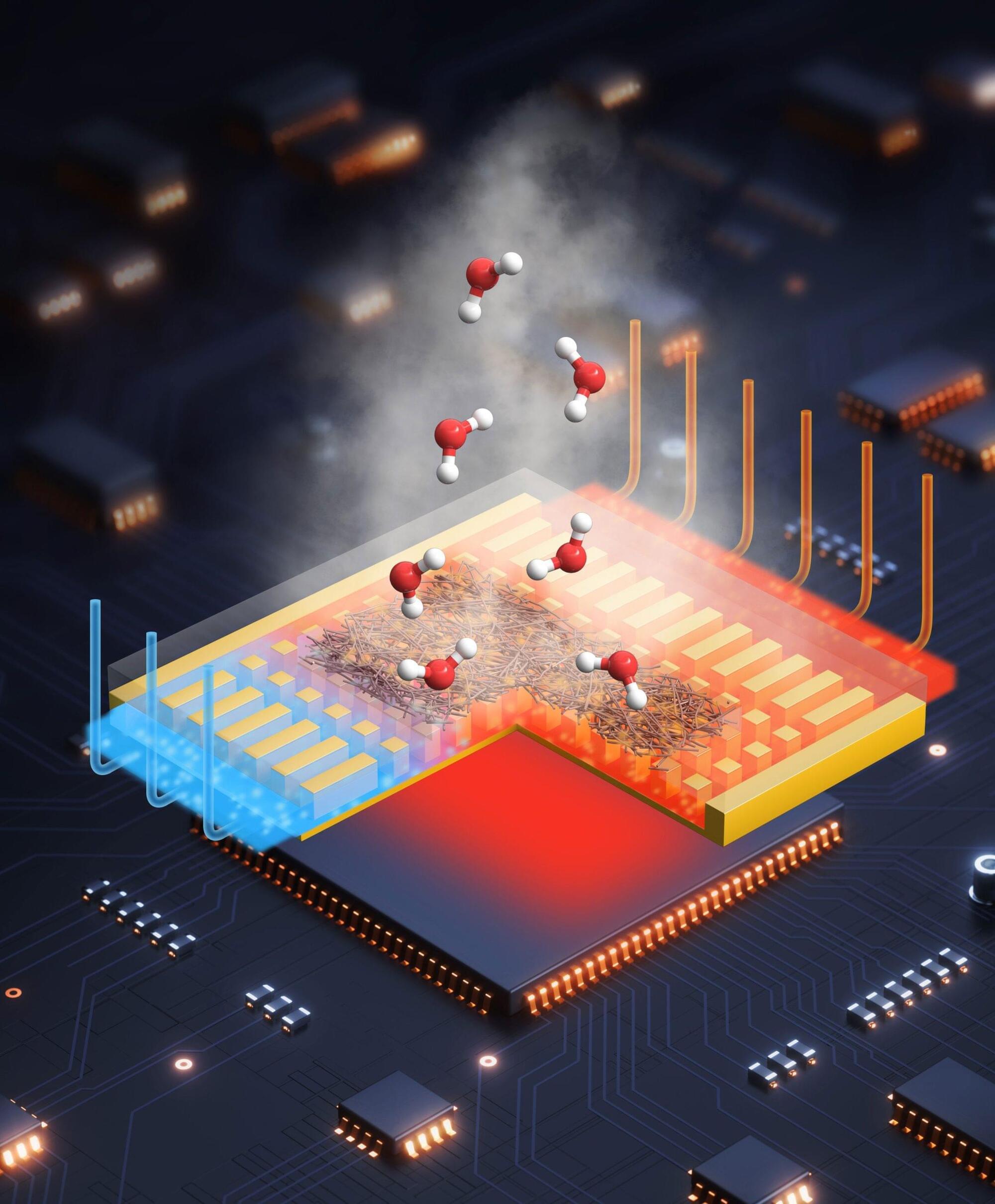

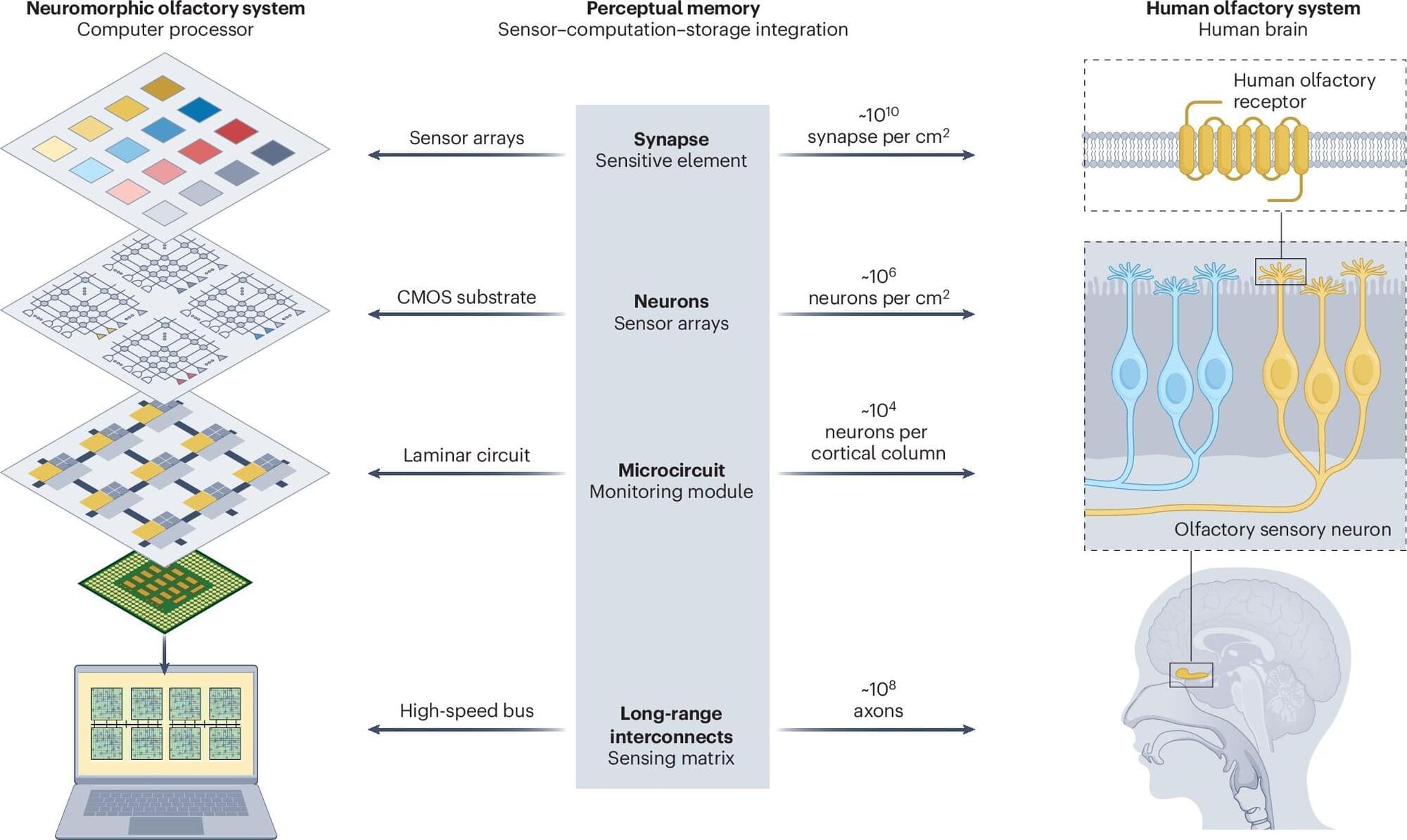

Brain-inspired chips are helping electronic noses better mimic human sense of smell

After years of trying, the electronic nose is finally making major progress, sensing smells almost as well as its human counterpart. That is the conclusion of a scientific review of the development of neuromorphic olfactory perception chips (NOPCs), published in the journal Nature Reviews Electrical Engineering.

Evolution has perfected the human nose over millions of years. This powerful sense organ, while not the best in the animal kingdom, can still detect around a trillion smells. The quest to develop electronic noses with human nose-like abilities for applications like security, robotics, and medical diagnostics has proved notoriously difficult. So scientists have increasingly turned to neuromorphic computing, which involves designing software and hardware that mimic the structure and function of the human olfactory system.

In this review, a team of scientists from China highlights some of the key advances in developing olfactory sensing chips. The paper focuses heavily on gas sensors because they are key components of the electronic nose system. They must physically detect odor molecules and convert them into electrical signals.
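One common way neuromorphic systems handle a sensor's electrical output is to convert it into spikes, much as olfactory neurons do. The sketch below illustrates that idea with a leaky integrate-and-fire neuron that rate-codes a normalized gas-sensor response: a stronger response produces more frequent spikes. It is an assumption for illustration, not a method taken from the review; the function name `lif_encode`, its parameters, and the stimulus values are all hypothetical.

```python
def lif_encode(signal, dt=1e-3, tau=0.02, threshold=1.0, gain=100.0):
    """Encode a sensor signal as spike times with a leaky integrate-and-fire neuron.

    signal: normalized sensor responses (hypothetical units), one value per time step.
    Returns the indices of the time steps at which the neuron fired.
    """
    v = 0.0  # membrane potential
    spikes = []
    for t, s in enumerate(signal):
        # Potential leaks toward 0 with time constant tau and is driven by input gain * s
        v += dt * (-v / tau + gain * s)
        if v >= threshold:
            spikes.append(t)
            v = 0.0  # reset after firing
    return spikes


# Hypothetical stimulus: 200 steps of clean air, then an odor at three response levels
for level in (0.2, 0.6, 1.0):
    signal = [0.0] * 200 + [level] * 800
    print(f"response {level}: {len(lif_encode(signal))} spikes")
```

With these parameters the potential settles at gain·tau·s = 2s, so a response below 0.5 never crosses the threshold, while larger responses fire at rates that grow with concentration. A downstream spiking network could then classify odors from these spike patterns.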

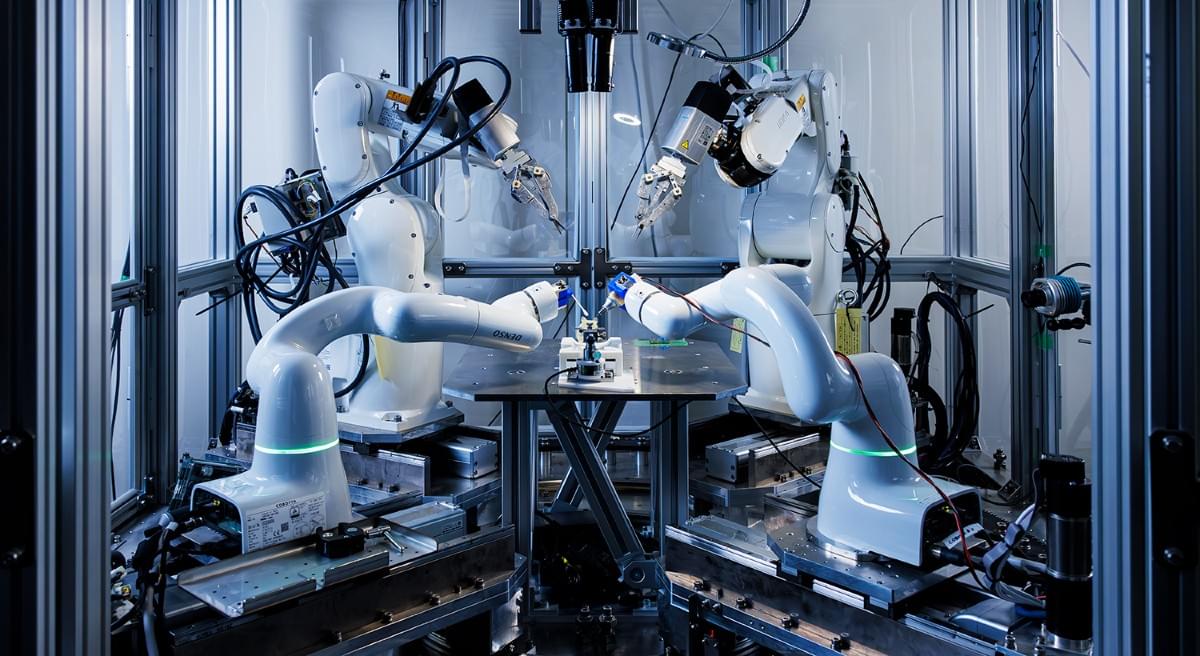

Startup provides a nontechnical gateway to coding on quantum computers

Quantum computers have the potential to model new molecules and weather patterns better than any computer today. They may also one day accelerate artificial intelligence algorithms at a much lower energy footprint. But anyone interested in using quantum computers faces a steep learning curve that starts with getting access to quantum devices and then figuring out one of the many quantum software programs on the market.

Now qBraid, founded by Kanav Setia and Jason Necaise ’20, is providing a gateway to quantum computing with a platform that gives users access to the leading quantum devices and software. Users can log on to qBraid’s cloud-based interface and connect with quantum devices and other computing resources from leading companies like Nvidia, Microsoft, and IBM. In a few clicks, they can start coding or deploy cutting-edge software that works across devices.

“The mission is to take you from not knowing anything about quantum computing to running your first program on these amazing machines in less than 10 minutes,” Setia says. “We’re a one-stop platform that gives access to everything the quantum ecosystem has to offer. Our goal is to enable anyone—whether they’re enterprise customers, academics, or individual users—to build and ultimately deploy applications.”
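A typical "first program" on a quantum platform prepares an entangled Bell state. The sketch below simulates that two-qubit circuit (a Hadamard gate on qubit 0, then a CNOT from qubit 0 to qubit 1) in plain Python so it runs without an account or SDK. It does not use qBraid's interface or any vendor API, and the helper names `apply_h` and `apply_cnot` are hypothetical. On real hardware the same circuit would be submitted through a provider's SDK, and the measured counts would include some noise.

```python
import math
import random


def apply_h(state, qubit):
    """Apply a Hadamard gate to `qubit` of a state vector (basis index bits: q1 q0)."""
    s = 1 / math.sqrt(2)
    new = state[:]
    for i in range(len(state)):
        if not (i >> qubit) & 1:  # visit each amplitude pair once, from its |0> member
            j = i | (1 << qubit)
            a, b = state[i], state[j]
            new[i] = s * (a + b)
            new[j] = s * (a - b)
    return new


def apply_cnot(state, control, target):
    """Flip `target` in every basis state where `control` is 1."""
    new = state[:]
    for i in range(len(state)):
        if (i >> control) & 1 and not (i >> target) & 1:
            j = i | (1 << target)
            new[i], new[j] = state[j], state[i]
    return new


state = [1.0, 0.0, 0.0, 0.0]  # start in |00>
state = apply_h(state, 0)
state = apply_cnot(state, 0, 1)
probs = [abs(a) ** 2 for a in state]  # measurement probabilities (Born rule)

# Simulate 1000 measurement shots with a fixed seed for reproducibility
rng = random.Random(7)
counts = {}
for _ in range(1000):
    outcome = rng.choices(range(len(probs)), weights=probs)[0]
    key = format(outcome, "02b")
    counts[key] = counts.get(key, 0) + 1
print(counts)
```

Only the outcomes 00 and 11 appear, each roughly half the time: measuring one qubit fixes the other, which is the correlation the circuit is meant to demonstrate.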