Researchers detail new AI and phishing kits that steal credentials, bypass MFA, and scale attacks across major services.

Sundar Pichai predicts AI will cause “societal disruption,” but of course, he himself won’t do anything about it.

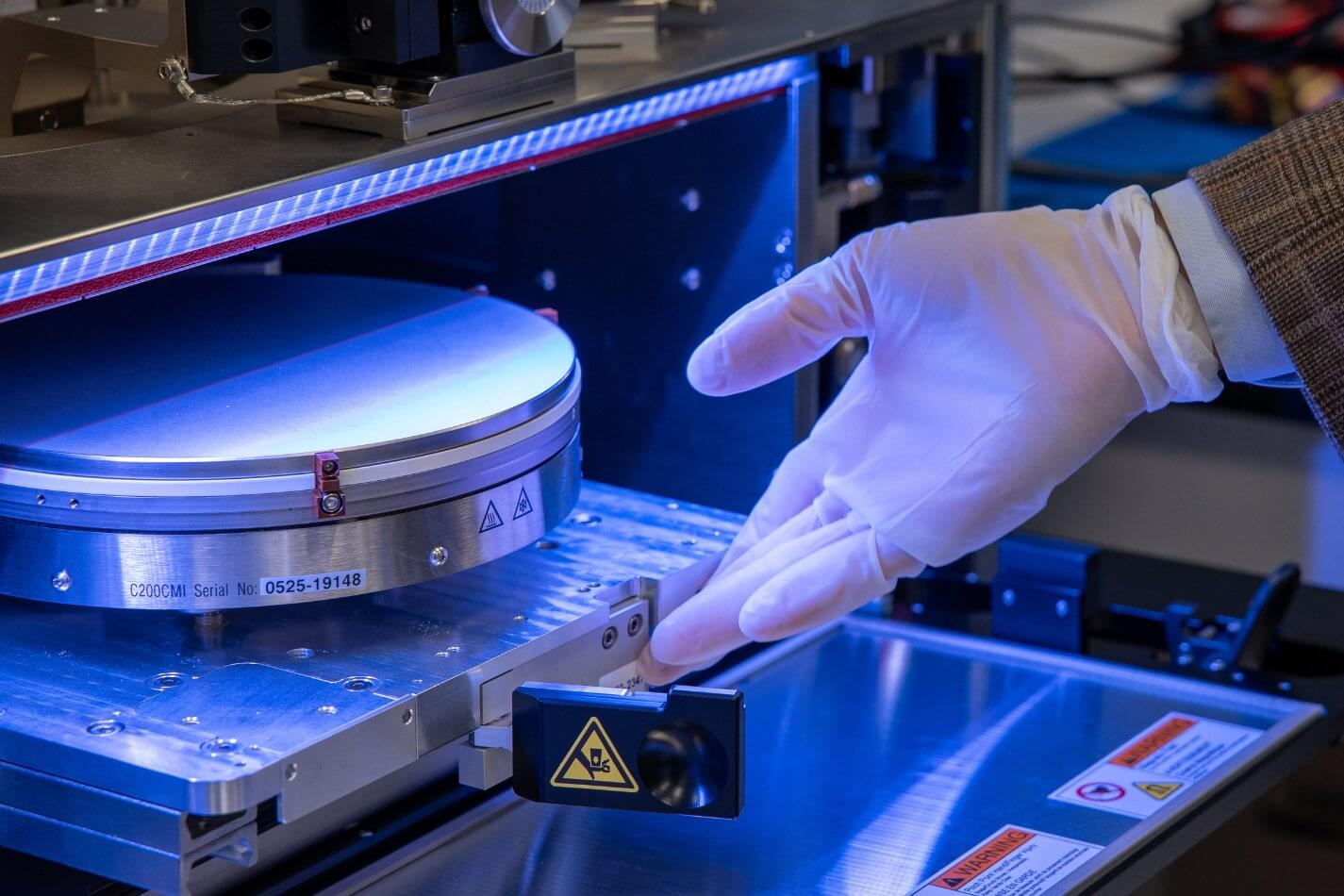

A collaborative team has achieved the first monolithic 3D chip built in a U.S. foundry, delivering the densest 3D chip wiring and order-of-magnitude speed gains.

Engineers at Stanford University, Carnegie Mellon University, University of Pennsylvania, and the Massachusetts Institute of Technology have collaborated with SkyWater Technology, the largest exclusively U.S.-based pure-play semiconductor foundry, to develop a novel multilayer computer chip whose architecture could help usher in a new era of AI hardware and domestic semiconductor innovation.

Unlike today’s largely flat, 2D chips, the new prototype’s key ultra-thin components rise like stories in a tall building, with vertical wiring acting like numerous high-speed elevators that enable fast, massive data movement. Its record-setting density of vertical connections and carefully interwoven mix of memory and computing units help the chip bypass the bottlenecks that have long slowed improvement in flat designs. In hardware tests and simulations, the new 3D chip outperforms 2D chips by roughly an order of magnitude.

Researchers at UCLA have developed an artificial intelligence tool that can use electronic health records to identify patients with undiagnosed Alzheimer’s disease, addressing a critical gap in Alzheimer’s care: significant underdiagnosis, particularly among underrepresented communities.

The study appears in the journal npj Digital Medicine.

After reviewing thousands of benchmarks used in AI development, a Stanford team found that 5% could have serious flaws with far-reaching ramifications.

Each time an AI researcher trains a new model to understand language, recognize images, or solve a medical riddle, one big question remains: Is this model better than what went before? To answer that question, AI researchers rely on batteries of benchmarks, tests that measure and assess a new model’s capabilities. Benchmark scores can make or break a model.

But there are tens of thousands of benchmarks spread across several datasets. Which ones should developers use, and are they all of equal worth?

Predictive computational methods for drug discovery have typically relied on models that incorporate three-dimensional information about protein structure. But these modeling methods face limitations due to high computational costs, expensive training data, and inability to fully capture protein dynamics.

Ainnocence develops predictive AI models based on target protein sequence. By bypassing 3D structural information entirely, sequence-based AI models can screen billions of drug candidates in hours or days. Ainnocence uses amino acid sequence data from target proteins and wet lab data to predict drug binding and other biological effects. The company has demonstrated success in discovering COVID-19 antibodies, and its platform can be used to discover other biomolecules, small molecules, cell therapies, and mRNA vaccines.

Medra, which programs robots with artificial intelligence to conduct and improve biological experiments, has raised $52 million to build what it says will be one of the largest autonomous labs in the United States.

The deal brings Medra’s total funding to $63 million, including pre-seed and seed financing. Existing investor Human Capital led the new round, which came together just weeks after the company started talking publicly about its work in September, Chief Executive Officer Michelle Lee said in an interview at the company’s San Francisco lab. The company recently signed an agreement to work on early drug discovery with Genentech, a subsidiary of pharmaceutical giant Roche Holding AG.

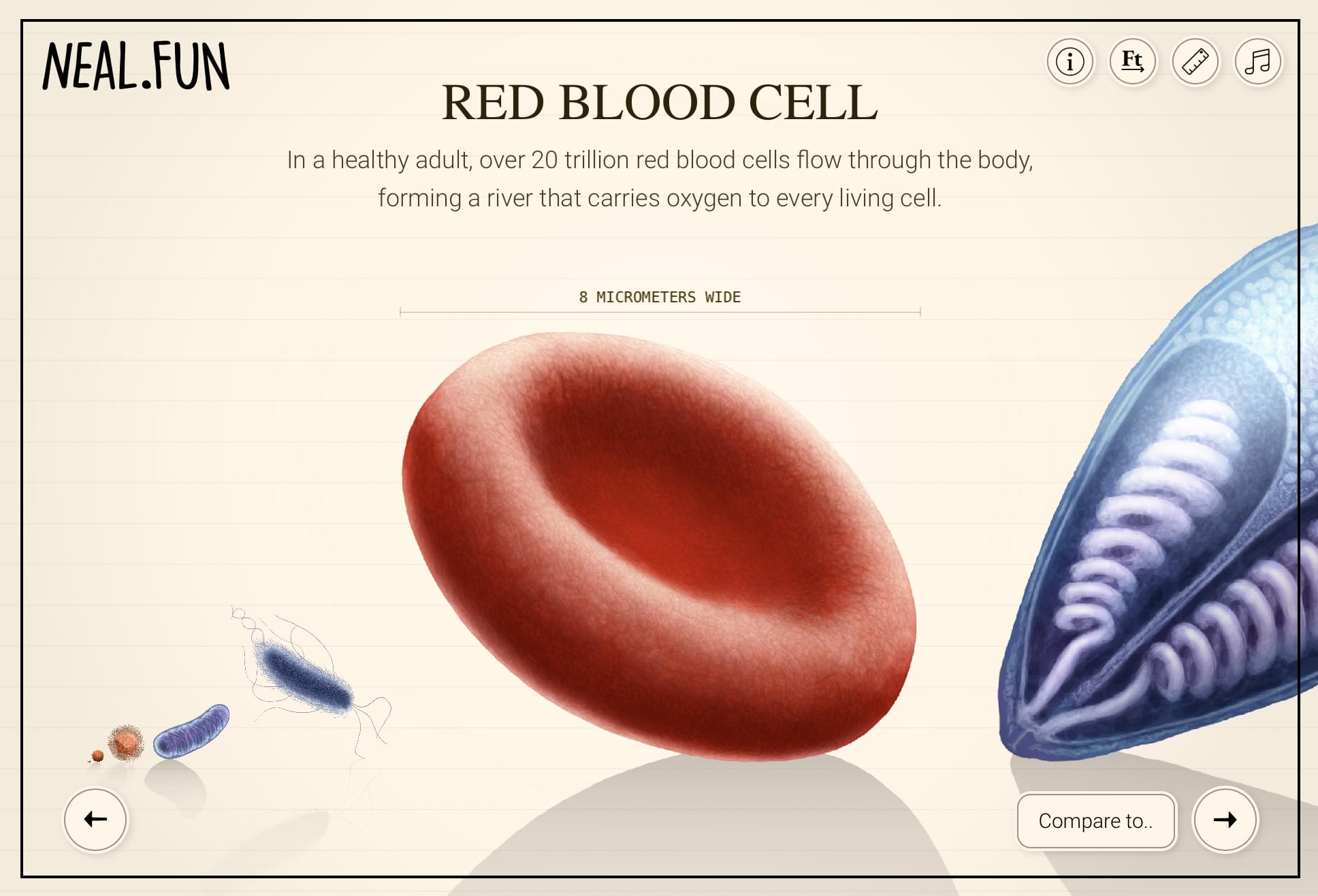

Neal Agarwal published another gift to the internet with Size of Life. It shows the scale of living things, starting with DNA, then hemoglobin, and going up from there.

The scientific illustrations are hand-drawn (without AI) by Julius Csotonyi. The sound and FX by Aleix Ramon and the cello music by Iratxe Ibaibarriaga calm the mind and encourage slow observation, while growing in complexity and weight with the scale. It kind of feels like a meditation exercise.

See also: shrinking to an atom, the speed of light, and of course the classic Powers of Ten.